Goodbye, messy data: An engineer’s guide to scalable data enrichment

In any enterprise application, user-provided data is often messy and incomplete. A user might sign up with a “company name,” but turning that raw string into a verified domain, enriched with key technical or business contacts, is a common and challenging data engineering problem.

For many development teams, this challenge often begins as a seemingly simple request from sales or marketing. It quickly evolves from a one-off task into a recurring source of technical debt.

The initial solution is often a brittle, hastily written script run manually by an engineer. When it inevitably fails on an edge case or the API it relies on changes, it becomes another fire for the on-call developer to extinguish: a costly distraction from core product development.

From an engineering leader’s perspective, this creates a classic dilemma. Dedicating focused engineering cycles to build a robust internal tool for data enrichment can be hard to justify against a product roadmap packed with customer-facing features.

Yet, ignoring the problem leads to inaccurate data, frustrated business teams, and a drain on engineering resources from unplanned, interrupt-driven work. The ideal solution is a scalable, resilient system that can be built and maintained with minimal overhead, turning a persistent operational headache into a reliable, automated internal service.

Sign up for The Replay newsletter

Sign up for The Replay newsletter

The Replay is a weekly newsletter for dev and engineering leaders.

Delivered once a week, it’s your curated guide to the most important conversations around frontend dev, emerging AI tools, and the state of modern software.

Solving this at scale requires a robust, fault-tolerant, and cost-effective pipeline.

This post will guide you through building such a data enrichment workflow. We’ll move beyond simple lead generation and frame this as a powerful internal tool for enterprise use cases like:

- Powering go-to-market teams: Build an automated service that enriches new user sign-ups with verified company data, directly piping actionable intelligence into the tools your sales and support teams use every day.

- Strategic talent sourcing: Feed a list of target companies and identify senior engineering talent to build a high-quality recruitment pipeline.

- Tech partnership development: Identify the right product and engineering contacts at potential partner companies to streamline integration discussions.

We’ll orchestrate this entire process using n8n, a workflow automation tool that shines in complex, multi-step API integrations. Our backend will be NocoDB, an open-source Airtable alternative that provides a proper relational database structure. Here’s the entire workflow if you’d like to see.

Let’s first dive into the architecture.

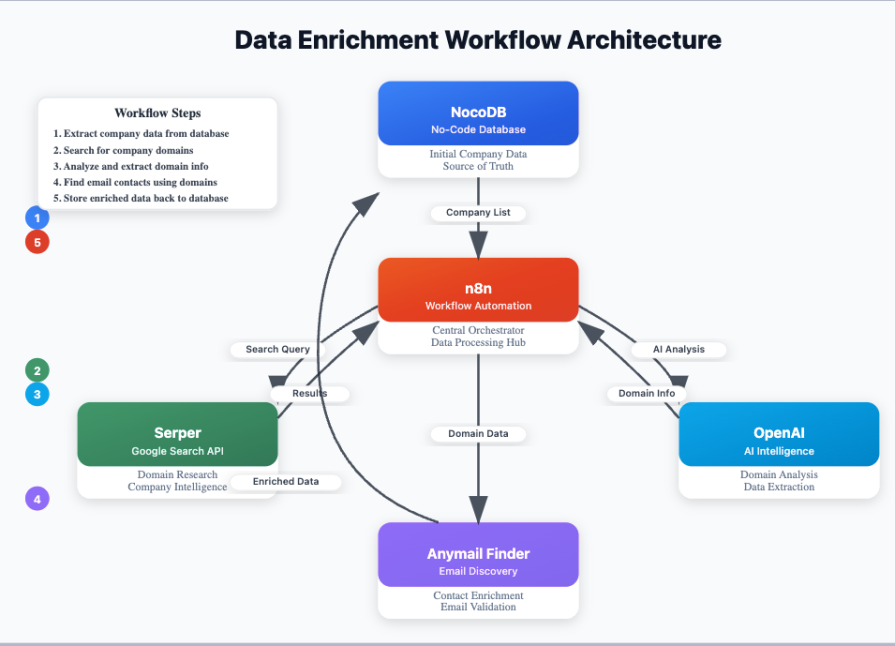

Architecting the solution: The tech stack

A scalable pipeline relies on specialized tools for each part of the process. Here’s our stack:

- Orchestration engine (n8n): The brain of our operation. n8n will execute the step-by-step logic, handle API calls, manage error handling, and orchestrate data flow between services.

- Database (NocoDB): Our data backend. We use NocoDB over a simple spreadsheet because it allows us to create relational links between tables (e.g., a company can have many contacts), providing a structured, scalable foundation.

- Domain search (Serper.dev API): A low-cost Google Search API to find potential website URLs associated with a company name.

- Intelligent filtering (OpenAI GPT API): An LLM to parse the search results and intelligently identify the correct official company domain, filtering out social media profiles, news articles, and other noise.

- Contact discovery (Anymail Finder API): An API to find specific decision-makers (e.g., engineering, marketing, CEO) and generic company emails based on a verified domain:

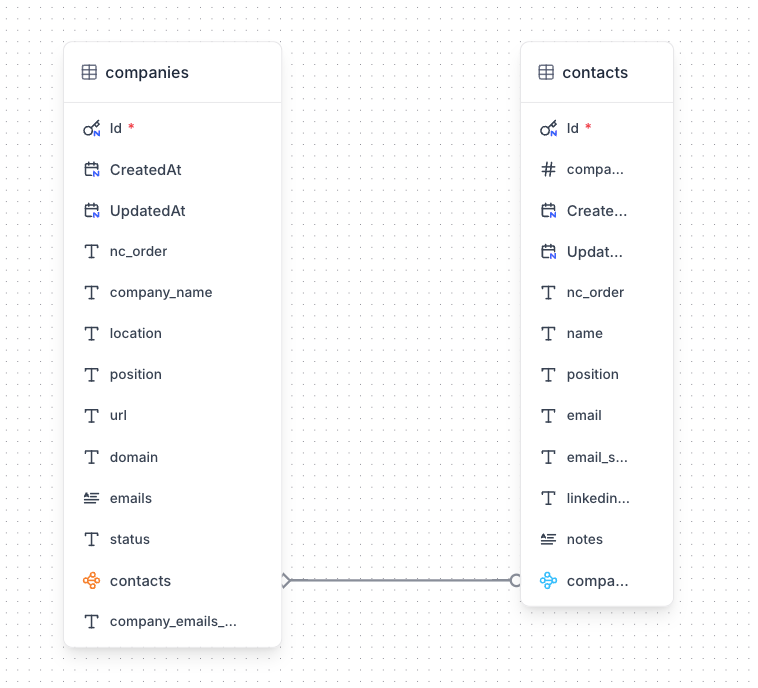

Data modeling in NocoDB

Before building the workflow, we need a solid data model. In NocoDB, we’ll set up two tables:

Companiestable: This table holds the initial list of company names and will be enriched with the data we find:company_name(Text)location(Text)url(Text): The final, validated URL.domain(Text): The extracted domainfallback_emails(Text): For generic company emailsstatus(Text): A state field to track progress (e.g.,Domain Found,EmailsFound (Risky),Completed): This is crucial for making the workflow resumablecontacts(Link tocontactstable): A “Has Many” relationship

Contactstable: This will store the individual decision-makers we find:name(Text)position(Text)email(Text)email_status(Text) – e.g.,validorrisky.linkedin_url(Text)company(Link toCompaniestable) – The “Belongs To” side of the relationship

This relational structure is far superior to a flat file or spreadsheet, as it correctly models the one-to-many relationship between a company and its contacts, preventing data duplication and inconsistencies:

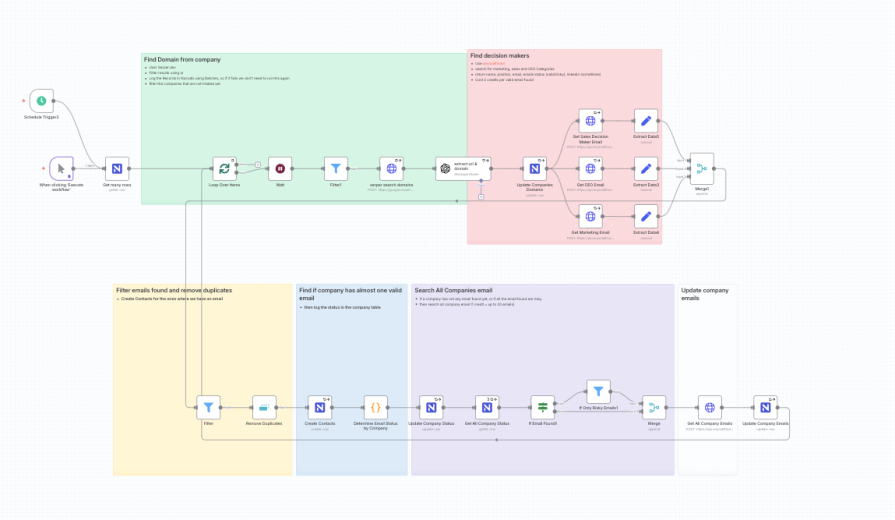

Implementing the n8n workflow, step-by-step

Our n8n workflow processes data in logical phases, designed for resilience and scalability.

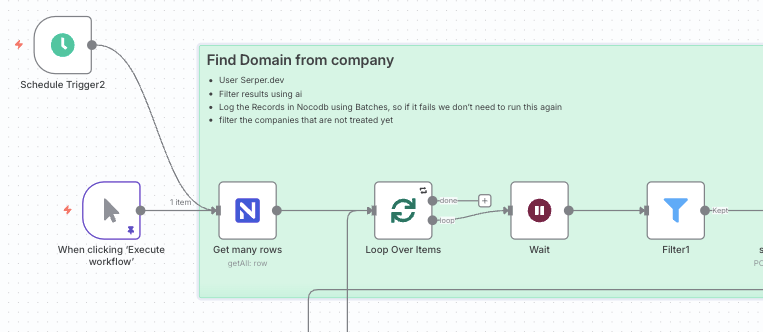

Phase 1: Data ingestion and batching

The workflow starts by fetching unprocessed companies from our NocoDB database.

- Trigger: Can be manual (

Startnode), scheduled, or triggered by a webhook for real-time processing. - Fetch companies (

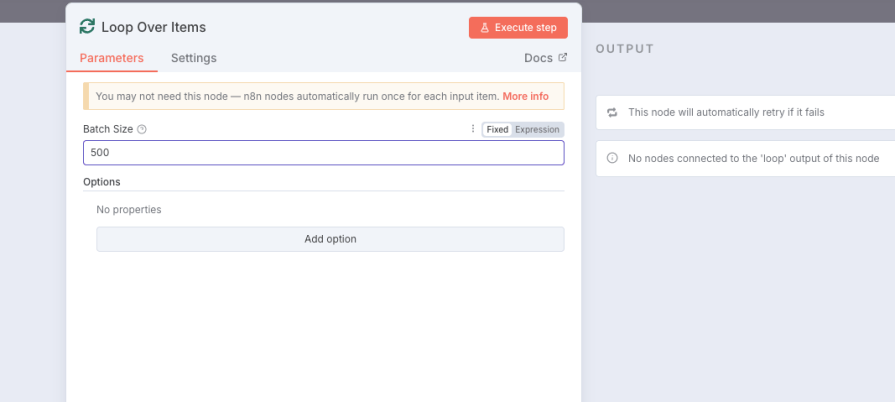

NocoDBnode): The first step is aGet Manyoperation on theCompaniestable. We add a filter to only retrieve records where thestatusfield is empty. This simple check makes the entire workflow idempotent and resumable. If it fails midway, we can restart it without reprocessing completed entries. - Loop (

Loop Over Itemsnode): To handle a large volume (e.g., 8,000+ companies) without overwhelming downstream APIs, we wrap the core logic in a loop that processes records in batches (e.g., 500 at a time) with aWaitnode between iterations to respect rate limits:

Phase 2: Domain discovery and AI validation

This is where we turn a simple company name into a verified website domain.

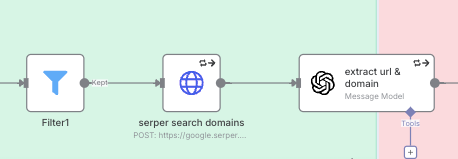

- Search for URLs (

HTTP Request node): For each company, we make aPOST requestto the Serper.dev API. The query combines thecompany_nameandlocationto get relevant Google Search results. This returns an array of potential URLs (organicresults). - Intelligent validation (OpenAI node): The search results are often noisy, containing links to social media, directories, or news. We feed this array of URLs, along with the original company name, to the GPT-4.1-mini model with a carefully engineered prompt. The prompt instructs the model to act as a data analyst, identify the single most likely official company website, and return a structured JSON object containing the chosen URL, the extracted domain, and an explanation. This is a perfect use case for an LLM; it performs a complex, non-deterministic filtering task that would be brittle and difficult to implement with regex or rule-based logic.

- Update company record (

NocoDBnode): We then perform anUpdateoperation in ourCompaniestable using the company’s ID. We populate theurlanddomainfields from the OpenAI output. We also update thestatusfield using a ternary expression:$domain ? 'Domain Found' : 'Domain Not Found':

Phase 3: Contact enrichment and data persistence

With a verified domain, we can now find key personnel:

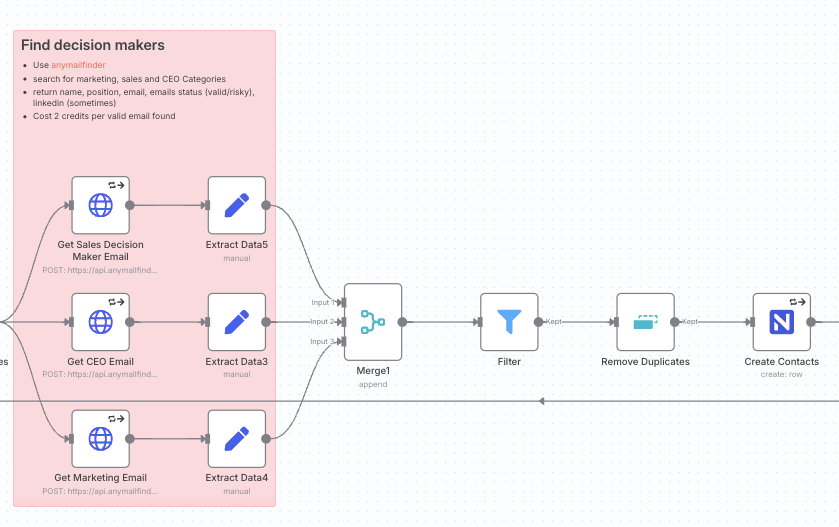

- Parallel contact search (

HTTP Requestnodes): We want to find contacts in several departments (e.g., Sales, Marketing, and CEO). Instead of running these searches sequentially, we branch the workflow to run three Anymail Finder API calls in parallel for maximum efficiency. Each node searches for a different decision-maker category. The API is queried using the domain if available; otherwise, it falls back to the company name. - Aggregate and deduplicate (

Merge andRemove Duplicatesnodes): The results from the three parallel branches are combined using aMergenode. It’s possible for one person to fit multiple categories (e.g., a CEO at a startup might also be the head of sales), so we use aRemove Duplicatesnode to ensure each contact is unique. - Create contact records (

NocoDBnode): We iterate through the cleaned list of contacts and execute aCreateoperation on ourContactstable in NocoDB. For each contact, we map the fields (name,position,email, etc.). Critically, we link this new contact back to its parent company by setting thecompany_idfield. This populates the relational link we defined in our data model:

Phase 4: Fallback logic and finalization

What if no decision-makers are found, or their emails are all “risky”? We need a fallback plan.

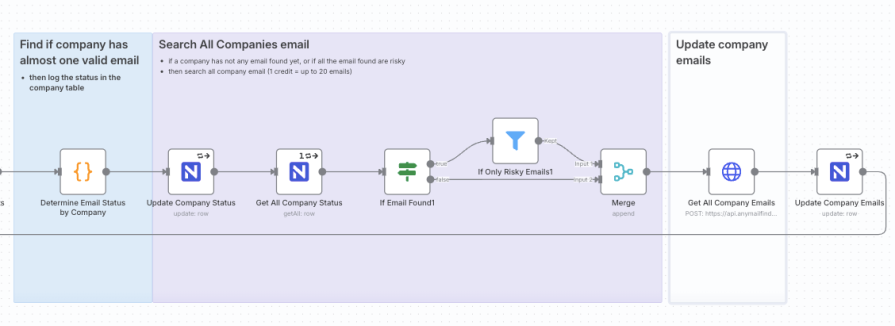

- Check for valid emails (

Codenode): After the contact creation step, a small JavaScript snippet in aCodenode analyzes the results for the current company. It checks if at least one contact with avalidemail status was found and outputs a simple boolean flag,has_valid_email. - Conditional logic (

Ifnode): AnIfnode routes the workflow based on the results. If no emails were found, or if all found emails wererisky, we proceed to the fallback branch. Otherwise, the job for this company is done. - Fetch all company emails (

HTTP Requestnode): For companies needing a fallback, we make one final call to a different Anymail Finder endpoint (/v2/company/all-emails/json). This fetches up to 20 generic and personal emails associated with the domain (e.g.,contact@, sales@). This ensures we always get some contact information. - Final Update (

NocoDBnode): We update theCompaniestable one last time, populating thefallback_emailsfield with a comma-separated list of the emails found in the previous step and setting the finalstatus:

Engineering for scale and resilience

This workflow isn’t just a script; it’s engineered for production use. Here are the key principles that make it robust:

- Idempotency and resumability: By filtering for unprocessed records at the start, the workflow can be stopped and restarted safely. It will pick up where it left off without duplicating work or API calls.

- Batch processing: The main loop processes the master list in manageable chunks, preventing memory issues and allowing for periodic data saving.

- Rate limiting: The explicit

Waitnode in the loop and the built-in batching options in theHTTP Requestnodes ensure we don’t violate API rate limits, which is critical for cost management and stability. - Error handling: Every API call node is configured with Retry on Fail (e.g., 3 retries with a 1-second delay). This handles transient network errors gracefully. For critical failures, n8n can be configured to trigger a separate error-handling workflow for alerting.

Key takeaways for engineering leaders

Building a system like this is more than just a data-cleaning exercise; it’s an investment in your team’s efficiency and a strategic asset for the business. Here are the key takeaways for engineering leaders considering a similar project:

- Turn engineering from a cost center into a value driver: This architecture transforms a manual, reactive task that consumes developer time into an automated internal service that directly powers go-to-market teams. By providing clean, enriched data, engineering becomes a proactive partner in driving revenue and operational efficiency, rather than a support desk for data-related fire drills.

- Orchestrate, don’t just script: The biggest lesson learned from similar projects is that simple scripts don’t scale. They lack error handling, state management, and observability. Using an orchestration tool like n8n provides these production-ready features out of the box. This is the crucial trade-off: a small upfront investment in a proper workflow tool saves countless hours of building and maintaining a fragile, custom solution. It’s the difference between a temporary fix and a durable system.

- Embrace idempotency and state management: The single most important principle that prevents this pipeline from breaking at scale is its ability to be safely stopped and restarted. By tracking the status of each record in NocoDB, the workflow is idempotent—it can run multiple times without creating duplicate data or re-running expensive API calls. This is essential for handling the inevitable failures that occur in any distributed system.

By adopting this service-oriented mindset, you can solve a persistent business problem while building a resilient, scalable asset that frees up your most valuable resource: your engineering team’s time.

Alexandra Spalato runs the AI Alchemists community, where developers and AI enthusiasts swap automation workflows, share business insights, and grow. Join the community here.

The post Goodbye, messy data: An engineer’s guide to scalable data enrichment appeared first on LogRocket Blog.

This post first appeared on Read More

Sign up for The Replay newsletter

Sign up for The Replay newsletter