Where should AI sit in your UI?

Mapping emerging AI UI patterns and how spatial UI choices shape AI experiences

The rise of large language models (LLMs) and AI agents has supercharged familiar UI patterns like chatbots, but a new wave of interface layouts is beginning to take shape. The real opportunity now lies in embedding AI more deeply into sophisticated, task-oriented interfaces. From right-panel assistants and infinite canvases to semantic spreadsheets, these spatial choices are more than design decisions: they fundamentally shape how users discover, trust and interact with AI.

This article explores seven emerging UI layouts and the role each one implies for the AI agent. It analyses how each layout influences user behaviour and expectations through three lenses: discoverability, interaction patterns and the agent's perceived capabilities.

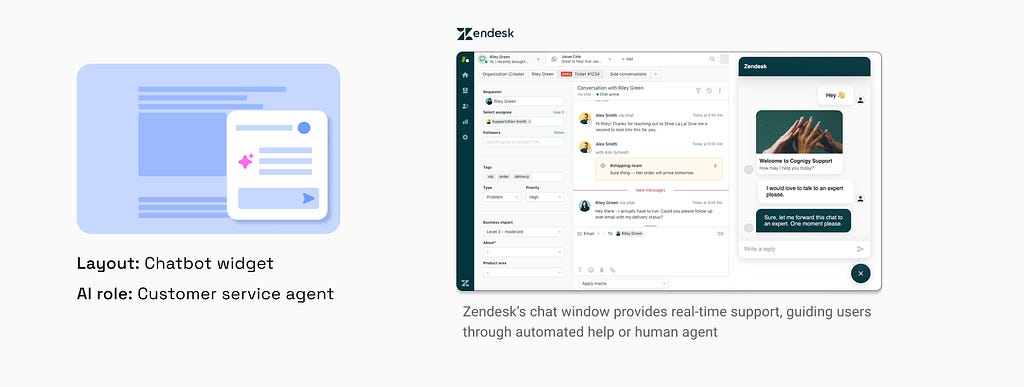

1. Customer service agent in a chatbot widget

The floating chatbot in the bottom-right corner has long been a familiar and standard entry point for customer support, exemplified by platforms like Zendesk and Intercom.

- Discoverability: The bottom-right placement makes it easily discoverable without being intrusive, serving as a subtle yet consistent visual element.

- Interaction pattern: The chatbot widget functions as a secondary, asynchronous interface. Users can open or close the window as needed, making it ideal for lightweight, non-intrusive support moments.

- Agent’s perceived role and capabilities: The AI is expected to be task-bounded and reactive. Chatbots were previously limited to rule-based flows. Modern AI chatbots now maintain contextual memory, personalize responses, and automate backend actions such as order lookups or password resets, all without human intervention.

- Limitations: Their limited screen real estate makes them poorly suited to proactive, multi-step reasoning or creative, collaborative workflows.

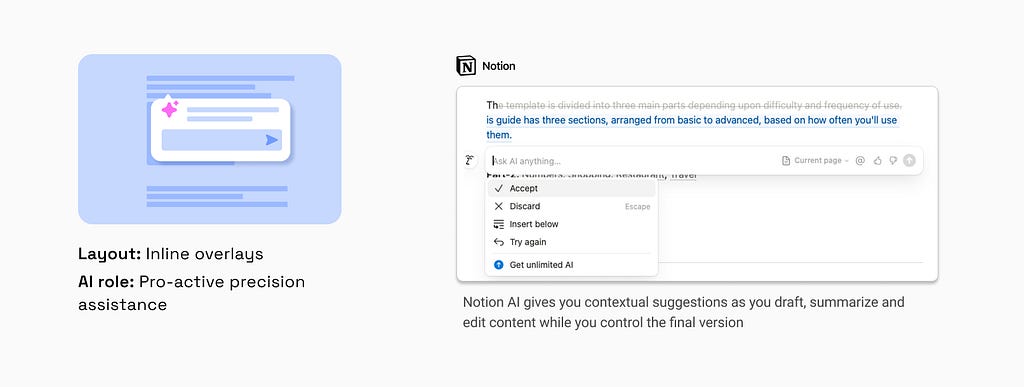

2. Inline overlay prompts for precision assistance

Inline overlay prompts, such as those in Notion and Grammarly AI, are interactive, context-sensitive suggestions or actions that appear directly within the text or content area, enhancing user productivity and editing efficiency.

- Discoverability: Inline prompts typically surface through subtle cues like underlines, hover icons, or context popovers and are often triggered by user actions (e.g., typing, hovering, selecting).

- Interaction pattern: Inline prompts are a dynamic augmentation tool. Users act on suggestions immediately by accepting, editing, undoing or regenerating content inline. The low-friction interface keeps the user in control while maintaining task continuity.

- Agent’s perceived role and capabilities: The agent is viewed as a “precision assistant”. It offers micro-level support, such as rephrasing sentences, summarising paragraphs or completing code snippets, based on the user’s real-time input and intent. For example, Grammarly’s inline prompts are highly context-aware. They analyse the text you are currently writing, including its tone, style, audience and purpose, to tailor suggestions and generate content.

- Limitations: Inline agents are not effective for open-ended creative exploration or tasks that require broader contextual reasoning or multi-step logic.

3. Creative collaborator on an infinite canvas

The concept of the “infinite canvas” was popularized by Scott McCloud in his 2000 book Reinventing Comics. In it, he proposed that digital comics could break free from the constraints of paper pages, allowing stories to unfold on an infinite canvas in any shape or direction.

Building on this idea, infinite canvas tools have evolved into digital applications that offer users a virtually limitless two-dimensional workspace. Tools like TLDraw, Figma, and Miro embrace the infinite canvas model to support visual thinking and exploratory workflows.

- Discoverability: AI capabilities are surfaced through contextual triggers, such as when the user hovers over or selects a canvas object like a sticky note, a text block or a shape.

- Interaction pattern: Users can call on the model through localized, asynchronous prompt inputs for specific elements (e.g., generate text, rename layers, suggest layouts) without interrupting the broader creative flow. Unlike in chat-based interactions, multiple parallel LLM calls can also coexist across the canvas. In Make.TLDraw, for example, users can add sketches, text prompts and labels directly onto the canvas and invoke the AI multiple times on the surrounding content.

- Agent’s perceived role and capabilities: Here, AI acts as a “creative collaborator”, responding to spatial context rather than linear flow. It supports tasks like idea generation, content refinement, layout suggestions and visual augmentation, treating the canvas itself as a prompt surface.

- Limitations: This approach is suboptimal for scenarios that need strict version control, such as building an app on Lovable. It is also suboptimal where document-wide awareness is needed, such as the kind Grammarly provides. Canvas-based agents typically operate on local clusters of objects, which makes it harder for them to stay contextually aware of the entire workspace.
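The following minimal Python sketch makes “responding to spatial context” and “local clusters of objects” concrete. It shows how a canvas tool might gather the objects near a selection and fold their text into a prompt. Everything here is assumed for illustration. The `CanvasObject` shape, the `local_cluster` and `build_prompt` helpers and the fixed 300-unit radius are all hypothetical. They are not taken from tldraw, Figma, Miro or any other tool’s API.

```python
import math
from dataclasses import dataclass


@dataclass
class CanvasObject:
    id: str
    kind: str    # hypothetical object types, e.g. "sticky", "text", "shape"
    x: float     # canvas coordinates of the object's centre
    y: float
    text: str = ""


def local_cluster(objects: list[CanvasObject], selected_id: str,
                  radius: float = 300.0) -> list[CanvasObject]:
    """Return the selected object plus every object whose centre lies within
    `radius` canvas units of it: a simple stand-in for 'local context'."""
    selected = next(o for o in objects if o.id == selected_id)
    return [
        o for o in objects
        if math.hypot(o.x - selected.x, o.y - selected.y) <= radius
    ]


def build_prompt(objects: list[CanvasObject], selected_id: str,
                 instruction: str, radius: float = 300.0) -> str:
    """Combine the user's instruction with the text of nearby canvas objects."""
    nearby = local_cluster(objects, selected_id, radius)
    context = "\n".join(f"- [{o.kind}] {o.text}" for o in nearby if o.text)
    return f"{instruction}\n\nNearby canvas content:\n{context}"


board = [
    CanvasObject("a", "sticky", 0, 0, "Onboarding is confusing"),
    CanvasObject("b", "sticky", 120, 40, "Users skip the tutorial"),
    CanvasObject("c", "text", 2000, 1500, "Q3 pricing notes"),
]
print(build_prompt(board, "a", "Suggest a theme for these notes"))
```

The fixed radius also illustrates the limitation above. Anything outside the cluster, here the distant pricing note, never reaches the model, so the agent has no view of the wider workspace.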

4. Center stage general-purpose assistant

This interface model puts the AI at the center of the user experience, typically as a full-width, vertically stacked pane anchored by a text input and a conversational thread. Common in tools like ChatGPT, Perplexity, and Midjourney (via Discord), it supports a prompt-first, freeform interaction style.

- Discoverability: The interface is intentionally minimal, with a focus on the input box. The user initiates interaction through natural language, often guided by placeholder text, past chats, or examples to spark exploration.

- Interaction pattern: Prompting is the core mode of interaction. Users drive the session through open-ended queries, refining outputs iteratively via follow-ups, clarifications, or prompt engineering. It supports flexible pacing and self-directed exploration.

- Agent’s perceived role and capabilities: The AI is framed as a “general-purpose assistant”, capable of answering questions, generating content, and performing tasks across diverse domains like writing, coding, design, or research. Its perceived strength lies in breadth, adaptability, and creative support.

- Limitations: Not ideal for structured, multi-step agentic workflows like app building, form completion, or long-context decision-making.

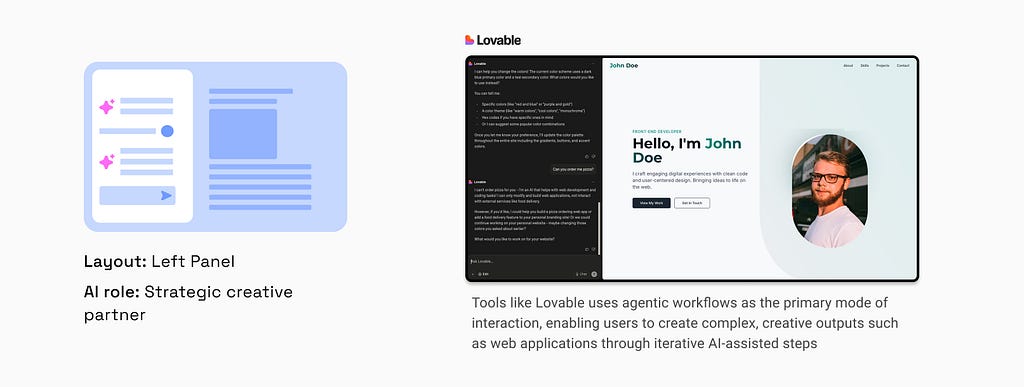

5. Strategic creative partner on left panel

In this layout, the AI assistant is placed in a persistent left-side panel, acting as a collaborative engine for generating content, code, or structured output.

Tools like ChatGPT Canvas and Lovable adopt this design to support complex, iterative workflows.

- Discoverability: To emphasise co-creation, the chat window is placed in the left panel, where it aligns with the dominant left-to-right, “F-shaped” scanning pattern. This panel typically houses the chat interface, prompt controls and session memory, making its capabilities continuously accessible.

- Interaction pattern: This layout supports multi-turn co-creation. Users prompt, refine, and test outputs in an ongoing feedback loop, with changes reflected in the adjacent right-side workspace. The separation between chat and output encourages a conversational design process.

- Agent’s perceived role and capabilities: The AI is viewed as a “strategic partner or co-creator”, helping users ideate, structure, and evolve complex outputs through back-and-forth interaction. These agents often demonstrate advanced reasoning and planning to offer meaningful, context-aware assistance.

- Limitations: Less effective for lightweight tasks, and hard to use on mobile interfaces because of screen space constraints. Vague prompts may also lead to unintended and invisible behaviours (e.g. bugs in AI code-generation tools like Lovable).

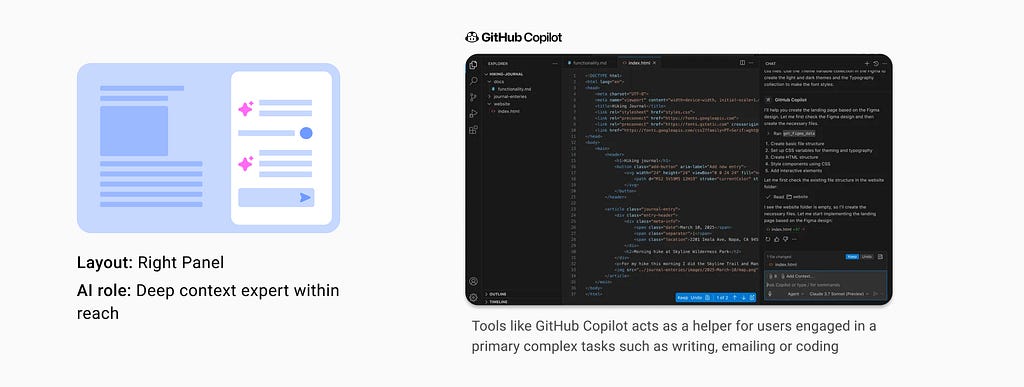

6. Deep context expert on right panel

This layout positions the AI in a collapsible right-hand panel, functioning as an on-demand assistant for users immersed in complex primary tasks. Examples include Microsoft Copilot in Office apps, GitHub Copilot, Gemini in Gmail and Cursor.

- Discoverability: Placing the assistant in the peripheral right-side panel lets users stay focused on the central canvas while keeping the assistant easily discoverable and non-intrusive.

- Interaction pattern: AI can be invoked by the user at specific moments to assist with tasks like summarizing, drafting, transforming, or debugging. Unlike proactive overlays, it respects user pacing and provides help only on request.

- Agent’s perceived role and capabilities: The agent is perceived as a “deep-context expert”. It is capable of delivering targeted, reasoning-driven support without dominating the experience. This support includes auto-generating presentations, managing communications and assisting with enterprise-level coding.

- Limitations: Less suited for AI-first experiences where prompting is central. Its subtle presence may go unnoticed by novice users.

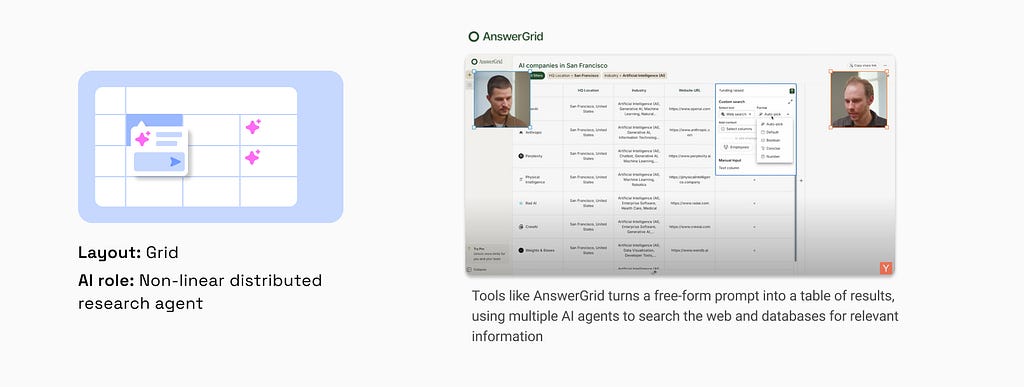

7. Distributed research agent in grid interfaces

A new paradigm is emerging where the traditional spreadsheet grid becomes an intelligent surface for querying, research, and semantic interaction. Tools like AnswerGrid and Elicit transform familiar tabular layouts into dynamic, agent-driven systems for data generation and synthesis. John Maeda has discussed a vision for “semantic spreadsheets”, where the spreadsheet is not just a static grid of formulas, but a dynamic network of cells that understand content and context. In this model, each cell behaves somewhat like an intelligent agent within the spreadsheet.

- Discoverability: These interfaces retain the classic spreadsheet layout of rows, columns and cells. AI input typically begins with a top-level prompt or query field, after which cells populate autonomously with structured results.

- Interaction pattern: Unlike linear Q&A or chat-based flows, users in AnswerGrid interact by seeding prompts or refining column definitions. Behind the scenes, multiple AI agents work asynchronously, scraping data, parsing sources and populating the grid dynamically. The spreadsheet itself becomes both the prompt canvas and the output viewer.

- Agent’s perceived role and capabilities: The AI can be described as a “distributed research agent” embedded within the table. In tools like AnswerGrid, the model fetches live data across the web, filling each cell contextually. Elicit builds “living documents” from scientific literature, automating synthesis across rows. Each cell behaves as a mini-agent contributing to an intelligent whole.

- Limitations: These interfaces are still emerging and appear best suited to research, analysis, or structured content compilation. They require users to have a clear idea of the structure of their desired output, and may overwhelm those unfamiliar with spreadsheets or data curation workflows.
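The “each cell as a mini-agent” idea can be sketched as one concurrent call per (row, column) pair, with the results reassembled into a table. The Python below is a hypothetical illustration, not a description of how AnswerGrid or Elicit are built. The names `fill_grid` and `fake_agent` and the `max_concurrency` parameter are invented for the example. `fake_agent` stands in for whatever LLM or web-search call a real tool would make.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical per-cell "agent": given a row entity and a column definition,
# return the cell's value. A real tool might wrap an LLM or web-search call;
# here it is injected so the sketch stays self-contained and runnable.
CellAgent = Callable[[str, str], Awaitable[str]]


async def fill_grid(
    rows: list[str],
    columns: list[str],
    agent: CellAgent,
    max_concurrency: int = 4,
) -> dict[str, dict[str, str]]:
    """Populate every (row, column) cell concurrently, one agent call per cell."""
    limit = asyncio.Semaphore(max_concurrency)  # cap simultaneous agent calls

    async def fill_cell(row: str, col: str) -> str:
        async with limit:
            return await agent(row, col)

    cells = [(r, c) for r in rows for c in columns]
    # gather() returns results in the same order as the cells list.
    values = await asyncio.gather(*(fill_cell(r, c) for r, c in cells))

    # Reassemble the flat results into a row -> column -> value table.
    grid: dict[str, dict[str, str]] = {r: {} for r in rows}
    for (r, c), v in zip(cells, values):
        grid[r][c] = v
    return grid


async def fake_agent(row: str, col: str) -> str:
    """Stand-in agent: sleeps briefly to mimic network latency."""
    await asyncio.sleep(0.01)
    return f"{col} for {row}"


if __name__ == "__main__":
    table = asyncio.run(
        fill_grid(["Acme", "Globex"], ["HQ city", "Founded"], fake_agent)
    )
    for row, cols in table.items():
        print(row, cols)
```

The semaphore caps how many cells are filled at once. That is a plausible concern when each cell triggers a paid or rate-limited model call. The results still land in an ordinary table, so the grid remains both the prompt surface and the output view.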

Conclusion

LLMs aren’t just tools to be queried; they’re a new computing medium, one that we’re only beginning to understand. Just as GUIs, the web and mobile interfaces reshaped design in past decades, LLMs demand a rethinking of how intelligence lives in our products. That means not just what it says, but where it sits, how it’s triggered and how it guides the user.

Spatial layouts such as chatbots, side panels, semantic grids and infinite canvases aren’t merely aesthetic choices. They define the mental model users form of the AI’s role, and the AI’s placement shapes usability, perceived capabilities and trust. As we enter this new era, designing how AI is surfaced will be just as critical as designing what it can do.

Where should AI sit in your UI? was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
