A PM’s guide to handling product crises like a pro

Someone once told me that product management is like being a firefighter who also happens to build houses. You spend time making something beautiful, then suddenly everything is on fire and you have to switch gears completely. Sometimes you’re even building new rooms while others are burning, running between the two with a hammer in one hand and a bucket of water in the other.

No matter how polished your roadmap is or how well you anticipate risks, a crisis will happen: a critical bug will take down your main feature, a security breach will expose user data, a viral complaint will spiral into a PR nightmare, etc.

When disaster strikes, every second counts, and your response determines whether you emerge stronger or watch your product’s (and your) reputation crumble.

The problem is that most PMs aren’t prepared for full-blown crisis scenarios until they’re already in the middle of one. By then, the damage is unfolding in real time. That’s why you need a clear, repeatable plan for managing high-stakes incidents before they escalate into disasters.

Let me walk you through what every product person needs to handle product crises effectively, minimize damage, and maintain user trust. The advice I’m about to share comes from years of figuring this out the hard way.

Common crisis types and how to handle them

Responding quickly means recognizing common crises as soon as they emerge, so you can start working on a solution right away. Some of the biggest examples include:

Major bugs or downtime

This is when a core feature stops working, preventing users from accessing your product or its key features.

In this case, the key priorities include triaging and rolling back the faulty update if possible, communicating the scope of impact, and providing a workaround if a fix isn’t immediate.

You can prevent at least some of these issues by improving test coverage and setting up better alerting to catch problems before they reach production. Catching and fixing a bug before it’s released is often orders of magnitude cheaper than fixing it after it hits production.

Security breaches or data leaks

This type of crisis happens when user data is exposed due to a security vulnerability.

When it happens, you and your team need to contain the breach immediately: shut down affected systems, bring in the security, legal, and compliance teams, and notify affected users and regulators within the required legal timeframes (under the GDPR, for example, the supervisory authority generally has to be notified within 72 hours of becoming aware of a breach).

It goes without saying that you also need to patch the vulnerability before it can be exploited again.

To lower the odds of this type of crisis, conduct regular security audits, enforce stricter access controls, and implement automated anomaly monitoring.

PR and social media crises

Finally, your day as a product manager can be ruined by a viral complaint that spreads misinformation about your product (or exposes a real issue in it).

In that case, you need to assess whether the criticism is valid, acknowledge concerns publicly even while internal discussions are ongoing, and correct false claims with facts without being confrontational. A public dispute with a user can pour gasoline on the fire, even if you’re (technically) right.

To catch a post before it goes viral, consider investing in a dedicated social listening team and pre-approved response templates for common scenarios.

Planning for the immediate disaster

The worst time to create a crisis plan is during the crisis itself. When something breaks, whether it’s a critical bug, security incident, or reputation hit, your team needs a predefined response process to follow.

Back in my university days, I was told that an engineer who smokes is always more efficient in a crisis than one who doesn’t. The non-smoker jumps straight into action and runs around like a headless chicken. The smoker gets time to think while lighting up, and so approaches the “fire” with a plan and a level head.

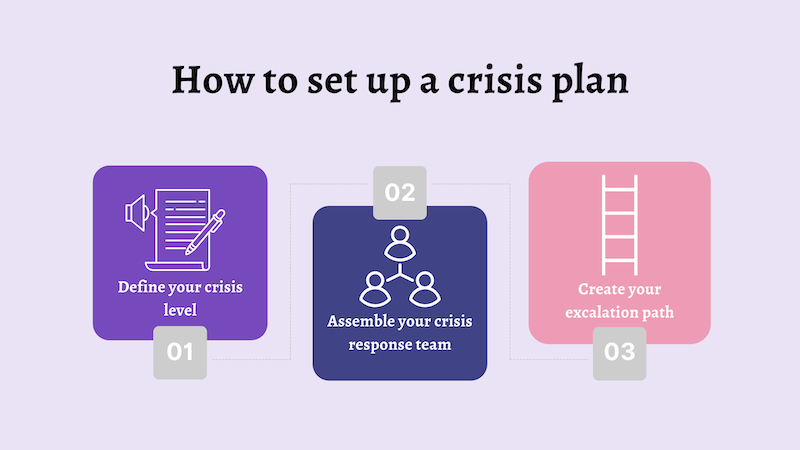

To set up a crisis plan, follow these three key steps:

1. Define your crisis levels

Not every issue requires a full-scale emergency response. You need clear criteria for what qualifies as a real crisis based on business impact, security risk, and user exposure.

I recommend three tiers:

- Critical (P0) — Data breach, major outage, or compliance risk. Immediate response required. In other words, either the product can’t be used or it’s losing money (including opportunity cost) here and now. Grab your “smoke” and get to work ASAP

- High (P1) — Severe bug affecting core functionality. Address within hours. No need to wake up your on-call team, but there’s no time to spare. In the P1 scenario, it’s good to at least communicate that the team is aware of the problem and will provide resolution updates as they appear

- Medium (P2) — Usability issue or performance lag that needs fixing, but not urgently. Just prioritize this in your backlog
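If you want these tiers to stay unambiguous under pressure, you can write them down as an explicit triage rule. Here’s a minimal Python sketch of one way to do it. The `Incident` fields, `classify`, and the `RESPONSE` table are hypothetical names for illustration, and the mapping simply paraphrases the three tiers above; adjust it to your own criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    P0 = "Critical"
    P1 = "High"
    P2 = "Medium"


@dataclass
class Incident:
    # Hypothetical signals a team might capture during triage.
    data_breach: bool = False
    compliance_risk: bool = False
    product_unusable: bool = False     # major outage: the product can't be used
    losing_money_now: bool = False     # revenue (or opportunity) lost here and now
    core_feature_broken: bool = False  # severe bug, but the product still runs


def classify(incident: Incident) -> Severity:
    """Map an incident to a tier using the criteria from the list above."""
    # P0: breach, compliance risk, major outage, or active revenue loss.
    if (incident.data_breach or incident.compliance_risk
            or incident.product_unusable or incident.losing_money_now):
        return Severity.P0
    # P1: a core feature is broken, but nothing above applies.
    if incident.core_feature_broken:
        return Severity.P1
    # P2: usability issues and performance lag go to the backlog.
    return Severity.P2


# Hypothetical response expectations per tier, paraphrasing the list above.
RESPONSE = {
    Severity.P0: "Immediate response; page on-call",
    Severity.P1: "Address within hours; acknowledge the issue to users",
    Severity.P2: "Prioritize in the backlog",
}

if __name__ == "__main__":
    search_broken = Incident(core_feature_broken=True)
    tier = classify(search_broken)
    print(tier.name, "->", RESPONSE[tier])  # P1 -> Address within hours; acknowledge the issue to users
```

Keeping the rule this explicit is the point: when someone insists an issue is critical, you can check it against agreed criteria instead of debating it on the call.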

I’ll come back to how to identify an actual crisis later in the article. For now, let’s assume we’re dealing with a P0.

2. Assemble your crisis response team

Depending on the time of day and vacation season, the right people may already be available and know what to do, or you may need to assign clear roles on the spot. Ideally, define these roles long before a crisis hits.

Your crisis response team should include:

- PM (you) — Drives decisions and aligns teams

- Engineering lead — Owns the investigation and resolution, and helps identify other people (from other teams) needed to clear a live P0 issue

- Communications — Keeps stakeholders updated on progress and the expected time to resolution

- Developers, QA, release managers — Probably the same people you work with day to day. Here, it’s less about specific people and more about whether you can ship a fix fast if needed

3. Create your escalation path

If an issue is bigger than your team can handle, when do you escalate to executives? Or to legal and regulators?

Set communication thresholds. For example, a P0 incident automatically triggers an executive briefing and legal review. No exceptions.

Nailing this down usually takes a few workshops with the team, where you map out what could go sideways and who can step in when push comes to shove.

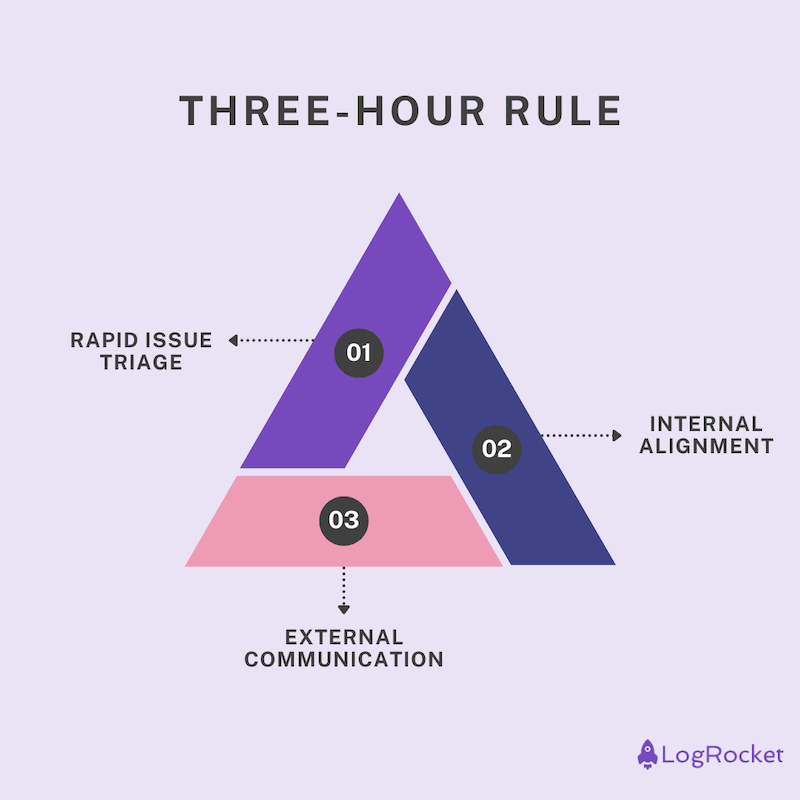

Follow the three-hour rule in crisis mode

When a crisis hits, the first few hours are critical. Panic and emotional reactions make things worse. A structured three-hour response window helps teams quickly contain issues without making rash, reputation-damaging decisions.

Here’s the breakdown of this method:

Hour 1: Rapid issue triage

During the first 60 minutes, you and, more importantly, your team should focus on gathering facts.

What happened? Is it a bug, a breach, or a public relations issue? How many users are affected? Is this isolated or widespread? What’s the worst-case scenario? Could this cause revenue loss, compliance issues, or data leaks?

Only with facts can you properly assess the issue’s priority and move on to a fix. Just because a client or a panicked stakeholder says it’s a top priority doesn’t mean it is. I’ve seen plenty of “product not working” tickets that turned out to be simple misconfigurations on the user’s side.

That’s why you shouldn’t make any public statements before verifying the facts. A crisis only becomes a crisis once you’ve confirmed it as one, not a moment earlier.

Hour 2: Internal alignment

Engineering starts analyzing the source of the issue. If needed, legal and compliance assess security and regulatory risks (though in practice, that assessment often happens long after the issue is fixed, if it’s needed at all). Customer support drafts holding responses for incoming complaints. You, or whoever owns comms, make sure stakeholders know what’s happening.

At this stage, avoid premature promises about fixes before engineering confirms feasibility. Don’t let executives pressure teams into rushed public responses. A late update is always better than an inaccurate one.

Hour 3: External communication

If the crisis is severe enough to last three hours or more, you’ll need to notify your users. This is for your own benefit: if users know you’re on top of the issue, they’re less likely to bombard customer support, which means less cleanup once the dust settles.

Be transparent, but don’t overshare. Users want acknowledgment and reassurance, not a technical deep dive. Own the issue rather than deflecting.

Just like in stakeholder comms, blaming third-party services or “unexpected bugs” makes your team look incompetent. Give a clear next step. Will there be a follow-up update? When should users expect more information?

Here’s an example response:

“Hello,

We’re aware of an issue affecting [feature/service]. Our team is actively investigating, and we’ll provide an update within the next [timeframe]. We appreciate your patience as we work to resolve this.”

As you can see, every crisis requires not only a structured approach, but also massive focus and human resources.

How to tell a real crisis from a fake one

While some of you may feel like you spend every day putting out fires, not every fire is worth grabbing the extinguisher for. One of the most overlooked skills in crisis management is simply this: knowing whether what you’re looking at is a crisis or just a loud inconvenience dressed up as one.

If you treat every Slack ping, support ticket, or stakeholder freakout as a five-alarm emergency, you’ll exhaust your team, lose credibility, and eventually stop recognizing the truly dangerous signals when they come. It’s the boy who cried (tech) wolf all over again.

On the other hand, if you downplay an actual crisis, the fallout can be far worse.

So, how do you tell the difference?

It starts with impact. A true crisis has meaningful consequences on one or more of the following dimensions:

- User experience — Are users unable to use a critical feature? Are sign-ups broken? Is there data loss?

- Revenue — Is this blocking sales or causing churn? Could it lead to refunds, penalties, or missed targets?

- Legal and compliance — Does this touch on personal data, regulatory reporting, or accessibility standards?

- Reputation — Is this incident visible externally and potentially damaging your brand?

If none of these boxes are checked, you’re probably not in a real crisis. It may still be important, but it can be addressed through normal processes, not panic-mode protocols.

Next, check the reliability of the source. Is this issue backed by telemetry, logs, and multiple user reports? Or is it based on a single vague screenshot from an angry client? Don’t ignore early warnings, but verify them before mobilizing your entire team.

I’ve seen engineers lose half a day over what turned out to be a browser extension bug on the customer’s side. Noise like this is common; your job is to separate the signal from it.
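The impact and source checks above boil down to a two-part gate: does the issue touch at least one impact dimension, and is it backed by more than a single unverified report? Below is a minimal Python sketch of that gate. The `Report` fields, `is_real_crisis`, and the default threshold of two independent user reports are assumptions for illustration; agree on your own values with leadership.

```python
from dataclasses import dataclass, field

# The four impact dimensions listed above.
IMPACT_DIMENSIONS = {"user_experience", "revenue", "legal_compliance", "reputation"}


@dataclass
class Report:
    # Hypothetical fields; adapt them to whatever your incident tooling records.
    impacts: set[str] = field(default_factory=set)
    confirmed_by_telemetry: bool = False  # backed by metrics, logs, or alerts
    independent_user_reports: int = 0


def is_real_crisis(report: Report, min_user_reports: int = 2) -> bool:
    """Return True only if the issue has real impact AND reliable evidence."""
    unknown = report.impacts - IMPACT_DIMENSIONS
    if unknown:
        raise ValueError(f"Unknown impact dimension(s): {sorted(unknown)}")
    has_impact = bool(report.impacts)
    has_evidence = (report.confirmed_by_telemetry
                    or report.independent_user_reports >= min_user_reports)
    return has_impact and has_evidence


if __name__ == "__main__":
    # One angry client, one screenshot, no telemetry: verify before mobilizing.
    print(is_real_crisis(Report(impacts={"revenue"}, independent_user_reports=1)))  # False
    # Sign-ups failing in the metrics: this one is real.
    print(is_real_crisis(Report(impacts={"user_experience"}, confirmed_by_telemetry=True)))  # True
```

A `False` here doesn’t mean “ignore it”; it means keep verifying or route the issue through normal processes rather than panic-mode protocols.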

Then comes pattern recognition. The more experienced you are, the faster you can triage. Some symptoms look bad but are familiar and reversible. Others may seem minor but hint at deep architectural flaws.

Build a playbook from past incidents. What instincts have you developed over time? Those instincts are essentially a mental database of false alarms and near-misses turned into lessons, and a playbook puts that database on paper for the whole team.

Finally, emotions aren’t evidence. Just because a stakeholder yells “CRITICAL” doesn’t mean it is. Set criteria. Make them visible.

Align with leadership on what qualifies as P0, P1, and P2. This protects you from being swayed by whoever’s loudest on the call and ensures your team stays focused on what really matters.

Final thoughts

Great product management isn’t just about building features. It’s about handling chaos when things go wrong. The best PMs stay calm under pressure, communicate effectively, and lead their teams through uncertainty. You don’t have to be perfect. You just need to be prepared.

So the next time a crisis hits, don’t panic. Follow the plan. Own the problem. Keep your users informed. And most importantly, learn from it.

By implementing crisis management strategies, you can transform potential disasters into opportunities to build stronger user trust and demonstrate your team’s resilience. Plus, once your team goes through a crisis in a civilized and structured way, future issues won’t be as scary.

Whether you’re dealing with technical failures or communication challenges, having a structured approach ensures you can respond effectively when it matters most. Speaking of such approaches, make sure to check this space regularly for new (hopefully) insightful product management articles. See you next time!

Featured image source: IconScout

The post A PM’s guide to handling product crises like a pro appeared first on LogRocket Blog.
