Using Grok 4 in frontend development: Here’s what I’ve learned

Over a month ago, Grok 4 launched, smashing through nearly every benchmark and earning a bold reputation for being “more intelligent than any math professor.” It’s a huge claim, but has it actually lived up to the hype?

Goals

The aim here is simple: cut through the noise and give frontend developers a straight answer. Does this so-called “math-professor-level” AI genuinely make you a better, faster developer, or is it just another overpromised tool?

Through hands-on testing with real React components, CSS challenges, and debugging scenarios, this article breaks down where Grok 4 truly shines in a frontend workflow, and where you’re better off sticking with your current stack.

What is Grok 4?

If you haven’t heard of it, Grok 4 is xAI’s latest flagship AI model, launched on July 10, 2025. Elon Musk called it “the most intelligent model in the world,” and, to be fair, until GPT-5 was released, it really did lead most benchmarks.

It was trained on Colossus, xAI’s 200,000-GPU cluster, with reinforcement learning applied at pretraining scale to refine its reasoning abilities.

Grok 4’s key features

Below are the four standout features that set Grok 4 apart:

Native tool integration & real-time search

- Includes native tool use and real-time search integration

- Trained to use powerful tools to find information from deep within X, with advanced keyword and semantic search tools

- Can view media to improve answer quality

Multi-agent architecture (Grok 4 Heavy)

- Grok 4 Heavy is a “multi-agent version” that spawns multiple agents to work on problems simultaneously, comparing their work “like a study group” to find the best answer

- Available through the $300/month SuperGrok Heavy subscription
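xAI hasn’t published exactly how Grok 4 Heavy’s agents compare notes, so the following is only a rough illustration of the “study group” idea. It uses a plain majority-vote (self-consistency) technique, not xAI’s actual method, and `Ask`, `studyGroup`, and `majorityVote` are hypothetical names, with `Ask` standing in for any call to a model:

```typescript
// Hypothetical "study group" sketch: several agents answer the same prompt in
// parallel, then the most common answer wins. This is a generic majority-vote
// (self-consistency) technique, NOT xAI's actual Grok 4 Heavy implementation.
type Ask = (prompt: string) => Promise<string>;

function majorityVote(answers: string[]): string {
  if (answers.length === 0) throw new Error("No answers to vote on");
  const counts = new Map<string, number>();
  let best = "";
  let bestCount = 0;
  for (const answer of answers) {
    const key = answer.trim(); // ignore stray whitespace when comparing
    const count = (counts.get(key) ?? 0) + 1;
    counts.set(key, count);
    // Strictly greater: on a tie, the answer that reached the count first wins.
    if (count > bestCount) {
      best = key;
      bestCount = count;
    }
  }
  return best;
}

async function studyGroup(ask: Ask, prompt: string, agents = 4): Promise<string> {
  // Fan out: every "agent" works on the same prompt concurrently.
  const answers = await Promise.all(Array.from({ length: agents }, () => ask(prompt)));
  // Compare: keep the answer most agents agree on.
  return majorityVote(answers);
}
```

Exact-match voting only works for short, directly comparable answers such as a number; long free-form answers would need a smarter comparison step.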

Multimodal capabilities

- Text, image analysis, and voice support

- Uses Aurora, a text-to-image model developed by xAI, to generate images from natural language descriptions

Pricing & access

One of Grok 4’s downsides is its pricing. A subscription that costs roughly 50% more than most competing AI plans is hard for many developers to justify, although its API pricing is reasonably fair. Here’s the price range:

- Standard Grok 4 – $30/month

- SuperGrok Heavy – $300/month (access to Grok 4 Heavy)

- API access – $3/$15 per 1M input/output tokens

- Available via X, Grok apps (iOS/Android), and API
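Because the API bills input and output tokens separately, a workload’s list-price cost is (input tokens ÷ 1M) × $3 + (output tokens ÷ 1M) × $15. A minimal sketch of that arithmetic, assuming the rates above and ignoring any discounts or provider-specific pricing; `estimateCostUsd` is a hypothetical helper name:

```typescript
// Sketch: list-price cost of Grok 4 API usage, assuming the $3 / $15 per
// 1M input/output token rates quoted above (no discounts or provider markup).
const INPUT_USD_PER_MILLION = 3;
const OUTPUT_USD_PER_MILLION = 15;

function estimateCostUsd(inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1_000_000) * INPUT_USD_PER_MILLION +
    (outputTokens / 1_000_000) * OUTPUT_USD_PER_MILLION
  );
}

// 200K input + 20K output tokens: 0.2 × $3 + 0.02 × $15 = $0.60 + $0.30
console.log(`$${estimateCostUsd(200_000, 20_000).toFixed(2)}`); // $0.90
```

Output tokens cost five times as much as input tokens, but agentic CLI sessions tend to be input-heavy, because each request resends the growing conversation and file context.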

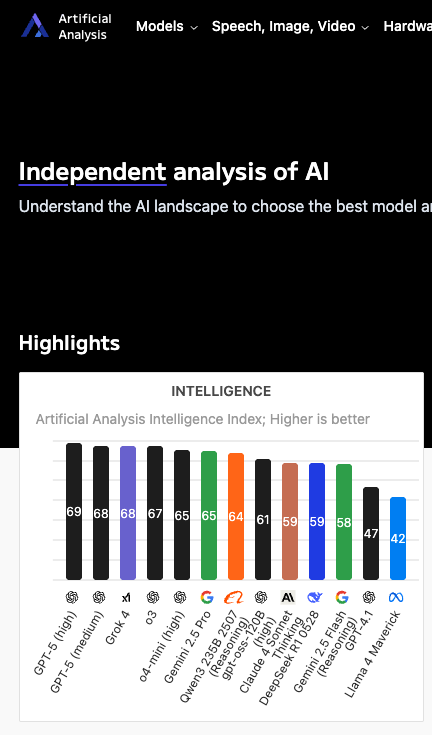

General benchmark results

Grok models haven’t previously been celebrated for their coding abilities, so let’s see what recent benchmarks say:

Overall intelligence rankings

| Benchmark | Grok 4 Score | Previous Leader | Previous Score | Source |
|---|---|---|---|---|
| Artificial Analysis Intelligence Index | 73 | OpenAI o3, Gemini 2.5 Pro | 70 | Artificial Analysis |
| LMArena overall | #3 | – | – | LMArena.ai |

Mathematics & reasoning leadership

| Benchmark | Grok 4 Score | Achievement | Previous Record | Source |
|---|---|---|---|---|
| Artificial Analysis Math Index | Leader | Combines AIME24 & MATH-500 | – | Artificial Analysis |
| AIME 2024 | 94% | Joint highest score | – | Artificial Analysis |
| LMArena math category | #1 | First place | – | LMArena.ai |
| Humanity’s Last Exam | 24% | All-time high (text-only) | Gemini 2.5 Pro – 21% | Artificial Analysis |
| GPQA Diamond | 88% | All-time high | Gemini 2.5 Pro – 84% | Artificial Analysis |

Abstract reasoning dominance

| Benchmark | Grok 4 Score | Achievement | Previous Best | Source |
|---|---|---|---|---|
| ARC-AGI-2 | 16.2% | Nearly double the next best | Claude Opus 4 – ~8% | ARC Prize Foundation |
| ARC-AGI-1 | Top performer | Leading publicly available model | – | ARC Prize Foundation |

Coding performance

| Benchmark | Grok 4 Performance | Ranking | Source |
|---|---|---|---|
| Artificial Analysis Coding Index | Leader | #1 (LiveCodeBench & SciCode) | Artificial Analysis |
| LMArena coding category | Strong | #2 | LMArena.ai |

Advanced capabilities

| Benchmark | Grok 4 Achievement | Significance | Source |
|---|---|---|---|
| Vending-Bench | Top performance | Tool use & agent behavior | Multiple benchmarks |
| MMLU-Pro | 87% | Joint highest score | Artificial Analysis |
| Grok 4 Heavy on HLE | 44.4% | With tools (vs. 25.4% without) | xAI internal |

Grok 4 clearly dominates academic benchmarks and mathematical reasoning, but the gap between test results and real-world usability raises a question: does this “math professor-level intelligence” hold up in everyday frontend work? Let’s put it to the test and see how well it actually performs.

Getting started with Grok 4 in the frontend

You can use Grok 4 through the chat interface, or integrate its API into a CLI. For the CLI route, get an API key from OpenRouter.

How to integrate Grok in your frontend workflow

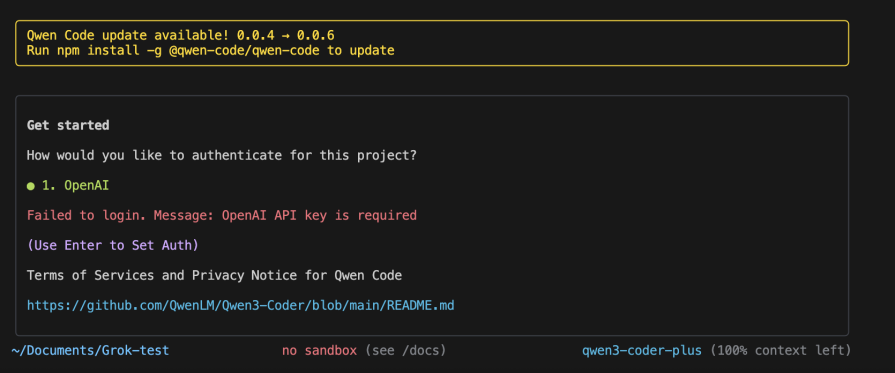

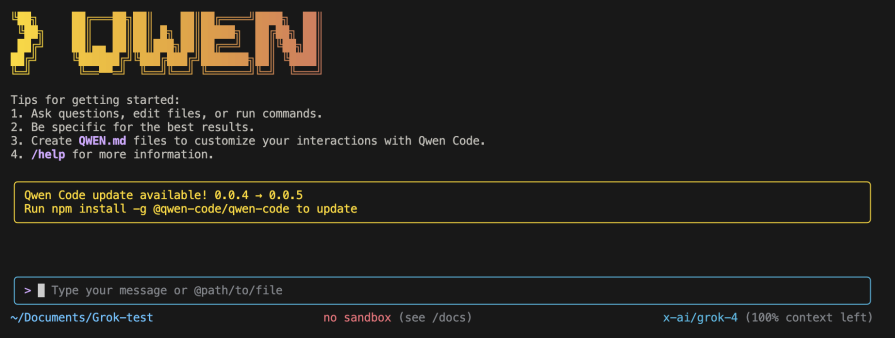

We’ll need a good open-source CLI that accepts third-party API keys. Gemini CLI would be our first pick, except that it only works with Google’s Gemini models (Gemini 2.5 Pro by default), so there’s no way to switch it to Grok. However, Qwen Code, a fork of Gemini CLI, can connect to any OpenAI-compatible endpoint, including OpenRouter’s, which serves Grok 4.

To install Qwen Code, run this in your terminal:

`npm install -g @qwen-code/qwen-code`

Then navigate to a project directory and run:

`qwen`

This initializes the CLI in your current project. Next, we’ll configure it to use OpenRouter’s API endpoint so we can access Grok 4 for testing. After running `qwen`, you should see this:

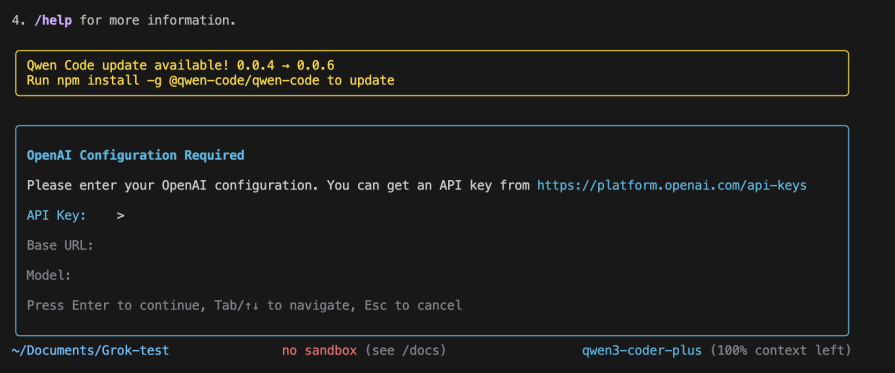

After selecting OpenAI as the authentication option, fill in the following values:

- API key: your own OpenRouter API key (it starts with `sk-or-v1-`)

- Base URL: `https://openrouter.ai/api/v1`

- Model: `x-ai/grok-4`

Once you’ve filled in those values, the bottom-left corner of your terminal should show `x-ai/grok-4` in place of the default `qwen3-coder-plus` model. This confirms that your CLI is now connected to Grok 4 through OpenRouter.

Verification

- The terminal status bar should display the model as `x-ai/grok-4`

- The connection indicator should show that the OpenRouter endpoint is active

- You’re now ready to test Grok 4’s frontend development capabilities
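Under the hood, the CLI is essentially sending these three values to an OpenAI-compatible Chat Completions endpoint. If you want to try Grok 4 outside the CLI, here’s a minimal sketch of the same call, assuming Node 18+ (for the global `fetch`) and OpenRouter’s `/chat/completions` route; `buildGrokRequest` and `askGrok` are hypothetical helper names, and the key is passed in rather than hard-coded:

```typescript
// Minimal sketch of a direct call to Grok 4 through OpenRouter's
// OpenAI-compatible Chat Completions endpoint (assumes Node 18+ global fetch).
const BASE_URL = "https://openrouter.ai/api/v1";
const MODEL = "x-ai/grok-4";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical helper: builds the URL and request options without sending anything.
function buildGrokRequest(apiKey: string, messages: ChatMessage[]) {
  return {
    url: `${BASE_URL}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ model: MODEL, messages }),
    },
  };
}

// Hypothetical helper: sends the request and returns the first reply's text.
async function askGrok(apiKey: string, prompt: string): Promise<string> {
  const { url, init } = buildGrokRequest(apiKey, [{ role: "user", content: prompt }]);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`OpenRouter returned HTTP ${res.status}`);
  const data = (await res.json()) as { choices: { message: { content: string } }[] };
  return data.choices[0].message.content;
}
```

For example, `askGrok(apiKey, "Explain Svelte 5 runes in one paragraph")` resolves to the model’s reply text. Reading the key from an environment variable (for instance, a hypothetical `OPENROUTER_API_KEY`) keeps it out of source control.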

My favourite frontend test will always be a Svelte 5 application. I’ve used it on Claude Sonnet 4, Qwen3-Coder, and Kimi K2, and only Claude and Kimi got it right on the first try. Let’s see how Grok 4 performs:

“Create a complete todo application using Svelte 5 and Firebase, with custom SVG icons and smooth animations throughout.”

We’ll give Grok the following environment variables:

```env
VITE_FIREBASE_API_KEY=AIzaSy***************************
VITE_FIREBASE_AUTH_DOMAIN=svelte-todo-*****.firebaseapp.com
VITE_FIREBASE_PROJECT_ID=svelte-todo-*****
VITE_FIREBASE_STORAGE_BUCKET=svelte-todo-*****.firebasestorage.app
VITE_FIREBASE_MESSAGING_SENDER_ID=99734*****
VITE_FIREBASE_APP_ID=1:99734*****:web:0e2fd85cb9ba95cab92409
VITE_FIREBASE_MEASUREMENT_ID=G-WKM3FE****
```
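Vite only exposes variables prefixed with `VITE_` to client code, through `import.meta.env`. Here’s a small sketch of how an app might turn the variables above into Firebase’s web config object and fail fast when one is missing; `firebaseConfigFromEnv` is a hypothetical helper, and treating every field except `measurementId` as required is an assumption of this sketch:

```typescript
// Sketch: turn Vite's VITE_FIREBASE_* variables into Firebase's web config object.
// firebaseConfigFromEnv is a hypothetical helper; treating every field except
// measurementId as required is an assumption of this sketch.
type Env = Record<string, unknown>;

interface FirebaseWebConfig {
  apiKey: string;
  authDomain: string;
  projectId: string;
  storageBucket: string;
  messagingSenderId: string;
  appId: string;
  measurementId?: string; // only used by Google Analytics for Firebase
}

function firebaseConfigFromEnv(env: Env): FirebaseWebConfig {
  // Fail fast with a readable message instead of a vague Firebase error later.
  const required = (name: string): string => {
    const value = env[name];
    if (typeof value !== "string" || value === "") {
      throw new Error(`Missing environment variable ${name}`);
    }
    return value;
  };
  const measurementId = env["VITE_FIREBASE_MEASUREMENT_ID"];
  return {
    apiKey: required("VITE_FIREBASE_API_KEY"),
    authDomain: required("VITE_FIREBASE_AUTH_DOMAIN"),
    projectId: required("VITE_FIREBASE_PROJECT_ID"),
    storageBucket: required("VITE_FIREBASE_STORAGE_BUCKET"),
    messagingSenderId: required("VITE_FIREBASE_MESSAGING_SENDER_ID"),
    appId: required("VITE_FIREBASE_APP_ID"),
    measurementId: typeof measurementId === "string" ? measurementId : undefined,
  };
}
```

In the Svelte app, you would then pass the result to Firebase’s `initializeApp` from `firebase/app`, for example `initializeApp(firebaseConfigFromEnv(import.meta.env))`.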

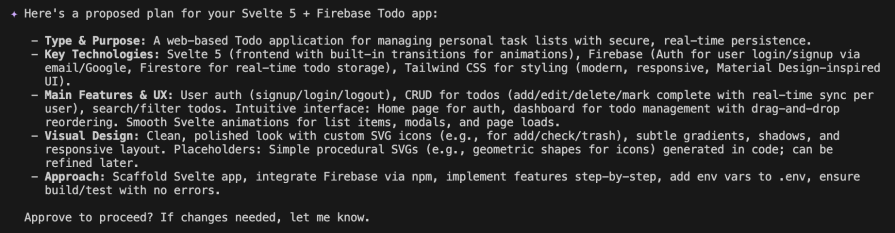

The first thing Grok did was suggest a solid plan:

Then I ran out of credits. Grok 4 had used more than a dollar and still hadn’t finished the task, while Kimi K2 completed it for far less:

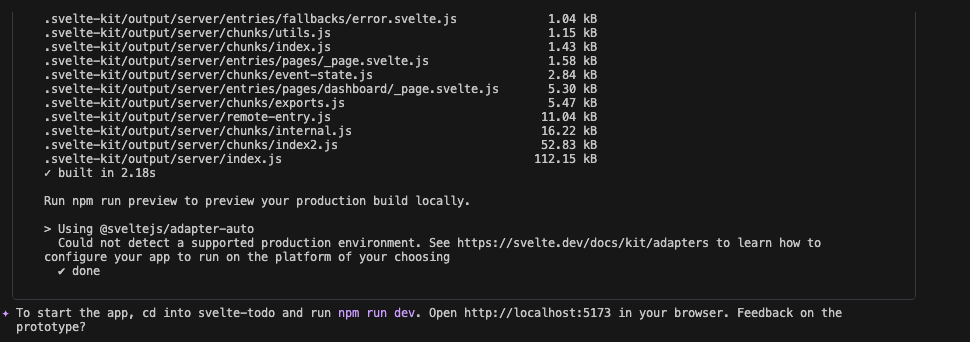

I quickly topped up my credits, hoping it would get the job done this time. I think we’re ready:

Analyzing the results

On the first try

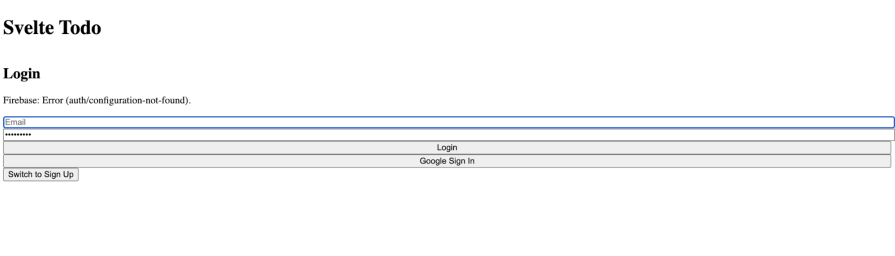

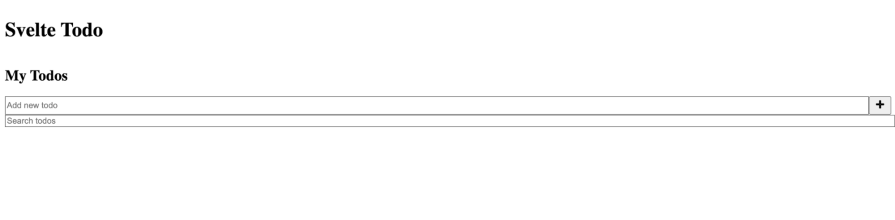

When I opened the app on localhost, I saw an authentication page:

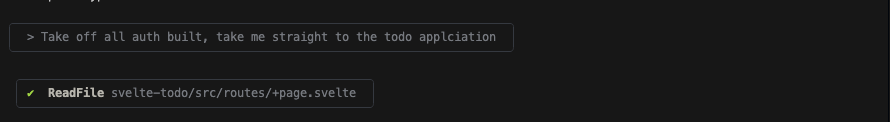

I didn’t ask it to handle authentication, and I hadn’t provided the variables it would need, so I asked it to skip all authentication steps in the build:

On the second try

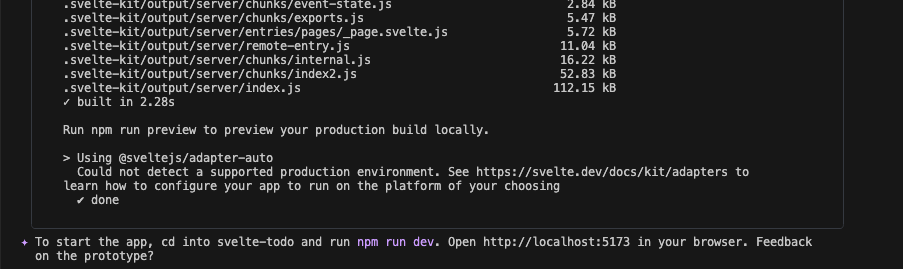

It said it fixed it:

Let’s run `npm run dev` again:

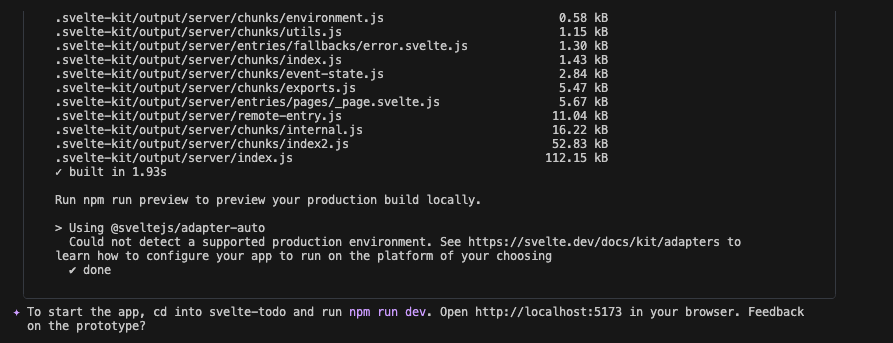

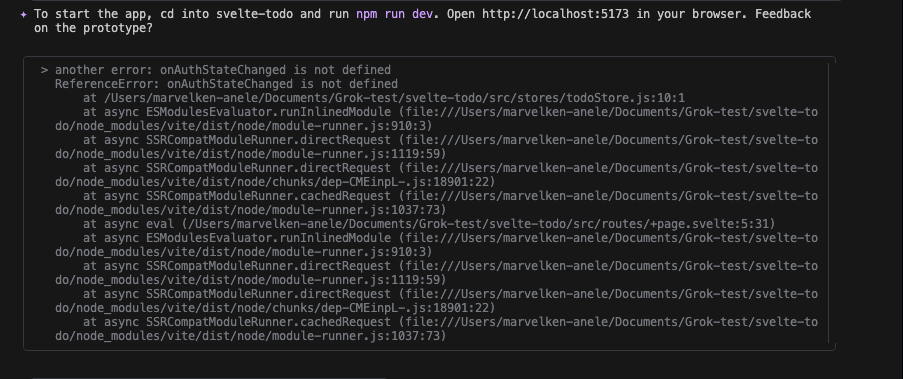

An error. We can just copy this error and tell it to fix it:

On the third try

We showed Grok 4 the error:

and it claimed to have solved it:

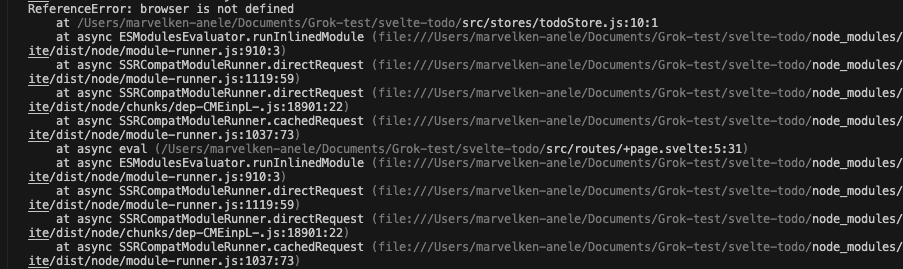

Let’s check it out:

Yet another error.

On the fourth try

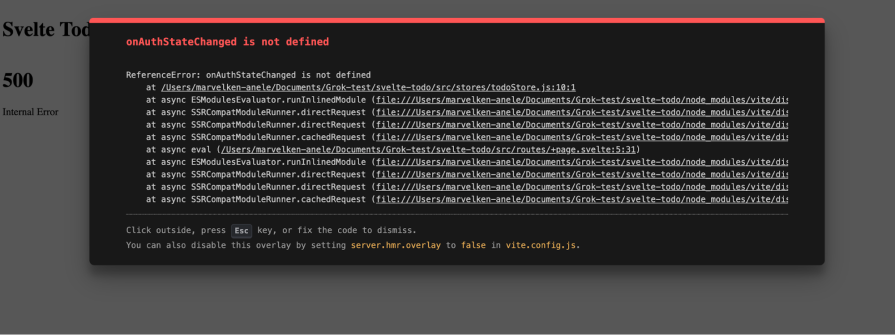

We passed the error back as usual, and it got fixed, but this time there was a catch:

It skipped the styles and animations we asked for. Because my credits were running low, I tested it anyway and discovered that the application wasn’t even functional. In practice, you have to iterate many times to get what you actually asked for.
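The four tries above boil down to a manual loop: run the dev server, paste the error back to Grok, and repeat. Here’s a hedged sketch of that loop, with hypothetical `runBuild` and `askForFix` callbacks standing in for running `npm run dev` and prompting the model. The important design choice is the attempt cap, since every round trip spends tokens:

```typescript
// Hypothetical sketch of the manual "paste the error back" loop.
// runBuild returns an error message, or null when the build succeeds;
// askForFix stands in for sending that error to the model and applying its patch.
type RunBuild = () => Promise<string | null>;
type AskForFix = (error: string) => Promise<void>;

async function fixUntilClean(
  runBuild: RunBuild,
  askForFix: AskForFix,
  maxAttempts = 4,
): Promise<{ ok: boolean; attempts: number }> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const error = await runBuild();
    if (error === null) return { ok: true, attempts: attempt };
    // Only pay for another fix if there will be another build to check it.
    if (attempt < maxAttempts) await askForFix(error);
  }
  return { ok: false, attempts: maxAttempts };
}
```

Note that a clean build is only a weak signal: as this test showed, code that compiles can still skip requested features or fail at runtime, so a real loop would also need functional checks.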

Grok 4 is expensive, and given its imperfect frontend performance, that high cost is a significant downside.

Final take

- 152 requests to get basic functionality working

- $3.31 spent on what should be a simple frontend task

- 1.45M input tokens + 54K output tokens – massive token consumption
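Figures like these come from the `usage` object that OpenAI-compatible chat completion responses (including OpenRouter’s) attach to each reply. A minimal sketch of a running tally, where `UsageTally` is a hypothetical name:

```typescript
// Sketch: keep a running tally of requests and tokens from the `usage`
// object (prompt_tokens / completion_tokens) that OpenAI-compatible
// chat completion responses include.
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
}

class UsageTally {
  requests = 0;
  inputTokens = 0;
  outputTokens = 0;

  record(usage: Usage): void {
    this.requests += 1;
    this.inputTokens += usage.prompt_tokens;
    this.outputTokens += usage.completion_tokens;
  }

  // Average context size per request: high values mean each call resends a lot of history.
  averageInputPerRequest(): number {
    return this.requests === 0 ? 0 : this.inputTokens / this.requests;
  }
}
```

Plugging in this run’s totals, 1.45M input tokens over 152 requests is roughly 9,500 input tokens per request, against about 355 output tokens per request, which suggests most of the traffic was resent context rather than newly generated code.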

Cost vs. Output analysis

Token efficiency comparison

Based on my tests across multiple AI models for the same Svelte 5 + Firebase todo app:

| Model | Requests | Input Tokens | Output Tokens | Total Cost | Outcome |
|---|---|---|---|---|---|
| Grok 4 | 152 | 1.45M | 54K | $3.31 | Partial |
| Kimi K2 | 69 | 705,639 | 15,891 | ~$0.471 | Complete |
| Qwen3-Coder | 47 | 536,344 | 12,015 | ~$0.228 | Complete |
| Claude Sonnet 4 | 60 | 6,700 | 7,800 | $0.30 | Complete |

Grok 4’s pricing assumes its intelligence justifies the premium, but for this frontend task you end up paying roughly 10x more for noticeably worse results. The same computational power that dominates AIME math problems becomes an overpriced luxury when applied to frontend builds.
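The cost multiples can be checked directly against the totals in the table above. This sketch simply divides Grok 4’s total by each other model’s; `costMultiples` is a hypothetical helper, and the Kimi K2 and Qwen3-Coder totals are the approximate values from the table:

```typescript
// Sketch: how many times as much Grok 4's run cost compared with each other model,
// using the "Total Cost" column (the Kimi K2 and Qwen3-Coder figures are approximate).
const totalCostUsd: Record<string, number> = {
  "Grok 4": 3.31,
  "Kimi K2": 0.471,
  "Qwen3-Coder": 0.228,
  "Claude Sonnet 4": 0.3,
};

function costMultiples(costs: Record<string, number>, baseline: string): Record<string, number> {
  const base = costs[baseline];
  if (base === undefined) throw new Error(`Unknown baseline model: ${baseline}`);
  const multiples: Record<string, number> = {};
  for (const [model, cost] of Object.entries(costs)) {
    if (model !== baseline) multiples[model] = base / cost;
  }
  return multiples;
}

for (const [model, multiple] of Object.entries(costMultiples(totalCostUsd, "Grok 4"))) {
  console.log(`Grok 4 cost ${multiple.toFixed(1)}x as much as ${model}`);
}
// Grok 4 cost 7.0x as much as Kimi K2
// Grok 4 cost 14.5x as much as Qwen3-Coder
// Grok 4 cost 11.0x as much as Claude Sonnet 4
```

The multiples range from about 7x to 14.5x depending on the comparison, so “roughly 10x” is a fair summary.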

Web development benchmark

Source: WebDev Arena (web.lmarena.ai) – Live human evaluations:

| Rank | Model | Arena Score | Provider | Votes |
|---|---|---|---|---|
| #1 | Claude 3.5 Sonnet | 1,239.33 | Anthropic | 25,309 |
| #2 | Gemini-Exp-1206 | ~1,220 | Google | ~15,000 |
| #3 | GPT-4o | ~1,200 | OpenAI | ~18,000 |
| #4 | DeepSeek-R1 | 1,198.91 | DeepSeek | 3,760 |
| #12 | | | | |
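To read the Arena Score gaps in the table above, it helps to remember that arena scores sit on an Elo-style scale. Assuming the standard Elo logistic with a 400-point scale, a score difference maps to an expected head-to-head win rate as follows (a sketch for intuition, not LMArena’s own code; `expectedWinRate` is a hypothetical name):

```typescript
// Sketch: expected probability that model A's output is preferred over model B's,
// assuming the standard Elo logistic curve with a 400-point scale.
function expectedWinRate(scoreA: number, scoreB: number): number {
  return 1 / (1 + Math.pow(10, (scoreB - scoreA) / 400));
}

// Claude 3.5 Sonnet (1,239.33) vs. DeepSeek-R1 (1,198.91), from the table above.
const p = expectedWinRate(1239.33, 1198.91);
console.log(`${(p * 100).toFixed(1)}%`); // 55.8%
```

So even a 40-point lead means the higher-ranked model wins only about 56% of head-to-head votes; rank positions alone can exaggerate how far apart models are.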

While Grok 4 crushes theoretical benchmarks, it doesn’t dominate when building actual components, CSS layouts, and JavaScript functionality. The “math professor-level” intelligence doesn’t translate to the very best frontend development experience.

TL;DR for frontend devs

Here’s how I’d recommend using Grok 4:

| Grok 4 | Recommendation |
|---|---|
| Best for | Algorithmic challenges, backend-heavy features |
| Not ideal for | UI builds, animations, CSS |
| Use instead for frontend | Claude Sonnet, Gemini, or Kimi K2 |

Conclusion

Grok 4 shines in technical, math-heavy backend work and will obliterate your LeetCode problems or complex algorithms. But when it comes to frontend, it falls short, struggling with basic UI tasks and lacking the polish needed for visual, user-facing interfaces. It’s a computational powerhouse, just not a frontend specialist.

The post Using Grok 4 in frontend development: Here’s what I’ve learned appeared first on LogRocket Blog.
