Qwen3-Coder: Is this Agentic CLI smarter than senior devs?

Introduction

Qwen, Alibaba’s model family, just dropped its most ambitious coding model yet: Qwen3-Coder-480B-A35B-Instruct. Behind the long name is a 480-billion-parameter Mixture-of-Experts model (35 billion parameters active per token) trained on 7.5 trillion tokens, with a 70% code ratio. This isn’t just another autocomplete engine; it’s designed to code, reason, and adapt.

Think of it as compressing the programming knowledge of thousands of bootcamp graduates into a single tool. It has knowledge that comes from building projects, not just reading documentation.

Qwen3-Coder is open source and technically self-hostable. But before you get excited, self-hosting isn’t cheap. Running it requires $50K+ in GPUs, not including power costs. For most developers, that means sticking with hosted options or API-based testing.

Goals

If you want to try Qwen3-Coder, you can experiment with it here. But remember: good tools still need good developers behind them. My goal here is to show how to use Qwen effectively to improve accuracy, cut down on repetitive tasks, and understand where it fits in a real workflow.

In this article, we’ll look at Qwen’s coding CLI, cover its benefits and use cases, and run some tests. Let’s see how it performs.

What Makes It Different

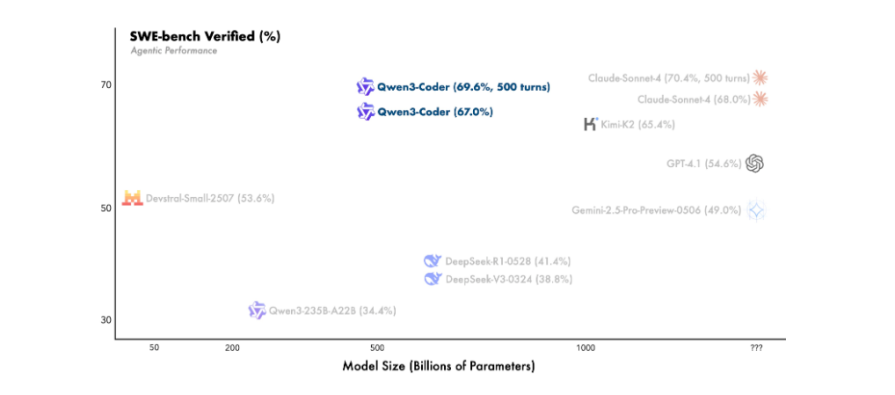

Unlike “smart autocomplete” tools, Qwen3-Coder takes on agentic tasks — browsing the web, using tools, and tackling projects end to end. Benchmarks claim it performs on par with Claude Sonnet 4, which is impressive for an open model.

It also supports 256K tokens natively and can extend to 1M tokens. That’s enough to load entire codebases into context.
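
To get a rough sense of whether a project fits in that window, you can estimate its token count from its size on disk. The sketch below assumes roughly four bytes per token, which is only a common rule of thumb and not Qwen’s actual tokenizer. The function names and the `./src` path are hypothetical.

```shell
#!/bin/sh
# Rough estimate only: assumes ~4 bytes per token (a rule of thumb,
# not the real Qwen tokenizer). 256K is taken here as 262144 tokens.
CONTEXT_TOKENS=262144

# estimate_tokens BYTES -> prints the approximate token count
estimate_tokens() {
  echo $(( $1 / 4 ))
}

# fits_in_context BYTES -> succeeds if the estimate fits in the window
fits_in_context() {
  [ "$(estimate_tokens "$1")" -le "$CONTEXT_TOKENS" ]
}

# Example usage (hypothetical ./src directory):
#   bytes=$(find ./src -type f -name '*.js' -exec cat {} + | wc -c)
#   fits_in_context "$bytes" && echo "fits" || echo "too large"
```

Under this heuristic, about 1 MB of source is the ceiling for the native window. Treat the result as a sanity check, not a guarantee.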

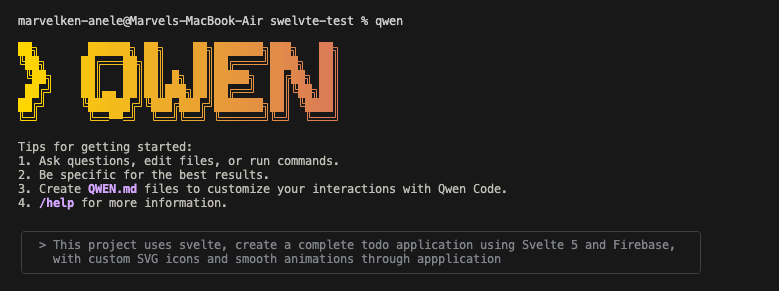

Qwen Code CLI

Qwen Code is the CLI built around this model. It’s essentially a fork of Google’s Gemini CLI, tuned to Qwen’s strengths — and it’s free. No more copy-pasting between your IDE and a chatbot; Qwen lives where you actually work: the terminal.

Benefits of Qwen3-Coder

- Massive context window: 256K tokens by default, expandable to 1M with YaRN.

- Agentic capabilities: Plans, iterates, and runs multi-step coding tasks. Qwen reports state-of-the-art results among open models on coding, browsing, and tool use.

- Real-world training: Pretrained on 7.5T tokens, then refined with reinforcement learning on real-world engineering scenarios.

- Tool integration: Works with your existing workflow instead of replacing it.

- OpenAI API compatibility: Supports providers via the OpenAI SDK format.

Use cases

- IDE integration: Use it in editors like Windsurf for pair programming.

- Chat interface: Query it directly via API for reviews or debugging.

- Cline integration: Configure with Cline for VS Code.

- Command line companion: Run Qwen in your terminal for quick, repeatable tasks.
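
Because the API follows the OpenAI format, a plain HTTP request is enough for the chat-style use case. Below is a minimal sketch, assuming the provider exposes the standard `/chat/completions` path under `OPENAI_BASE_URL` and that `OPENAI_API_KEY`, `OPENAI_BASE_URL`, and `OPENAI_MODEL` are set in your environment. The helper names `json_escape`, `build_payload`, and `ask_qwen` are made up for illustration.

```shell
#!/bin/sh
# Sketch only: assumes an OpenAI-compatible /chat/completions endpoint.

# json_escape TEXT -> escapes backslashes and double quotes.
# Limitation: newlines and control characters are not handled.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# build_payload MODEL PROMPT -> prints a chat-completions request body
build_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# ask_qwen PROMPT -> sends the prompt and prints the raw JSON response
ask_qwen() {
  curl -sS "$OPENAI_BASE_URL/chat/completions" \
    -H "Authorization: Bearer $OPENAI_API_KEY" \
    -H "Content-Type: application/json" \
    -d "$(build_payload "$OPENAI_MODEL" "$1")"
}

# Example usage:
#   ask_qwen "Review this function for off-by-one errors: ..."
```

For anything beyond single-line prompts, a proper JSON tool or an OpenAI-compatible SDK is a safer choice than hand-built strings.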

Testing Qwen3-Coder

We tested Qwen3-Coder using its CLI, where most real dev work happens.

Prerequisites: Node.js with a recent npm. The following script installs the latest npm:

curl -qL https://www.npmjs.com/install.sh | sh

Install Qwen Code:

npm i -g @qwen-code/qwen-code

Or install from source:

git clone https://github.com/QwenLM/qwen-code.git
cd qwen-code && npm install && npm install -g

API Configuration:

export OPENAI_API_KEY="your_api_key_here"
export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
export OPENAI_MODEL="qwen/qwen3-coder"

(Tip: Save these in a .env file so you don’t need to re-export.)
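
A `.env` file for this setup would hold the same three assignments without the `export` keyword. If you also need those values in the shell itself, for example for a script that calls the API directly, the sketch below loads them. `load_env` is a hypothetical helper, and it assumes the file contains only simple `KEY="value"` lines.

```shell
#!/bin/sh
# load_env FILE -> exports every KEY=VALUE line from FILE into the shell.
# Assumes the file contains only simple shell-style assignments.
load_env() {
  [ -f "$1" ] || return 1
  set -a        # auto-export every variable assigned from here on
  . "$1"
  set +a        # stop auto-exporting
}

# Example usage:
#   load_env ./.env && echo "Using model: $OPENAI_MODEL"
```

Keep the `.env` file out of version control, since it contains your API key.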

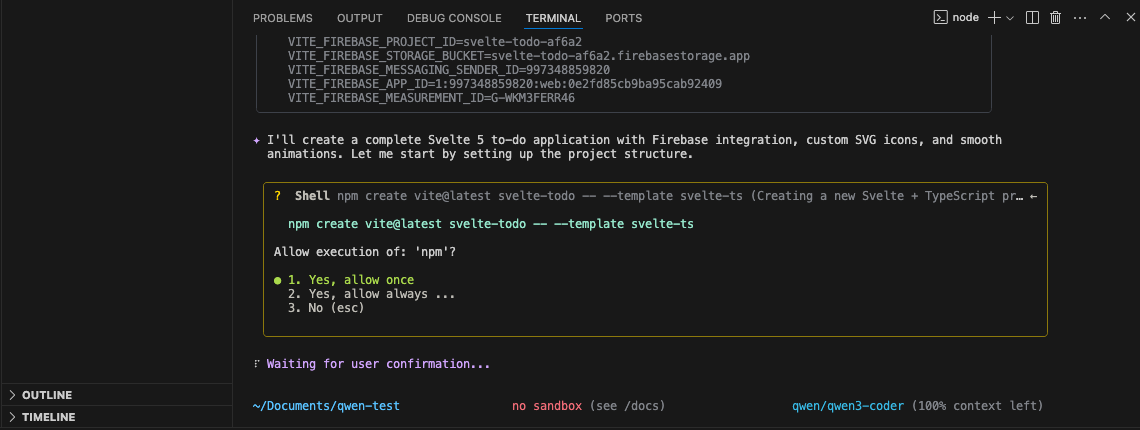

The first test: Svelte 5 + Firebase Todo App

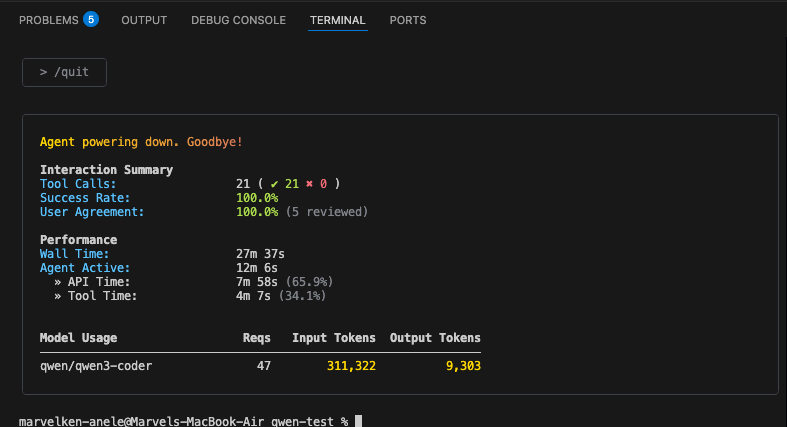

Observations: Qwen3-Coder performance analysis

Let’s break down what these results actually tell us about Qwen3-Coder’s strengths — and where it still struggles.

The good:

- 100% success rate on tool calls — when Qwen3-Coder decides to use a tool or run a command, it nails it.

- Complete user agreement — 4/4 reviewers said the final output met expectations.

- Token efficiency — just 2,584 output tokens for 212,692 input tokens; it’s not overly verbose or wasteful.

The reality check:

- 21+ minutes total time for a todo app is long, even if only ~5 minutes was actual AI compute time.

- Three iterations required — pretty typical of AI coding today, but it shows it rarely gets complex builds right the first time.

- 37 separate requests — lots of back-and-forth needed to get the final working app.
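
The raw numbers above are easier to compare as ratios. Here is a small sketch that derives them with `awk`, using only the figures reported for this test. The function names are arbitrary, and the time share treats "21+ minutes" and "~5 minutes" as 21 and 5, so it is approximate.

```shell
#!/bin/sh
# Figures reported for the todo app test.
INPUT_TOKENS=212692
OUTPUT_TOKENS=2584
REQUESTS=37

# percent PART WHOLE -> PART as a percentage of WHOLE, two decimals
percent() {
  awk -v p="$1" -v w="$2" 'BEGIN { printf "%.2f", p / w * 100 }'
}

# per_request TOTAL COUNT -> average per request, rounded to an integer
per_request() {
  awk -v t="$1" -v c="$2" 'BEGIN { printf "%.0f", t / c }'
}

echo "Output/input token ratio: $(percent "$OUTPUT_TOKENS" "$INPUT_TOKENS")%"
echo "Average input tokens per request: $(per_request "$INPUT_TOKENS" "$REQUESTS")"
echo "Approximate share of time spent on AI compute: $(percent 5 21)%"
```

In other words, output was about 1.2% of input, each request carried roughly 5,700 input tokens on average, and under a quarter of the elapsed time was model compute.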

What does this reveal about AI coding?

The three-iteration pattern is actually pretty typical for complex coding tasks with current AI.

The second test: Recreating X’s UI

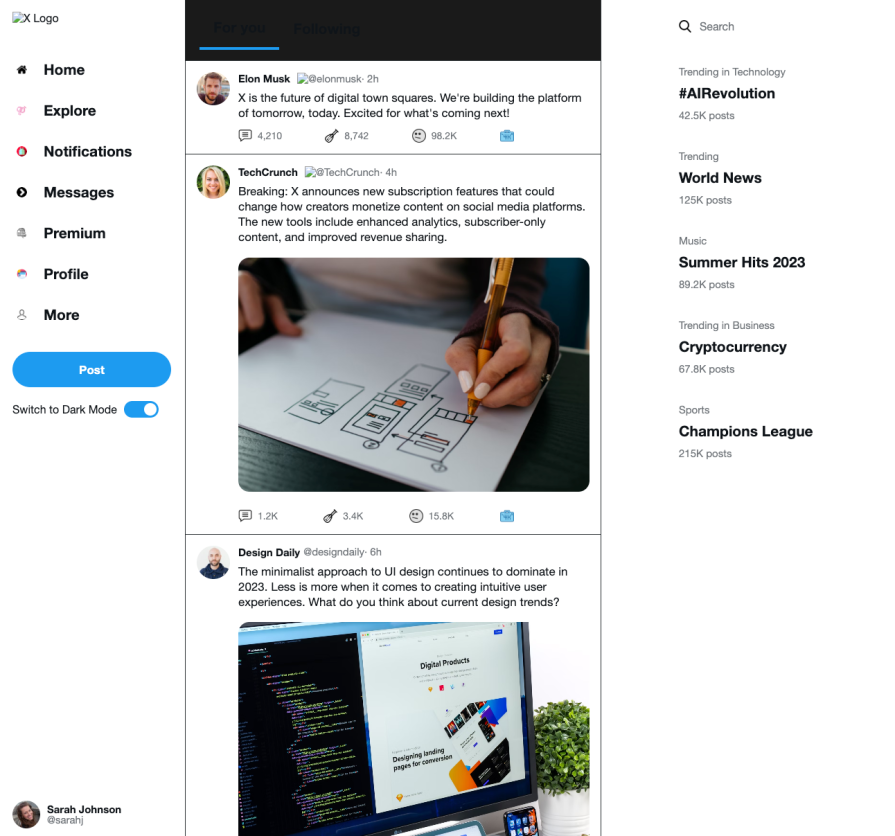

Next, we asked Qwen to rebuild X’s (formerly Twitter) homepage with HTML, CSS, and JS. I ran this same test on Gemini 2.5 Pro’s UI capabilities when it first came out, and it was excellent. Let’s see how Qwen stacks up in comparison. Here’s the prompt:

In one HTML file, recreate the X home page on desktop. Look up X to see what it recently looks like, put in real images everywhere an image is needed, and add a toggle functionality for themes.

Here it is in light mode:

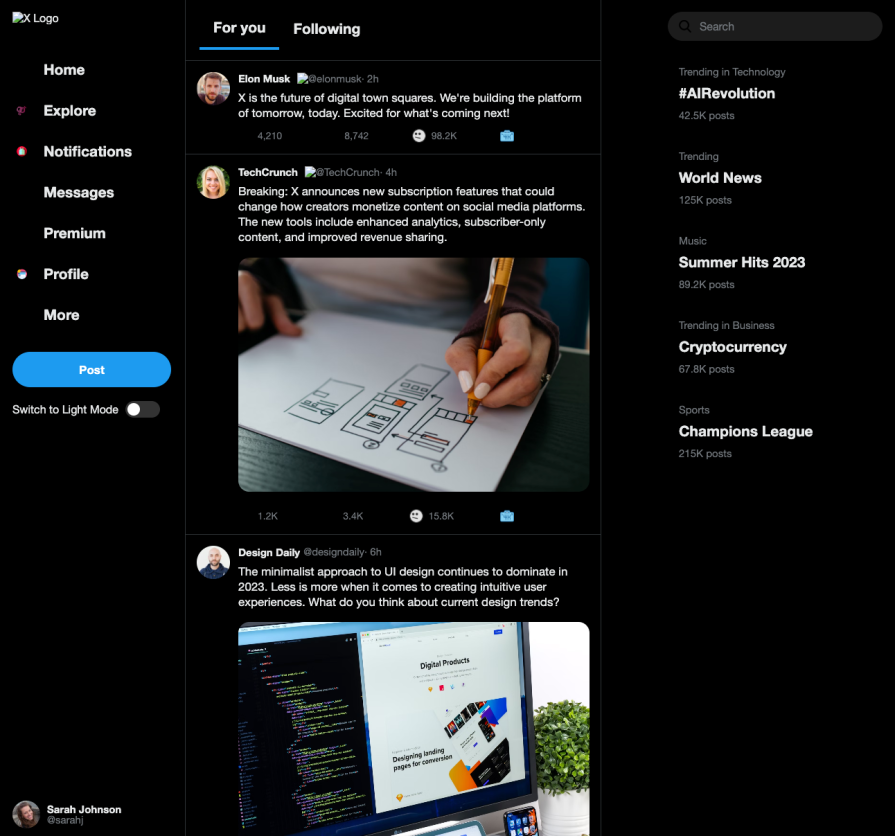

And in dark mode:

The results were functional, with light/dark mode included. While not perfect, it produced a working draft with real images and toggle support after a few refinements.

Conclusion

Qwen3-Coder isn’t outperforming Claude Code yet, but it’s close. The biggest draw is that Qwen is far more cost-effective. As a Gemini CLI fork, it is also accessible: an open, flexible option that can integrate into real workflows.

Expect some iteration and patience, but the fact that Qwen3-Coder handled Svelte 5 and Firebase shows promise. Sometimes in AI, timing and accessibility matter more than perfection, and Qwen may have nailed both.

The post Qwen3-Coder: Is this Agentic CLI smarter than senior devs? appeared first on LogRocket Blog.
