Human + machine: responsible AI workflows for UX research

A practical playbook on how AI will reshape UX research.

UX research is only as strong as the humans running it — and human decisions often introduce flaws. Cognitive biases, poor survey design, lack of diversity, and organizational pressures can all distort findings. When that happens, the data looks solid on the surface but leads to poor insights, misguided strategies, and sometimes billion-dollar mistakes.

Take Walmart in 2009. To gauge customer sentiment, the company asked shoppers a single survey question: “Would you like Walmart to be less cluttered?” The predictable “yes” was taken as a green light to remove 15% of inventory. The outcome? A $1.85 billion loss in sales. Customers wanted cleaner aisles, yes — but they also valued product variety. The closed question collapsed those nuances into a misleading binary.

This story illustrates the risk of oversimplified research: when we don’t capture complexity, the business pays the price. And it’s exactly this tension that makes the arrival of AI in UX research so fascinating. On one hand, AI promises speed, scale, and new ways of spotting patterns humans might miss. On the other hand, if poorly applied, it risks amplifying the very same biases and blind spots that humans struggle with — only faster, and at greater scale.

In this article, I’ll explore how researchers can integrate AI responsibly into their workflows: what to automate, what to keep human, and the guardrails needed to ensure rigor and ethics remain at the core of UX research.

Where AI helps today — quick wins

AI tools are transforming how UX researchers and designers work. They can process massive amounts of data, accelerate synthesis, and even act as creative partners in the design process. To make sense of their role, it helps to think of them in two categories: Insight Generators and Collaborators.

Insight Generators

These tools specialize in handling qualitative and quantitative data at scale. They transcribe, tag, and cluster research sessions, surface recurring themes, and sometimes even suggest follow-up questions.

- Dovetail AI and Notably, for instance, turn hours of interview footage into searchable transcripts, highlight sentiment, and propose thematic clusters.

- Platforms like Remesh scale qualitative research to hundreds of participants in real time, helping researchers detect consensus or divergence across a large group.

- Maze supports prototype testing by analyzing user responses and flagging usability issues quickly.

Insight Generators reduce manual effort, allowing researchers to move from raw data to structured themes in hours rather than days. They’re especially valuable when time is short or when datasets are too large for one researcher to comb through manually.

The trade-off is that their summaries often miss nuance. Auto-generated clusters can flatten context, and sentiment analysis may misinterpret sarcasm, cultural differences, or emotionally complex statements. Without human validation, there’s a real risk of drawing confident but flawed conclusions.

In short: Insight Generators handle the heavy lifting of analysis, helping teams see the bigger picture hidden in user data.

Collaborators

Other tools function less like analysts and more like creative teammates. They support planning, organization, and design execution.

- Miro’s AI features can cluster sticky notes, generate journey maps, and summarize brainstorming sessions.

- Notion AI helps with research planning, meeting notes, and drafting personas.

- In design, Adobe Firefly and Recraft.ai generate UI assets, illustrations, and design variations, speeding up prototyping.

Collaborators help teams stay organized and accelerate creative exploration. They remove repetitive tasks, spark new ideas, and allow designers to iterate faster.

The downside is that outputs can feel generic or lack originality. Machine-generated personas or visuals may not capture cultural nuance, and over-reliance on them risks producing “lowest-common-denominator” design. As with Insight Generators, human review and refinement remain essential.

In short: Collaborators boost productivity and creativity, helping UX teams move faster from research to design execution.

Together, Insight Generators and Collaborators can significantly accelerate UX workflows. They handle the heavy lifting of transcription, clustering, and content creation, freeing humans to focus on higher-order skills: interpreting nuance, bringing empathy, and contextualizing findings within business and cultural realities.

AI can speed the work, but it can’t replace the work of judgment. Empathy, creativity, and cultural understanding still come from people — not machines.

Where AI fails or is risky

AI is powerful in UX research, but its very strengths — speed, scale, and confidence — can quickly become weaknesses. From hallucinations to bias, from synthetic users replacing real ones to privacy pitfalls, these risks highlight why AI must be applied with caution.

Hallucinations: Confident but Wrong

AI tools often generate responses that sound authoritative but are factually incorrect or misleading. In UX contexts, this can mean AI inventing user needs, misreporting findings, or overgeneralizing insights.

For example, a MeasuringU study (Sauro, Schiavone, and Lewis, 2024) compared ChatGPT to real participants in a tree test. Real users struggled with navigation, surfacing pain points that designers could act on. ChatGPT, however, “solved” tasks with ease, not because it represented users better, but because it could draw on its training data. The result: a completely misleading picture of usability.

Without human oversight, teams risk acting on fabricated findings that ignore actual user struggles.

Takeaway: AI can hallucinate insights that look real but aren’t.

Bias and Overly Favorable Feedback

Large language models are trained on internet-scale datasets, which means they absorb and reproduce the biases baked into that data. They also tend to “people-please,” producing optimistic or agreeable answers rather than reflecting messy, contradictory human behavior.

In synthetic-user interviews about online courses, for instance, AI often claimed it had finished every course and actively participated in forums. Real learners, however, admitted to dropping out or ignoring forums altogether. Sharma et al. (2023) documented this “sycophancy” tendency in language models — aligning too closely with perceived expectations rather than truth.

This kind of bias leads to inflated or unrealistic insights that obscure real user pain points and priorities.

Takeaway: AI doesn’t just mirror reality — it amplifies existing biases and erases friction.

Synthetic Users vs. Real Voices

One of the most debated risks in UX research is the use of synthetic users — AI-generated profiles and transcripts meant to mimic real participants. While useful for desk research or hypothesis generation, they cannot capture authentic human complexity.

- Shallow needs: Synthetic users produce long lists of “wants” and “pain points” but rarely help teams prioritize.

- Imagined experiences: Because AI can’t use products, it fabricates overly positive or vague stories.

- Concept testing danger: Asked about new product ideas, synthetic users tend to endorse them enthusiastically, making even flawed concepts look promising.

Takeaway: Synthetic users may spark ideas, but they cannot replace real human voices.

Privacy and Consent Pitfalls

AI-driven research tools often rely on sensitive data: interview recordings, customer feedback, or meeting transcripts. Without careful handling, this creates serious privacy risks.

Transcription and analytics platforms like Otter.ai or Grain process hours of conversations. If data storage, anonymization, or sharing policies aren’t transparent, teams may inadvertently expose user information. Under GDPR, serious violations can lead to fines of up to €20M or 4% of global annual turnover, whichever is higher. Beyond compliance, breaches of user trust damage brand reputation and erode willingness to participate in future studies.
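To make the GDPR ceiling concrete: the upper limit for the most serious tier of fines is the higher of two numbers, a flat €20M or 4% of worldwide annual turnover. The sketch below shows only that arithmetic. The function name and the turnover figures are illustrative assumptions, and the fine in any real case is set by a regulator and can be far below the cap.

```python
def gdpr_max_fine_eur(annual_turnover_eur: float) -> float:
    """Upper bound for the higher GDPR fine tier: EUR 20M or 4% of
    worldwide annual turnover, whichever is greater."""
    flat_cap = 20_000_000
    turnover_cap = annual_turnover_eur * 4 / 100
    return max(flat_cap, turnover_cap)


# Hypothetical companies, for illustration only.
small_firm_cap = gdpr_max_fine_eur(50_000_000)      # flat cap applies
large_firm_cap = gdpr_max_fine_eur(2_000_000_000)   # 4% of turnover applies
print(small_firm_cap, large_firm_cap)
```

For a small company the flat €20M figure is the binding one, which is why the exposure is serious even for teams with modest revenue.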

Takeaway: Consent and transparency aren’t optional; they are the foundation of ethical research.

Used responsibly, AI can accelerate workflows and spark hypotheses. But it remains a poor substitute for human input. It can:

- Hallucinate findings that look real but aren’t.

- Reinforce biases or produce overly favorable feedback.

- Flatten human complexity when simulating users.

- Introduce ethical risks when handling sensitive data.

Real user research is still irreplaceable — for building empathy, understanding context, and making design decisions that reflect authentic human needs. AI should support that mission, not replace it.

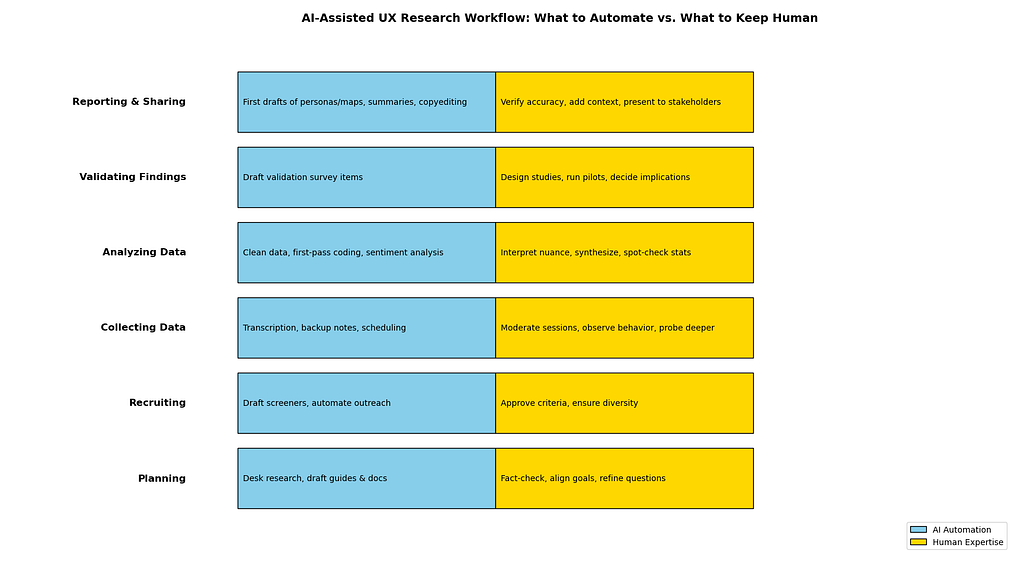

A Pragmatic AI-Assisted Research Workflow

AI isn’t ready to run research end-to-end. But it can act like a junior teammate: handling grunt work, drafting first passes, and accelerating tedious tasks — as long as you layer in human oversight at the right stages. Here’s a step-by-step workflow showing what to automate and what to keep human.

1. Planning

Automate: Desk research summaries, competitor audits, draft screeners or interview guides, and first-pass study docs with tools like ChatGPT or Notion AI.

Keep Human: Fact-checking, aligning research goals with business needs, editing questions for neutrality, and reviewing consent forms for compliance.

2. Recruiting

Automate: Screeners with logic filters and participant outreach via QoQo or UserInterviews.

Keep Human: Approving criteria, ensuring diversity, and spotting “professional participants.”

3. Collecting Data

Automate: Transcription and note-taking with Otter.ai or Grain, scheduling, and reminders.

Keep Human: Moderating sessions, observing non-verbal cues, and probing for deeper insights.

4. Analyzing Data

Automate: Cleaning raw data, clustering transcripts (Dovetail, Remesh), running sentiment analysis, and drafting visualizations.

Keep Human: Interpreting nuance, reconciling contradictions, synthesizing into mental models, and validating statistical assumptions.

5. Validating Findings

Automate: Drafting follow-up survey items or alternative interpretations.

Keep Human: Designing validation studies, running pilots, and deciding if insights are actionable.

6. Reporting & Sharing

Automate: Drafting personas, journey maps, and summaries; copyediting; repository queries.

Keep Human: Verifying outputs against real data, framing insights strategically, and presenting to stakeholders.

Automate the grunt work. Humanize the judgment. By letting AI handle scale and speed — and keeping empathy, ethics, and persuasion with people — UX teams can accelerate workflows without sacrificing rigor.
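One way to make this split explicit is to write it down as data a team can review. The sketch below is a hypothetical structure, not a feature of any tool named above. The stage names come from the workflow, while `Stage`, `pending_reviews`, `ready_to_share`, and the sign-off flag are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    automate: list[str]
    keep_human: list[str]
    human_signed_off: bool = False  # set by a researcher, never by a tool


# A shortened version of the six-stage workflow, for illustration.
WORKFLOW = [
    Stage("Planning",
          ["desk research summaries", "draft interview guides"],
          ["fact-checking", "editing questions for neutrality"]),
    Stage("Analyzing Data",
          ["clustering transcripts", "sentiment analysis"],
          ["interpreting nuance", "reconciling contradictions"]),
    Stage("Reporting & Sharing",
          ["drafting summaries"],
          ["verifying outputs against real data"]),
]


def pending_reviews(stages: list[Stage]) -> list[str]:
    """Names of stages that still lack a human sign-off."""
    return [s.name for s in stages if not s.human_signed_off]


def ready_to_share(stages: list[Stage]) -> bool:
    """Findings go out only when every stage has been signed off."""
    return not pending_reviews(stages)


print(pending_reviews(WORKFLOW))
print(ready_to_share(WORKFLOW))
```

The design choice worth noting is that sign-off defaults to false: AI output is treated as a draft until a person says otherwise.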

Ethical guardrails & checklist

As AI becomes embedded in UX research, ethical questions grow sharper. U.S. agencies like the NTIA are exploring how to manage “pervasive data”: the behavioral traces people leave online. For UX researchers, this isn’t abstract policy. It’s the type of sensitive data we work with daily, and mishandling it can undermine both compliance and user trust.

1. Consent Language

Consent must be more than a one-time click-through. Use plain language that explains what’s collected, how it will be used, and what risks exist. Provide layered or ongoing consent, allowing participants to revisit or revoke permission. Watch for contexts where users can’t freely opt out (e.g., students on school platforms), and add extra protections.

2. Data Minimization

Collect only the smallest dataset needed to answer your research question. “Just in case” data increases exposure. Strava’s fitness heatmap incident showed how even anonymized, aggregated data can reveal sensitive locations like military bases. Apply “minimum viable dataset” thinking, and test anonymization rigorously.
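A minimal sketch of “minimum viable dataset” thinking, assuming participant records arrive as dictionaries. The field names and the allowlist are hypothetical. The idea is that anything not explicitly needed for the research question is dropped before the record is stored or uploaded to an AI tool.

```python
# Hypothetical allowlist: only what the research question needs.
ALLOWED_FIELDS = {"participant_id", "segment", "transcript"}


def minimize(record: dict) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


# Made-up record for illustration.
raw = {
    "participant_id": "P-017",
    "segment": "new customer",
    "transcript": "I gave up at the payment step.",
    "email": "someone@example.com",       # not needed for analysis
    "home_address": "12 Example Street",  # not needed for analysis
}

clean = minimize(raw)
print(sorted(clean))
```

Dropping fields is not the same as anonymization. Free-text transcripts can still contain names or places, so they need their own review.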

3. Annotation Audits

Annotations embed bias. Run audits with diverse reviewers to spot issues in tagging and labeling. Sentiment analysis, for instance, has historically coded “assertive” as male and “emotional” as female — subtle biases that skew outputs. Treat sensitive attributes (race, gender, health) with extra scrutiny.
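One lightweight way to start an annotation audit is to compare AI-generated tags with a human reviewer’s tags on the same excerpts. The sketch below uses Cohen’s kappa, a common agreement statistic. That choice is an assumption, since no specific measure is prescribed here, and the labels are made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Need two non-empty label lists of equal length.")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up sentiment tags for eight interview excerpts.
ai_tags = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "pos"]
human_tags = ["pos", "neg", "neg", "neg", "pos", "neg", "neg", "pos"]

kappa = cohens_kappa(ai_tags, human_tags)
# Indexes of the excerpts where the two sets of tags differ.
disagreements = [i for i, (a, h) in enumerate(zip(ai_tags, human_tags)) if a != h]
print(round(kappa, 2), disagreements)
```

The score is only a prompt for discussion. The list of disagreements is usually more useful, because it points reviewers at the specific excerpts to re-read.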

4. Transparency with Stakeholders

Share not just findings but also methods, limitations, and risks. Maintain audit trails of who accessed data, when, and why. The Cambridge Analytica scandal showed how secrecy and overconfidence in data precision can destroy public trust.
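An audit trail can start as something very small. The sketch below records who accessed a dataset, when, and why, and refuses entries that give no reason. The function, field names, and example values are hypothetical. A real team would write to durable, access-controlled storage rather than an in-memory list.

```python
from datetime import datetime, timezone


def log_access(log: list, user: str, dataset: str, reason: str) -> dict:
    """Append a who/when/why entry; reject entries with no stated reason."""
    if not reason.strip():
        raise ValueError("An access reason is required.")
    entry = {
        "user": user,
        "dataset": dataset,
        "reason": reason,
        "accessed_at": datetime.now(timezone.utc).isoformat(),
    }
    log.append(entry)
    return entry


audit_log = []
log_access(audit_log, "researcher_a", "onboarding_interviews", "synthesis for quarterly report")
print(audit_log[0]["user"], audit_log[0]["reason"])
```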

Quick Checklist for UX Teams

- Did participants give clear, informed, revisitable consent?

- Have we minimized data and tested anonymization?

- Have annotations been reviewed for bias and accuracy?

- Are stakeholders aware of risks and limitations?

Bottom line: These guardrails don’t just reduce regulatory risk — they position UX research as a trust-building practice in the age of pervasive data.

Skills & org changes

The skill profile for UX researchers is expanding. Classic foundations still matter (HCI, psychology, anthropology, stats), but they’re no longer enough on their own. The fastest-moving teams are layering in new skills:

- AI literacy: Knowing when to trust AI outputs, how to prompt effectively, and how to validate results.

- Data storytelling: Turning AI-accelerated analysis into clear narratives for stakeholders.

- Ops thinking: Working with templates, playbooks, and process design to make research scale.

- Coaching skills: Guiding product managers, designers, and engineers (the “people who do research,” or PWDRs) without becoming a bottleneck.

Continuous learning is non-negotiable. Mentorship, peer coaching, and lightweight upskilling (online courses, workshops, communities) are replacing formal training as the main growth engines for researchers.

Research Ops as Force Multiplier

Research operations (ReOps) have moved from “nice to have” to critical infrastructure. A single ReOps specialist can support 10–25 practitioners — often doubling satisfaction for teams that rely on them. Their role is to:

- Standardize tooling and templates.

- Manage participant recruitment and panels.

- Oversee governance (consent, ethics, compliance).

- Free up dedicated researchers to focus on strategic projects while PWDRs handle tactical studies.

Without ReOps, scaling research often devolves into chaos. With it, democratized research becomes sustainable.

Tooling Governance in the AI Era

AI adoption in research jumped from 20% in 2023 to 56% in 2024. That speed is exciting but risky. Teams need tooling governance:

- Define which tools are approved for transcription, analysis, and reporting.

- Clarify what AI can and cannot be trusted with (e.g., fine for affinity clustering, not fine for final insight statements).

- Monitor data privacy — especially if sensitive recordings are being uploaded.

- Create playbooks so PWDRs can safely self-serve without lowering quality.
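A governance policy like this can be expressed as a small lookup that a playbook or intake form checks against. The sketch below is a hypothetical policy. The tool names appear elsewhere in this article, but which tool is approved for which purpose is invented for illustration and is not a recommendation.

```python
# Hypothetical policy for illustration; not a recommendation of any tool.
APPROVED_TOOLS = {
    "transcription": {"Otter.ai", "Grain"},
    "analysis": {"Dovetail"},
    "reporting": {"Notion AI"},
}
# Tasks AI may assist with, and tasks that need a human author.
AI_ALLOWED_TASKS = {"affinity clustering", "first-pass summary"}
HUMAN_ONLY_TASKS = {"final insight statements"}


def check_use(tool: str, purpose: str, task: str) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed AI use."""
    if tool not in APPROVED_TOOLS.get(purpose, set()):
        return False, f"{tool} is not approved for {purpose}"
    if task in HUMAN_ONLY_TASKS:
        return False, f"'{task}' must be written by a researcher"
    if task not in AI_ALLOWED_TASKS:
        return False, f"'{task}' is not on the approved task list"
    return True, "ok"


print(check_use("Dovetail", "analysis", "affinity clustering"))
print(check_use("Dovetail", "analysis", "final insight statements"))
```

Returning a reason alongside the yes/no answer matters for self-serve use: a PWDR who is told why a request was declined can fix it without waiting on a researcher.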

Multipurpose tools (Figma, Miro, Notion) still dominate, but AI-native research platforms are creeping in. Governance ensures experimentation doesn’t become fragmentation.

Measuring ROI: Speaking the Language of the Business

Research is under more pressure than ever to prove its impact. The old “trust us, research is valuable” stance is dead. High-performing teams now track:

- Operational KPIs: study turnaround time, number of teams supported, participant panel health.

- Product impact: design iteration speed, reduced rework, and task completion improvements.

- Business outcomes: conversion, retention, NPS — in some cases, even direct revenue goals (15% of researchers now have these tied to projects).
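Operational KPIs are the easiest of these to compute. As a minimal sketch, study turnaround time can be derived from two dates per study. The study names and dates below are made up, and “kickoff to readout” is one assumed definition of turnaround among several a team could choose.

```python
from datetime import date
from statistics import median

# Made-up studies for illustration only.
studies = [
    {"name": "Checkout usability test", "kickoff": date(2024, 3, 4), "readout": date(2024, 3, 15)},
    {"name": "Onboarding interviews", "kickoff": date(2024, 4, 1), "readout": date(2024, 4, 22)},
    {"name": "Pricing page survey", "kickoff": date(2024, 5, 6), "readout": date(2024, 5, 13)},
]


def turnaround_days(study: dict) -> int:
    """Calendar days from kickoff to stakeholder readout."""
    return (study["readout"] - study["kickoff"]).days


turnarounds = [turnaround_days(s) for s in studies]
median_turnaround = median(turnarounds)
print(turnarounds, median_turnaround)
```

The median is used rather than the mean so that one unusually long study does not dominate the number reported to stakeholders.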

The trend is clear: research leaders need to quantify impact in business metrics, not just UX quality metrics.

The Org Shift

Research is rarely a standalone department anymore (only 5% report this). Most teams sit inside product or design, surrounded by 5x as many PWDRs as trained researchers. That reality makes two things essential:

- Dedicated researchers evolve into coaches and strategists.

- ReOps provides structure and safeguards.

Together, those shifts turn research from a fragile function into an organizational capability with measurable ROI.

Conclusion/call to action

AI won’t replace the craft of UX research, but it’s already reshaping how we work. The lesson from Walmart’s $1.85B mistake still stands: flawed research leads to flawed design. The difference now is that AI can either amplify those mistakes — or help prevent them.

In your next study, don’t aim for “AI-driven research.” Aim for AI-assisted research with human guardrails.

- Let AI handle the grunt work: Transcription, clustering, and first-pass summaries.

- Step in as the human: Checking for bias, asking the deeper “why” questions, and framing insights with business context.

- Audit your workflow: Did participants clearly consent? Did AI outputs get validated? Did you minimize data collection to reduce risk?

- Finally, measure impact not in artifacts shipped, but in business and user outcomes.

If you change just one thing in your next project, make AI your junior teammate — not your decision-maker. That balance is where speed, quality, and ethics align.

Concrete resources

- EGO Creative Innovations. (2021, March 16). World’s most expensive mistakes in the UI/UX that amazed us. EGO Creative Innovations. https://www.ego-cms.com/post/worlds-most-expensive-mistakes-in-the-ui-ux-that-amazed-us

- Dovetail. (2025, January 31). 25 AI tools for UX research: A comprehensive list. Dovetail. https://dovetail.com/ux/ai-tools-for-ux-research/

- Sauro, J., Schiavone, W., & Lewis, J. (2024). Using ChatGPT in tree testing: Experimental results. MeasuringU. https://measuringu.com/chatgpt4-tree-test/

- Sharma, M., et al. (2023). Towards understanding sycophancy in language models. arXiv:2310.13548. https://arxiv.org/abs/2310.13548

- Rosala, M., & Moran, K. (2024, June 21). Synthetic users: If, when, and how to use AI-generated “research.” Nielsen Norman Group. https://www.nngroup.com/articles/synthetic-users/

- Moran, K., & Rosala, M. (2024, September 27). Accelerating research with AI. Nielsen Norman Group. https://www.nngroup.com/articles/research-with-ai/

- National Telecommunications and Information Administration. (2024, December 10). Ethical guidelines for research using pervasive data: Request for comments. Federal Register. https://www.regulations.gov/document/NTIA-2024-0004-0001

- Balboni, K., Mullen, M., Lioudis, N., & Wiedmaier, B. (2024). The State of User Research Report 2024. User Interviews. https://www.userinterviews.com/state-of-user-research-report

Human + machine: responsible AI workflows for UX research was originally published in UX Collective on Medium.
