Building trust in opaque systems

Why the better AI gets at conversation, the worse we get at questioning it

How do we know when to trust what someone tells us? In-person conversations give us many subtle cues to pick up on, but when the conversation is with an AI system designed to sound perfectly human, we lose that frame of reference.

With every new model, conversational AI sounds more and more genuinely intelligent and human-like, so much so that every day, millions of people chat with these systems as if talking to their most knowledgeable friend.

From a design perspective, they’re very successful: they feel natural, authoritative and even empathetic. But this very naturalness becomes a problem, because it makes it hard to tell whether an output is true or merely plausible.

This creates exactly the setup for misplaced trust: trust works best when paired with critical thinking, but the more we rely on these systems, the worse we get at it, ending up in this odd feedback loop that’s surprisingly difficult to escape.

The illusion of understanding

Traditional software is straightforward: click this button, get that result. AI systems are something else entirely. They’re unpredictable, because they generate new output based on their training data rather than following a fixed path. If we ask the same question twice, we might get completely different wording, reasoning, or even different conclusions each time.

The way these systems seem to think and speak in such human ways feels like magic to many users. Without understanding what’s happening under the hood, it’s easy to miss that those “magical” sentences are ‘simply’ the most statistically probable chain of words, making these systems something closer to a ‘glorified Magic 8 Ball’.
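To make the “statistically probable chain of words” idea concrete, here is a minimal sketch of how a next word might be picked. Everything in it is an assumption for illustration: the prompt, the three candidate words and their probabilities are invented, and real models score tens of thousands of tokens with far more machinery.

```python
import random

# Hypothetical next-word probabilities for the prompt "The capital of France is".
# The words and numbers are invented for illustration only.
NEXT_WORD_PROBS = {"Paris": 0.90, "Lyon": 0.06, "beautiful": 0.04}

def most_probable(probs):
    """Greedy choice: always return the single most likely word."""
    return max(probs, key=probs.get)

def sample(probs, rng):
    """Sampled choice: pick a word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random()
print(most_probable(NEXT_WORD_PROBS))
print([sample(NEXT_WORD_PROBS, rng) for _ in range(5)])
```

Because the sampled version rolls weighted dice, asking twice can produce different words, which is one reason the same question may get different answers. Note that nothing in either function checks whether the chosen word is true; it only checks whether it is likely.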

Back in 2022, when ChatGPT opened to the public, I was admittedly mesmerised by it too, and after it proved useful in a couple of real-world situations, I started reaching for it more and more, even for simple questions and tasks.

Until one day I was struggling with a presentation segment that felt flat compared to the rest and asked Claude for ideas on how to make it more compelling. We came up with a story I could reference, one I was already familiar with, but there was this one detail that felt oddly specific, so I asked for the source.

You can imagine my surprise when Claude casually mentioned it had essentially fabricated that detail for emphasis.

How easily I had accepted that made-up information¹ genuinely unsettled me, and it became the catalyst for me to really try to understand what I was playing with. What I didn’t know at the time was that this behaviour is exactly what these systems are designed to do: generate responses that sound right, regardless of whether they’re actually true.

Human-like, but not human

The core problem when it comes to building trust in AI is that the end goal of these systems (utility) works directly against the transparency needed to establish genuine trust.

To maximise usefulness, AI needs to feel seamless and natural: nobody wants to talk to a robot, and its assistance should be almost invisible. We wouldn’t consciously worry about the physics of speech during conversation, so why should we think about AI mechanics? We ask a question, we get an answer.

But healthy scepticism requires transparency, which inevitably introduces friction. We should pause, question, verify, and think critically about the information we receive. We should treat these systems as the sophisticated tools they are rather than all-knowing beings.

The biggest players seem to be solving for trust by leaning into illusion rather than transparency.

One key technique is anthropomorphising the interface through language choices. Take the many “thinking” indicators that appear while the system is actually just preparing a response: they’re a deliberate attempt at building trust. This works brilliantly because these human-like touches make users feel connected and understood.

However, giving AI qualities like these thinking indicators, conversational tone, personality, and “empathy” creates two subtle yet critical problems:

#1

Giving AI human-like qualities makes us lose the uncertainty signals that would normally help us detect when something is off. Humans naturally signal the limits of what they know through hesitation, qualifying statements (like “I think…” or “maybe…”), or simply by admitting uncertainty. These are very helpful signals that let us know when to be more careful about trusting what someone is saying.

AI systems, however, rarely do this. They can sound equally confident whether they’re giving you the population of Tokyo (which they probably know) or making up a detail about a case study (which they definitely don’t know). That’s why detecting a mistake or a “lie” in these cases can be extremely hard.

#2

On top of this, users who feel a deeper connection to the AI are more likely to assume it will perform better. So we end up trusting it based on how it feels rather than how well it actually works.

The industry calls this trust calibration, which is about finding the right level of trust so that users rely on AI systems appropriately, or in other words, in just the right amount based on what those systems can actually do. This is no easy feat in general, but because AI often sounds confident while being opaque and inconsistent, getting this balance right is extremely challenging.

So how are companies currently attempting to solve this calibration problem?

The limits of current solutions

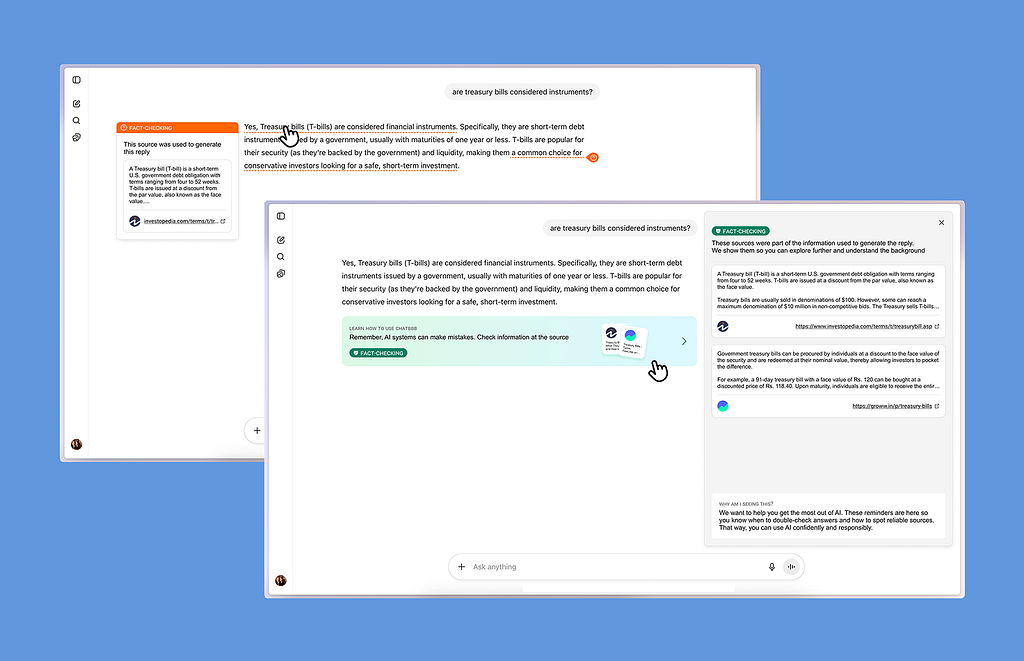

As a solution, there’s a lot of talk around explainability. This refers to turning AI systems’ hidden logic into something humans can make sense of, helping users decide when to trust the output (and more importantly, when not to do so).

Yet, this information only appears spontaneously in scenarios like medical or financial advice, or when training data is limited. In more routine interactions — brainstorming, seeking advice — users would need to actively prompt the AI to reveal the reasoning (as I had to do with Claude).

Imagine constantly interrupting a conversation to ask someone where they heard something. The chat format creates an illusion of natural conversation that ends up discouraging the very critical thinking that explainability is meant to enable.

Recognising these challenges, companies implement various other guardrails: refusal behaviours for harmful tasks, contextual warnings for sensitive topics, or straight up restriction of certain capabilities. These aim to prevent automation bias: our tendency to over-rely on automated systems.

These guardrails, though, have significant limitations. Not only are there known workarounds, but they fail to account for how these tools are actually used by millions of people with vastly different backgrounds and technical literacy.

The contradiction becomes obvious when you notice where warnings actually appear. ChatGPT’s disclaimer that it “can make mistakes. Check important info” sits right below the input field, yet I wonder how many people actually see it, and of those who do, how many take that advice. After all that effort to anthropomorphise the interface and create connection, a small grey disclaimer hardly feels like genuine transparency.

Companies invest heavily in making AI feel more human and trustworthy through conversational interfaces, while simultaneously expecting users to maintain critical distance through small warnings and occasional guardrails. The result is that these become another form of false reassurance allowing companies to claim plausible deniability while essentially paying lip service to transparency and trust.

Scaffolding over crutches

This reveals a fundamental flaw in the current approach: companies are asking users to bear the weight of responsible use while providing tools designed to discourage the very scepticism that responsible use requires. This not only contradicts established UX principles about designing for your users’ actual capabilities and contexts, but also ignores how trust is actually formed.

In fact, trust isn’t built through one single intervention, but rather systematically across many touchpoints. So how might we approach this problem differently?

A first step, I believe, would be ditching the seamless approach and rethinking friction. What if, instead of treating transparency as friction to reduce, design treated it as a capability to build upon? Instead of hiding complexity to fast-track utility, interfaces could gradually build users’ ability to work effectively with AI systems — eventually teaching them not only how to use them responsibly, but when to trust them as well.

As a parallel, think scaffolding versus crutches. Current AI systems function more like crutches — they provide so much support that users become dependent on them. Users lean on AI for answers without developing the skills to evaluate them, and much like actual crutches, this helps in the moment but prevents underlying capabilities (critical thinking, in this case) from getting stronger over time.

Designing transparency as scaffolding

In a scaffolding model, by contrast, AI systems could be much more flexible and adaptable, surfacing transparency and guidance based on the user’s developing skills and the stakes of the decision.

For example, we could imagine having different modes. A “learning mode” could surface uncertainty more explicitly within responses — alerts prompting users to verify claims the AI cannot back up directly, or inviting users to take answers with a grain of salt. This could happen in expandable sections so as not to intrude on the conversation flow, and as users interact with these components, the interface could gradually reduce explicit prompts while maintaining the underlying safeguards.
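As a rough sketch of how such a “learning mode” might decide how loudly to flag a claim, consider the following. The names `UserProgress`, `prompt_style` and the threshold of five engagements are all hypothetical choices for illustration, not a description of any existing product.

```python
from dataclasses import dataclass

# Assumed cut-off after which explicit alerts fade into quieter notes.
ENGAGEMENT_THRESHOLD = 5

@dataclass
class UserProgress:
    # Hypothetical counter: how often the user has opened or acted on a verification prompt.
    prompts_engaged: int = 0

def prompt_style(progress: UserProgress, claim_is_backed: bool) -> str:
    """Decide how a claim is flagged in the hypothetical learning mode."""
    if claim_is_backed:
        return "none"              # the AI can point to a source, nothing to flag
    if progress.prompts_engaged < ENGAGEMENT_THRESHOLD:
        return "inline_alert"      # explicit prompt asking the user to verify
    return "expandable_note"       # quieter, but the safeguard never disappears

print(prompt_style(UserProgress(prompts_engaged=0), claim_is_backed=False))
print(prompt_style(UserProgress(prompts_engaged=8), claim_is_backed=False))
```

The design choice worth noticing is the last branch: an experienced user sees fewer interruptions, yet an unbacked claim is never shown without some marker.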

For high-stakes decisions, the interface could default to maximum transparency, for example by requiring users to verify factual claims with external sources before accessing final outputs. Visual indicators could distinguish between trained knowledge, recent search results, and generated examples, helping users understand where information comes from.
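One way to picture these two ideas together is a small data model where every claim carries a provenance label and a high-stakes output stays locked until the factual claims are verified. This is only a sketch: `Provenance`, `Claim`, `badge` and `can_release` are hypothetical names, and treating everything except generated examples as a “factual claim” is an assumption made to keep the example short.

```python
from dataclasses import dataclass
from enum import Enum

class Provenance(Enum):
    # The three sources named in the text above.
    TRAINED_KNOWLEDGE = "trained knowledge"
    SEARCH_RESULT = "recent search result"
    GENERATED_EXAMPLE = "generated example"

@dataclass
class Claim:
    text: str
    provenance: Provenance
    user_verified: bool = False  # set once the user checks an external source

def badge(claim: Claim) -> str:
    """Text stand-in for a visual indicator shown next to the claim."""
    return f"[{claim.provenance.value}] {claim.text}"

def can_release(claims: list[Claim], high_stakes: bool) -> bool:
    """Low-stakes output is always shown; high-stakes output waits for verification."""
    if not high_stakes:
        return True
    # Assumption: generated examples are labelled as such rather than verified.
    factual = [c for c in claims if c.provenance is not Provenance.GENERATED_EXAMPLE]
    return all(c.user_verified for c in factual)

claims = [
    Claim("Claim A", Provenance.SEARCH_RESULT),
    Claim("Claim B", Provenance.GENERATED_EXAMPLE),
]
for c in claims:
    print(badge(c))
print(can_release(claims, high_stakes=True))
```

In a real interface the badge would be a visual treatment rather than a text prefix, and what counts as “high stakes” would need its own careful definition.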

This approach would treat AI as temporary support that builds user capabilities rather than replacing them. Instead of optimising for immediate task completion, scaffolding design would foster long-term competence by helping users develop verification habits and critical thinking skills.

A trade-off worth making

Much of this goes against conventional product design principles around maximising ease of use. Adding these steps and indicators might seem like deliberate obstacles to user engagement… because they are, but that’s the point.

The friction introduced in this case would serve a different purpose than arbitrary barriers: it’s protective and educational rather than obstructive. If designed mindfully, friction can help users treat AI tools as scaffolding rather than crutches, by developing the judgment skills needed to work safely with these systems.

That conversation with Claude taught me something crucial about the gap between how these systems are presented and what they actually are.

We face a choice: immediate utility at the cost of our critical thinking, or design that builds people up rather than making them dependent, with some friction as the price of maintaining our ability to think independently. The path forward isn’t avoiding AI, but demanding better design that teaches us to use these tools wisely rather than depending on them entirely.

Footnotes

¹ I’m aware that my example here is a pretty silly one compared to the amount of misinformation, bad advice and just factually incorrect tidbits people are potentially exposed to every day through these interactions. But aha moments work in mysterious ways.


Building trust in opaque systems was originally published in UX Collective on Medium.
