AI interface: When intelligence outgrows its container

How AI redefines user interfaces, and why the chat box gets it wrong

In 1989, Alan Kay published User Interface: A Personal View, building on Marshall McLuhan’s idea that “the medium is the message.” A medium doesn’t just carry information; it reshapes how we think. To make sense of a message, we first have to internalize the medium that carries it.

An interface, then, is a cognitive framework.

Today, AI interfaces are shifting fast: from chat to voice, from canvases to agent-driven cross-tool spaces. As models converge in capability, the real differentiation has moved to the interface layer. The interface is no longer just a channel. It is the key that unlocks technology, translating complexity into affordances and shaping the flow of human attention.

The collapse of interaction

Before large language models, our typical path to finding answers online looked something like this: break your question down into keywords (like pizza, recipe, tutorial) and feed them to a search engine.

Then you wade through a long list of results, browsing page by page, clicking on a few promising links to read carefully, and finally mentally stitching those fragments together into your own version of the answer.

In contrast, with conversational AI products, I simply say: “Give me the perfect pizza recipe and instructions,” and the AI immediately serves up a curated answer. If it’s not quite right, I add a couple more sentences, the AI continues to refine or rewrite, and soon I’ve iterated to a final answer.

So with AI’s intervention, we can see two major shifts in product interaction paradigms:

From command-based to intent-based

Traditional interfaces relied on a turn-taking loop: input → output → user judgment → next command. Now, instead of issuing step-by-step instructions, the user states the goal, and the system handles the process behind the scenes.

From process-driven to outcome-driven

Interaction used to feel like a visible journey, where users accumulated knowledge and built judgment along the way. Today, much of that process is folded into the backend. Interaction becomes a cycle of prompting and refining around the result itself. The user’s main task is to evaluate the output, while the system dynamically connects the dots based on intent.

The unmoored chat box

Interestingly, most AI products have converged on the same choice: a chat box. The reason is obvious — chat feels natural, with virtually zero learning curve. Prompts unfold as back-and-forth conversation, offering high interactivity, tolerance for errors, low barriers to entry, and almost unlimited flexibility. It’s the perfect vehicle for mass adoption.

This is why Sam Altman wants to build ChatGPT into a Personal Life OS. In that narrative, conversation is intuitive to use and extends naturally across modalities, which makes it the answer to a vast range of everyday scenarios.

But there’s another, less visible factor: LLMs are inherently squishy. The same intent, placed in slightly different contexts, can produce different results. Therefore, ensuring that a setting always corresponds to stable, reproducible effects is a thankless task.

As a result, AI products often bury parameters deep in the system instead of exposing them as explicit controls.

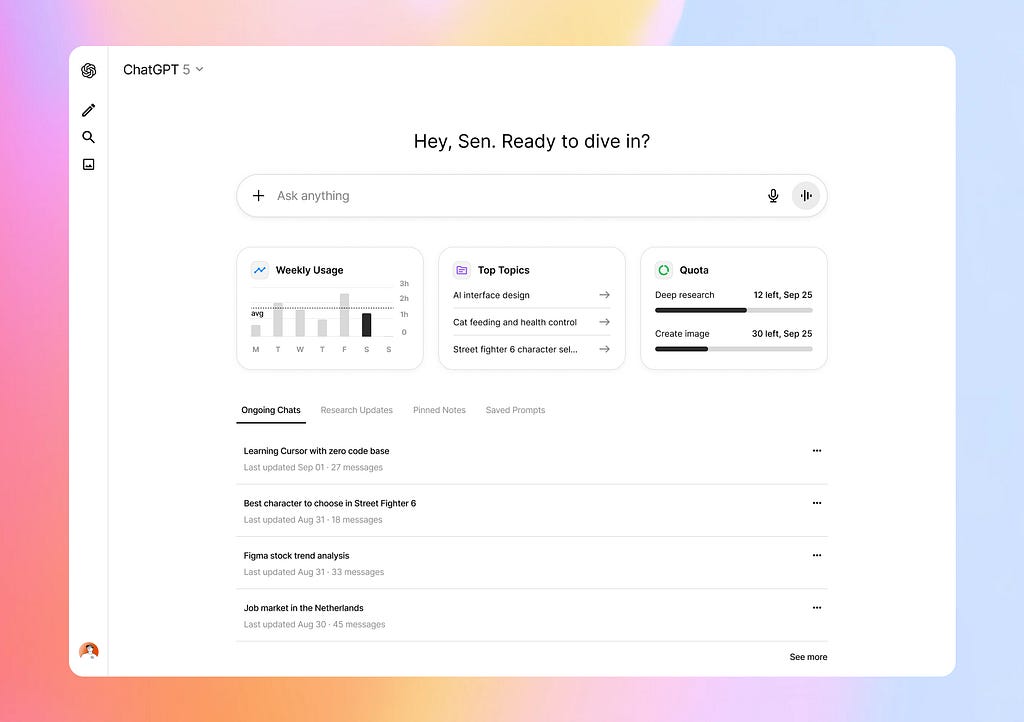

Take ChatGPT as an example. Beyond plain text, it can also produce tables, images, charts, code snippets, videos, and content cards.

One way to unlock these formats is to be explicit in the prompt — say, asking the AI to present the answer as a flowchart. But this puts the burden back on the user, who now has to master structured prompting and understand how content should align with different formats.

The other way is to let the AI decide: the system chooses the appropriate presentation based on the characteristics of the generated content. This points to a major trend in future interfaces: Generative UI.

In this model, AI doesn’t just generate content; it also chooses and renders the most fitting presentation container, merging output with interface so that the presentation itself is personalized.
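One way to picture this is a function that inspects the generated content and picks a container for it. The sketch below is a rule-based stand-in, not how any real product works: the container names (`table`, `code`, `steps`, `text`) and the heuristics are assumptions made for illustration, and a real system would more plausibly let the model itself make the choice.

```python
import re
from dataclasses import dataclass


@dataclass
class Rendered:
    container: str  # hypothetical container name: "table", "code", "steps", or "text"
    body: str


def choose_container(content: str) -> str:
    """Pick a presentation container from simple surface features of the content."""
    lines = [line.strip() for line in content.strip().splitlines() if line.strip()]
    if not lines:
        return "text"
    # At least two lines that each contain two or more pipes: treat as tabular.
    if sum(1 for line in lines if line.count("|") >= 2) >= 2:
        return "table"
    # Lines that look like source code (assumption: a few common keywords).
    if any(re.match(r"(def |class |import |function |const )", line) for line in lines):
        return "code"
    # At least half of the lines start with "1." or "2)": treat as ordered steps.
    numbered = sum(1 for line in lines if re.match(r"\d+[.)]\s", line))
    if numbered >= 2 and numbered >= len(lines) / 2:
        return "steps"
    return "text"


def render(content: str) -> Rendered:
    """Wrap the content together with the container chosen for it."""
    return Rendered(container=choose_container(content), body=content)
```

Under these assumed rules, a pizza recipe written as numbered instructions would land in the `steps` container without the user ever asking for a format, which is the shift in burden the paragraph above describes.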

But until Generative UI becomes reality, the chat box remains unmoored: users can neither predict nor reliably steer the form its output will take.

Category error

Dave Edwards, founder of Artificiality, offered a thought-provoking metaphor: most digital products fall into one of two categories, windows and rooms.

Windows: they connect users to external information and resources — think Tripadvisor, Airbnb, or Spotify.

Rooms: they provide spaces for work, collaboration, and creation — tools like Figma, Photoshop, or PowerPoint.

If we map AI users along a proficiency spectrum, there are beginners, intermediate users, and power users.

Conversational interfaces naturally cater to the first two groups. They brand the system as a “no learning required” product. Perfect for newcomers, right? But for power users, the chat box strips away control panels and leaves only an open-ended input field, so they compensate with prompts like this one:

Analyze this software licensing agreement for legal risks and liabilities.

We’re a multinational enterprise considering this agreement for our core data infrastructure.

<agreement>

{{CONTRACT}}</agreement>

This is our standard contract for reference:

<standard_contract>{{STANDARD_CONTRACT}}</standard_contract>

<instructions>

1. Analyze these clauses:

- Indemnification

- Limitation of liability

- IP ownership

2. Note unusual or concerning terms.

3. Compare to our standard contract.

4. Summarize findings in <findings> tags.

5. List actionable recommendations in <recommendations> tags.</instructions>

That’s why you’ll see one user writing a 300-word structured prompt with XML tags, while another asks “How many shapes of McNuggets are there?”
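Prompts like the one above are effectively templates, with `{{CONTRACT}}` and `{{STANDARD_CONTRACT}}` acting as slots. Here is a minimal sketch of how a power user might fill those slots programmatically. The helper name `fill_prompt` is hypothetical, and the template is a shortened copy of the prompt, kept only to show the mechanics.

```python
import re

# Shortened copy of the prompt above; the placeholders are the ones it uses.
TEMPLATE = """Analyze this software licensing agreement for legal risks and liabilities.

<agreement>
{{CONTRACT}}
</agreement>

<standard_contract>{{STANDARD_CONTRACT}}</standard_contract>"""

# Matches {{NAME}} where NAME is upper-case letters and underscores.
PLACEHOLDER = re.compile(r"\{\{([A-Z_]+)\}\}")


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace every {{NAME}} placeholder; fail loudly if a value is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)
```

Calling `fill_prompt(TEMPLATE, {"CONTRACT": "...", "STANDARD_CONTRACT": "..."})` returns the finished prompt text. That this kind of scaffolding has to live outside the chat box at all is the point: the interface offers no slot for it.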

The problem is that many AI products are designed as “windows” with a clean input box that seems able to carry any conversation. But in terms of technical capability, they’re absolutely “rooms” capable of supporting deep creation.

And here lies the category error: users with complex needs require explicit functions and sufficient control, yet find themselves trapped in a “window.”

So people improvise:

They buy prompt templates.

They use Raycast to manage and trigger prompts.

They spin up endless new chats every day and stack them in the sidebar.

They save outputs into notes — then bring them back into the chat again.

The intelligence isn’t limited; the interface is what cages it.

As AI systems mature, the real question is not simply which interface will dominate, but how we will negotiate agency between human and machine. That balance, between outcome and process and between freedom and control, will define the next era of design.

📖 Further reading

- User Interface: A Personal View — Alan Kay

- AI: First New UI Paradigm in 60 Years — Jakob Nielsen

- Squish Meets Structure — Maggie Appleton

- The Design Illusion of LLMs — Dave Edwards

- Chat is: the Future or a Terrible UI — Luke Wroblewski

- Conversational Interfaces: the Good, the Ugly & the Billion-Dollar Opportunity — Julie Zhuo

AI interface: When intelligence outgrows its container was originally published in UX Collective on Medium.
