Keeping UX human: Balancing the tradeoffs of AI in design

AI is increasingly being adopted into modern design workflows. AI tools that help with content generation, ideation, and even prototyping are evolving rapidly and becoming essential to every part of the design process. Using them increases the efficiency of design teams, big and small, and enhances how we, as designers, create, think, and solve problems.

However, AI also has its downsides. A study that interviewed 20 UX professionals found varying degrees of concern about integrating AI into the design process. While some believed there was nothing to worry about, others argued that UX design should be user-centered and that letting AI take a central place in the design process could produce outcomes that don’t align with users’ needs and goals.

Personally, I think AI is here to stay, and it can be a great tool for increasing productivity in the design process, but only if the user-centric nature of UX design is maintained. At its core, UX must always be ‘human,’ and in the ever-evolving landscape of AI and design, designers must adopt intentional practices to counterbalance the tradeoffs that come with using AI.

The double-edged sword of AI in UX

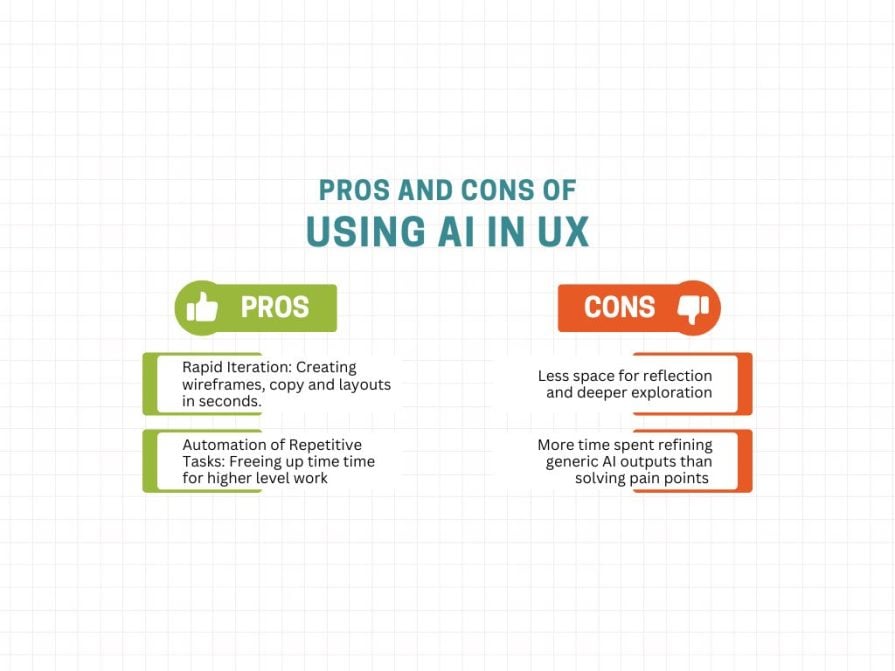

AI tools can shorten the distance between idea and product, but they can also flatten creativity and create distance between the designer and the user. To achieve the right balance, we need to understand what AI is good for in design as well as when it should take a backseat — in other words, where it does and doesn’t belong.

Some benefits of using AI tools include rapid iteration and automation. AI tools for creating wireframes, copy, and layouts in seconds have made it faster for me to go from idea to prototype. I can create and test many iterations of an idea, then use that data to create the final product. Additionally, AI tools allow me to automate boring and repetitive tasks like creating multiple variants of UI components, creating mockups with placeholder content, and summarizing user research, saving me time I can invest elsewhere.

However, the value of iteration isn’t measured by how quickly an idea can be produced, but by the reflective process that leads to the final product. Understanding why an iteration is good, by assessing various wireframes, trying different copy, and experimenting with different layout options, is the kind of work that leads to truly great user-centric design.

Focusing instead on refining decent AI outputs takes away from the in-depth reflection that comes from addressing real pain points and prioritizing the user. That’s why initial layout creation and wireframing have ended up on my “never delegate to AI” list of tasks.

While I’m more than happy to delegate boring tasks like generating filler content and copy to AI, the layout and wireframe have a different level of importance, at least in my eyes.

See, content can be changed over time to better fit the project requirements, but the initial layout and wireframing are where the ideas really start flowing for me. That part of the process is where I ask myself key questions like: Who is the primary user? What are their goals and pain points? Can users immediately tell what this page is about? Can users find what they’re looking for within three clicks?

These questions make me really think about the user and how the product can best serve them, and they usually set the tone for the rest of the product. Leaving that to AI would take away a major part of my own design process.

AI is definitely affecting UX, but not how you think

AI has tradeoffs, and they aren’t just theoretical. On the human side of UX design, AI is negatively impacting three main aspects in today’s design landscape.

Real user insights

I’ve seen AI used to automate parts of the research process; hell, I’ve been part of the problem. Tasks like summarizing user interviews and organizing user feedback are so easy when you have an AI tool that lets you input data and quickly analyze it. However, even though this speeds things up, it sometimes strips away much of the context that helps me and other designers understand the user’s motivation.

For example, while designing a mobile banking app a while back, my team and I ran usability tests on an early version of the prototype with a small pool of five participants, at the request of some stakeholders. It was a bit too early in the process, but they wanted to know how users might react to the product and, I suspect, to feel more confident in their investment. So we obliged.

I had AI summarize the test transcripts and asked it to outline key pain points and patterns that we could work from, page by page. The AI was somewhat helpful, but when I went back through the sessions myself, I found a small issue in the flow for sending money to another user.

Part of the test involved sending (obviously fake) money to different users and making sure there was no confusion. Participants had no trouble transferring money to the first recipient, but once a second recipient came into the mix, many of them took a bit longer because they had to go back and check that they were sending money to the right person.

That’s when I realized we had forgotten to include confirmation screens. It was a basic mistake that probably would have been caught later in the design process, but the AI didn’t notice it at all, let alone flag it. Why? Because it can’t pick up on mannerisms like hesitation the way humans can.

So now, while I still use AI to analyze some basic information first to get a broad overview, I always make sure to review user interviews myself, because there’s always going to be something I catch that AI misses.

Deep, original thinking

When I’ve used AI to generate wireframes or layouts, for example, I’ve found that the results always look good but tend to be the same. That’s not always a problem, since established layouts exist for a reason, but designs quickly become generic if you rely on these AI tools too much. It’s easy to accept such outputs as good wireframes or layouts without thinking about what actually makes them good for the user.

To deal with this, I put wireframing and layout creation on my “never delegate to AI” list, as mentioned earlier. The name of this list is a bit misleading, though; it doesn’t mean that I never use AI tools to create a wireframe. Instead, I do the work first: I determine the layout, draw out a wireframe, ask myself important questions, and come up with a couple of iterations. Then, and only then, do I use AI to polish some of my ideas and get the ball rolling faster. So it would be more accurate to call it my “never delegate to AI first” list.

Team connection

AI tools are great for ideas and brainstorming; in fact, I’d argue that’s the best way to use AI. However, team brainstorming sessions, where a UX team comes together to generate ideas collaboratively, can get pretty dry when everyone is just regurgitating AI outputs at each other. The team debates less and there’s less creativity. Instead of sharing different perspectives, everyone ends up with the same one: whatever the AI told them.

To deal with this, my last team lead banned electronic devices during all brainstorming sessions. Only the team lead had access to the presentation and the internet and could look things up as needed; everyone else used pen and paper to take notes or work through ideas. While I’m not sure this was the most effective approach, it did force all of us to actually think about the problem and contribute ideas before reaching for the crutch that is AI.

| Human aspect impacted | Why it matters | Outcome of using AI |
|---|---|---|
| User insight | For a product to solve a problem, there must be a genuine understanding of users and their needs, which can only come from listening to real users. | Using AI to automate tasks such as summarizing user research can strip away much of the context needed to understand users and their problems. |
| Deep thinking | In-depth analysis of user insights and slow reflection on user problems can lead to creative problem-solving and unique designs. | Using AI to generate quick outputs can produce viable solutions, but these solutions are often surface-level, generic, and disconnected from the user. |
| Team connection | Design sprints and other creative brainstorming activities are central to the ideation process in many design teams. | Using AI to handle ideation can make brainstorming less collaborative, leading to fewer shared insights and meaningful debates. |

Ultimately, the danger isn’t that AI will take our jobs. As tools created to perform specific tasks, AI systems lack the understanding of human psychology required to solve users’ problems. Instead, the danger is that uncontrolled use of AI undermines the foundational aspects that make UX design so human and meaningful: user insight, creativity, and teamwork.

Human first and AI second

Rather than rejecting AI in UX outright, it’s important to make good use of the efficiency these tools provide while preserving foundational design work. To that end, here are four main strategies for keeping UX as human as possible within design teams:

Prioritize human research

AI can be great for summarizing notes, transcripts, and survey responses, but these summaries shouldn’t replace actual user interviews and direct engagement with the users. User insights come from listening to users and their problems, not reading AI-generated bullet points of what the machine thinks users need.

Actionable steps:

- Include human evidence for all major decisions. Design decisions that will impact the user must be based on interviews, usability tests, user feedback, or designer analysis, not on AI output

- Keep an AI intervention log. Track when AI was used to gather insight, organize data, or summarize information, then have a team member review and validate that information

- Separate AI assumptions from human evidence. In your design notes, clearly state what came from AI and what came from reliable human research. This helps teams avoid making decisions based on unverified AI outputs

Hold AI-free sessions

To preserve creativity and collaboration in design teams, it’s important to make room for brainstorming and problem-solving sessions without AI. These sessions create space for deep thinking and creative problem-solving.

Actionable steps:

- Create a brainstorming schedule that balances human input and AI use when starting a new project:

  - Day 1: Computer-free brainstorming and ideation. The team is introduced to the project and establishes the problems and pain points, with no AI input

  - Day 2: AI can be used to organize and expand on the ideas from day 1. Smaller teams then turn these ideas into presentations for day 3. AI tools are useful, so they shouldn’t be eliminated entirely

  - Day 3: The team reviews these presentations, and everyone is encouraged to discuss each other’s work

  - Day 4: Tasks are allocated based on the various ideas. How this works depends on each team and how they do things

It’s also important to note that this doesn’t have to take four days; it could all happen in one day or stretch over a couple of weeks, depending on the team’s overall workflow.

Establish guidelines

Agreeing on team guidelines for AI use can go a long way toward maintaining the balance between its benefits and tradeoffs. A few core principles that all design teams should follow are requiring UX researchers to review all AI outputs, involving human judgment in all critical design decisions, and refining AI-generated content with real user insights. Guidelines like these also make designers more comfortable challenging AI outputs and more secure in their value relative to these tools.

Here are two checklists I’ve created that can serve as basic guidelines:

- Human review of AI outputs

  - [ ] Has this AI output been reviewed by a UX researcher or relevant team member?

  - [ ] Are there direct user insights or research supporting this output?

  - [ ] Have potential biases or errors in the AI output been identified?

  - [ ] Does this output align with accessibility, ethics, and design standards?

  - [ ] Has a human signed off on the final decision or refinement?

  - [ ] Are all changes from AI suggestions documented for transparency?

- AI decision checklist

  - [ ] Has this suggestion been validated with real user data?

  - [ ] Could acting on this output affect accessibility, ethics, or inclusivity?

  - [ ] Would a human expert challenge this recommendation if it were wrong?

  - [ ] Is this output being used as inspiration or for direct implementation?

  - [ ] Are risks and assumptions clearly documented?

  - [ ] Has the team discussed and agreed on the next steps?

Note that these checklists shouldn’t make up your entire guidelines; instead, treat them as jumping-off points for design teams to start establishing proper AI usage guidelines.

Encourage discussion

Who better to determine how, and to what extent, AI tools should be present in designers’ work than designers themselves? Creating spaces for discussion (e.g., workshops) where designers can openly discuss which tasks should or shouldn’t be handed over to AI opens the floor to meaningful conversation. UX involves designers collaborating to solve user problems; applying the same teamwork to this issue could surface unexpected insights.

A basic workshop could work as follows:

- Introduction: An introductory presentation on the importance of balancing AI use in UX, covering many of the points described in this article

- Solo brainstorming: Each participant lists the tasks that AI helps them with and ranks how heavily AI is involved in each one

- Discuss: The large group splits into smaller units. Each unit shares the tasks its members have written down and discusses them, then sorts the combined list into three categories: should always be human, can be AI-assisted, and can be fully automated with AI. Everyone should explain their reasoning, and each group should write up its list and rationale for the workshop organizers to collect and analyze later

- Debate: The whole team reconvenes for a debate, led by the workshop leader, in which one task is analyzed in terms of ethics, creativity, and user impact. For example, given the task of wireframing, designers are asked whether using AI here could harm users or introduce bias, whether it would limit creativity, and how it could affect the user experience

- Actionable suggestions: Ask designers for potential next steps, guidelines that could be implemented, and who should be responsible for making sure these guidelines are followed

Ideally, insights from these workshops should be recorded for future use in shaping AI use guidelines and monitoring AI use across different projects. Holding regular check-ins with designers throughout the year to discuss what is and isn’t working will also keep the conversation going and help further improve policies.

Conclusion

Designers shouldn’t avoid using AI tools in their design processes; they have too many advantages to ignore. Used effectively, these tools can help us process large amounts of data, accelerate ideation, and cycle through multiple ideas efficiently in a short amount of time. However, overusing AI risks creating hollow products that fail to reach the hearts of our users. To mitigate AI’s impact on the human aspects of UX design, it’s important to keep every design as human as possible by applying the key strategies described in this article.

While these strategies are geared toward design teams, they also apply to individual designers, who can prioritize human research, carve out AI-free sessions, establish guidelines, and think about the appropriate way to use AI in their workflows. Ultimately, UX designers should treat AI tools as copilots, tools for exploring multiple ideas and options more efficiently, while relying on user insights, creativity, and teamwork to create solutions that solve user problems.

The post Keeping UX human: Balancing the tradeoffs of AI in design appeared first on LogRocket Blog.
