How to use AI as a sparring partner in your ideation process

Over the past six months, I’ve worked with 18 product teams on customer discovery, idea generation, and testing. Each of these teams used various artificial intelligence (AI) tools and large language models (LLMs), but three of them massively outperformed the other 15.

The difference wasn’t access to better tools. The top teams used LLMs to sharpen their thinking and move faster on decisions they already understood. The other teams leaned on AI-generated answers without applying their own judgment.

As a result, the struggling teams produced more output but less impact. They generated ideas quickly, but lacked a clear bar for quality and exhausted themselves iterating on weak assumptions.

AI can accelerate innovation, but it doesn’t replace product leadership. Strong outcomes still depend on a PM who can frame problems, evaluate tradeoffs, and decide what good looks like.

Here’s what the top three teams did differently.

The core principle: Solo first, AI second

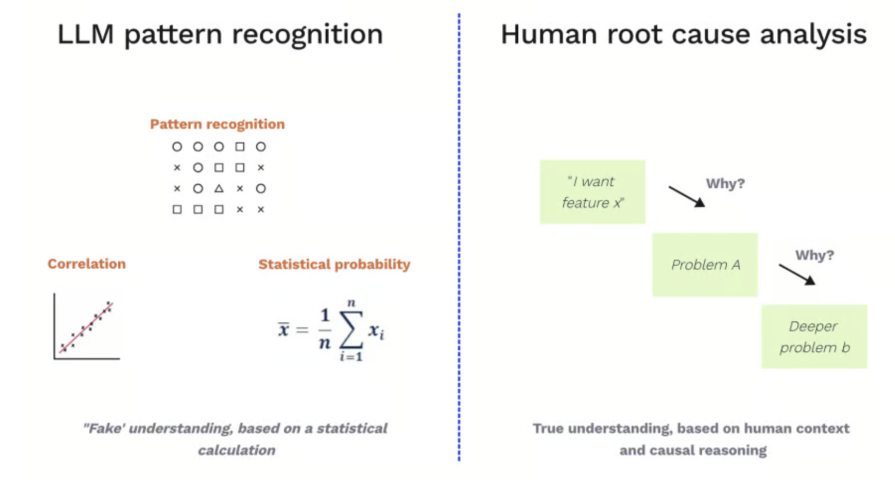

LLMs are statistical pattern matchers, not thinkers. They don’t have lived experience, emotions, or the ability to develop product sense.

They’ve never felt a user’s frustration with a broken workflow or navigated the constraints of your specific market. LLMs excel at synthesizing patterns from what already exists and producing an average of past solutions.

That’s rarely what you want when you’re searching for differentiated solutions. The top teams understand this and design their workflows accordingly by:

- Using their own brains first, and then using AI as a sparring partner

- Creating an environment that lets LLMs do their best work

- Having clear mental models for deciding what good looks like

When teams outsource ideation to AI, they weaken their product judgment over time. Generating ideas yourself, including the bad ones, builds pattern recognition for what works in your specific context and makes future decisions stronger.

The four-phase ideation process

To make AI useful during ideation, you need more than better prompts. You need a clear process. This four-phase approach shows how to combine your judgment with LLMs in a way that produces better ideas, not just more of them.

Phase 1: Prerequisites

Before generating a single idea, align on the fundamentals. This context should be explicit and shared with both your team and any AI tools you use:

- Vision, business goals, and product metrics

- Ideal customer profile (ICP) definition

- Explicit boundaries (where you won’t play, tactics you won’t use, budget constraints)

Skipping this step leads to ideas that look compelling but fail basic viability checks.

AI quality depends on input quality. If your vision, strategy, or constraints are unclear, your outputs will reflect that. Treat this context as non-negotiable setup work, not overhead.

Phase 2: Build deep problem understanding

With your prerequisites in place, compile your knowledge and evidence into a “must-read” document ahead of the ideation session. LLMs are good at making sense of unstructured data, but they’re also notorious for pulling out the wrong insights or even fabricating quotes or entire participants.

Most teams dump unstructured data into an LLM and expect magic.

The top teams structure their qualitative data obsessively, for example:

- /interviews
  - /[ICP_segment]
    - /[participant_name]
      - notes.md (my handwritten insights from the call)
      - transcript.md (full transcript for context)
- /test_results
  - /[test_name]
    - /[participant_name]
      - notes.md
      - results.md

If you’ve used AI note-taking tools like Dovetail, Fathom, or Granola, you might have noticed that the “key takeaways” the AI pulls out usually aren’t the most important things to note.

Because of this, I train teams to always take notes by hand and then spend time after each call reviewing those notes with the teammates who were on it.

Your notes.md captures what you found interesting. Instruct the LLM to treat these notes as the primary signal and to use transcripts only for context and direct quotes.
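The notes-first priority can also be made explicit in the file you hand to the LLM. Below is a minimal Python sketch, assuming the `/interviews/[ICP_segment]/[participant_name]/` layout shown earlier lives under a local root folder. `build_preread` is a hypothetical helper, not a feature of any particular tool: it places every notes.md under a "primary signal" heading and appends the transcripts afterward under a "context only" heading.

```python
from pathlib import Path


def build_preread(root: Path) -> str:
    """Hypothetical helper: bundle notes (primary signal) ahead of transcripts (context).

    Assumes root/interviews/<segment>/<participant>/ folders, each holding
    notes.md and, optionally, transcript.md.
    """
    notes_sections = []
    transcript_sections = []
    # sorted() keeps the output order stable across runs and operating systems
    for participant_dir in sorted((root / "interviews").glob("*/*")):
        if not participant_dir.is_dir():
            continue
        label = f"{participant_dir.parent.name} / {participant_dir.name}"
        notes = participant_dir / "notes.md"
        transcript = participant_dir / "transcript.md"
        if notes.exists():
            notes_sections.append(f"### {label}\n{notes.read_text(encoding='utf-8').strip()}")
        if transcript.exists():
            transcript_sections.append(f"### {label}\n{transcript.read_text(encoding='utf-8').strip()}")

    # Notes come first and are labeled as the primary signal; transcripts follow
    # and are labeled as a source of context and direct quotes only.
    return "\n\n".join(
        [
            "## PRIMARY SIGNAL: team notes",
            *notes_sections,
            "## CONTEXT ONLY: transcripts (use for direct quotes, not new insights)",
            *transcript_sections,
        ]
    )
```

Point `build_preread` at your research folder, save the result as a Markdown file, and include that file in the LLM's context. The same pattern would extend to `/test_results` by swapping the folder and file names.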

Tools that work:

- Notion AI

- Cursor

- Claude Code + local files

- Windsurf

The specific tool matters less than the structure you use. Clear organization and explicit signals produce better outputs.

LLMs excel at tasks like compiling digestible pre-reads from your qualitative data, surfacing patterns across interviews, and pulling exact quotes that correspond to the specific opportunities or pain points you want to ideate on. However, you still need to decide what matters and critically check the output.

Phase 3: Generate ideas (solo, then amplified)

Teresa Torres, a leading product discovery coach, frequently cites research showing that individuals working alone tend to generate more diverse ideas than groups brainstorming together. Six people ideating solo for an hour consistently outperform the same six people spending that hour brainstorming in a room.

I design ideation sessions around that insight.

The process I use:

- Every participant blocks at least two hours for solo ideation

- Generate your first five to 10 ideas without AI

- Only then introduce an LLM to:
  - Challenge assumptions and surface gaps in reasoning
  - Suggest adjacent approaches that may have been overlooked
  - Generate variations on the strongest themes

Setting up your LLM subagent:

Dedicated LLM subagents work well here because ideation is a recurring task that benefits from persistent context.

I help teams create ideation agents with:

- Full business and product context from the prerequisites phase

- Access to customer research artifacts

- Explicit evaluation criteria that spell out what matters most, such as impact, confidence, effort, or risk

The clearer the guidance, the better the output.

This isn’t a general-purpose LLM. It’s a junior (slightly unreliable, but funky) team member who’s been working at your company for years.
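One way to keep the business context and evaluation criteria consistent between sessions is to generate the agent's instructions from a single source. This Python sketch assumes the Phase 1 prerequisites are stored as plain strings; `IdeationContext` and `build_agent_instructions` are hypothetical names, and the rendered text is simply what you would paste into the system prompt or agent definition of whichever tool you use.

```python
from dataclasses import dataclass, field


@dataclass
class IdeationContext:
    """Hypothetical container for the Phase 1 prerequisites."""
    vision: str
    business_goals: list[str]
    icp: str
    boundaries: list[str]
    # Ordered from most to least important, e.g. ["impact", "confidence", "effort", "risk"]
    criteria: list[str] = field(default_factory=list)


def build_agent_instructions(ctx: IdeationContext) -> str:
    """Hypothetical helper: render the shared context as an ideation agent's instructions."""
    lines = [
        "You are an ideation sparring partner for this product team.",
        f"Vision: {ctx.vision}",
        "Business goals:",
        *[f"- {goal}" for goal in ctx.business_goals],
        f"Ideal customer profile: {ctx.icp}",
        "Hard boundaries (never propose ideas that violate these):",
        *[f"- {boundary}" for boundary in ctx.boundaries],
        "Evaluate ideas using these criteria, in priority order:",
        *[f"{rank}. {criterion}" for rank, criterion in enumerate(ctx.criteria, start=1)],
        "Challenge assumptions and point out gaps; do not simply validate ideas.",
    ]
    return "\n".join(lines)
```

Listing the criteria in priority order gives the agent an explicit tiebreaker instead of leaving it to guess which tradeoff your team cares about most.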

Ideation agent in practice:

In the video below, I explain how I built and use my ideation subagent. It can run as a standalone agent that outputs a text file, or Claude Code can call it as one step in a multi-step workflow.

You can fork the agent for yourself here.

Phase 4: Evaluate and select

In the final phase, bring your team together to evaluate, converge, and decide what to test next. I find the following three exercises effective.

Comparison and clustering:

Start with one person presenting their ideas. Anyone with a similar idea briefly shares theirs so the group can determine whether they’re truly the same or meaningfully different. This step often generates new ideas as themes emerge and gaps become obvious.

Dot voting:

Give each person three dots. They can stack all three on one option or spread them across multiple ideas. And remember, this isn’t life or death. You’re not choosing what to build; you’re choosing what to test.
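Tallying the dots is simple arithmetic, but if votes are collected asynchronously you may want to enforce the three-dot rule automatically. In this hypothetical Python sketch, each person's ballot is a list of idea names, and repeating a name means stacking dots on that idea.

```python
from collections import Counter

DOTS_PER_PERSON = 3


def tally_dot_votes(ballots: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Hypothetical helper: count dot votes, highest total first.

    A repeated idea in one ballot counts as stacked dots.
    """
    totals = Counter()
    for voter, dots in ballots.items():
        if len(dots) > DOTS_PER_PERSON:
            raise ValueError(f"{voter} used {len(dots)} dots; the limit is {DOTS_PER_PERSON}")
        totals.update(dots)
    # most_common() sorts by count, highest first
    return totals.most_common()


ballots = {"ana": ["A", "A", "A"], "ben": ["B", "A", "C"], "cy": ["B", "B"]}
print(tally_dot_votes(ballots))  # [('A', 4), ('B', 3), ('C', 1)]
```

Ties come back in the order the ideas first received a dot (this is how `Counter.most_common` orders equal counts), so settle ties in discussion rather than by list position.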

The LLM check:

Add an LLM “voter” that has the same context as the team. Ask it to rank the top options and explain its reasoning. If it disagrees with the group’s consensus, treat that as a prompt to inspect assumptions, missing constraints, or unclear evaluation criteria.
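To make "disagrees with the group's consensus" concrete, you could compare the top few options on each side. This Python sketch assumes both rankings are already available as ordered lists of idea names, for example the group's from the dot-vote totals and the LLM's copied from its answer; `disagreements` and the `top_n=3` cutoff are illustrative choices rather than part of the process itself.

```python
def disagreements(
    group_ranking: list[str], llm_ranking: list[str], top_n: int = 3
) -> dict[str, set[str]]:
    """Hypothetical helper: find ideas that only one side placed in its top N."""
    group_top = set(group_ranking[:top_n])
    llm_top = set(llm_ranking[:top_n])
    return {
        # Ask: what does the team know that the LLM doesn't?
        "group_only": group_top - llm_top,
        # Ask: which assumption or constraint might the team have missed?
        "llm_only": llm_top - group_top,
    }


print(disagreements(["A", "B", "C", "D"], ["A", "D", "B", "C"]))
# {'group_only': {'C'}, 'llm_only': {'D'}}
```

Empty sets mean the two rankings agree on the shortlist. Anything in either set is a starting point for inspecting assumptions, missing constraints, or unclear evaluation criteria, not a verdict.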

Implementation checklist

When you implement an LLM subagent, review this checklist with your team to make sure it sets you up for the highest-quality outputs:

- A dedicated project space containing full business and product context

- Access to a complete, well-structured customer research repository

- Explicit evaluation criteria grounded in your product principles

- Clear instructions to challenge assumptions, not simply validate ideas

- Examples of past successes and failures from your specific context

Treat this checklist as a baseline. Skipping these steps increases the likelihood of an output that lacks real product judgment.

Final thoughts

LLMs amplify whatever you feed them. When you feed them shallow or incoherent thinking, you end up scaling poor thinking. But when you feed them structured thinking, clear context, and lots and lots of human judgment, they can become force multipliers.

That’s what showed up in the opening example. The top three teams didn’t succeed because they used less AI. They succeeded because they relied on their own judgment first and then used AI to sharpen it.

Featured image source: IconScout

The post How to use AI as a sparring partner in your ideation process appeared first on LogRocket Blog.
