How UX designers can influence AI behavior (and why they must)

This article examines how generative AI is changing UX design, moving the focus from granular elements to holistic system behavior. Given this shift, I argue that designers now need to understand the fundamental workings of AI, particularly how data is processed, to influence its inputs and outputs effectively.

By borrowing concepts from statistical mechanics, specifically microstates and macrostates, designers can better architect AI experiences that are helpful and intelligible.

Shifting our mindset as UX designers: From micro to macro

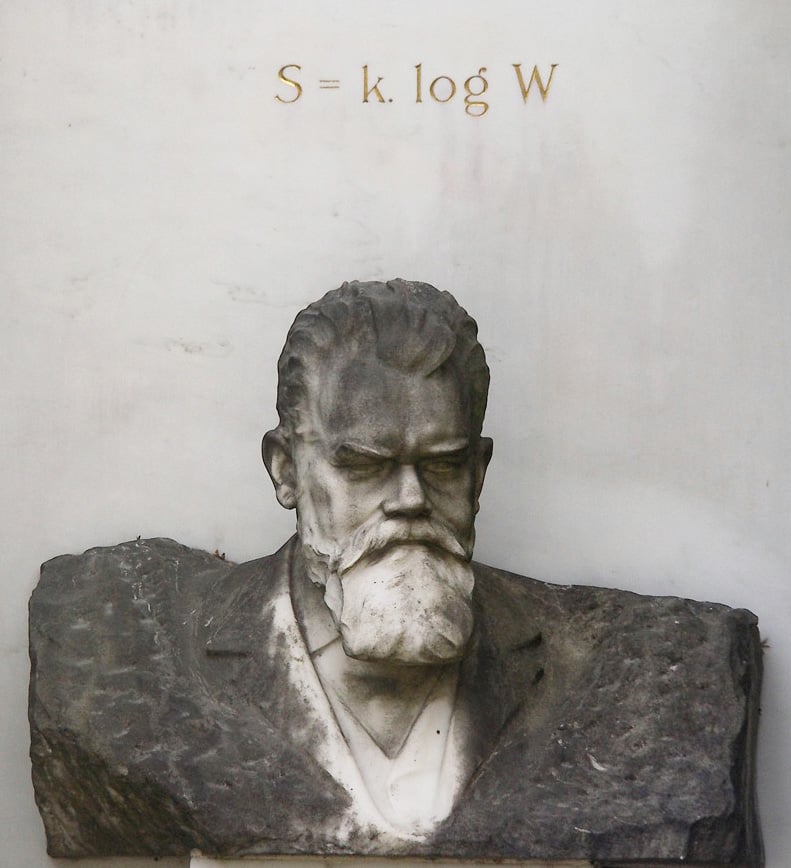

Ludwig Boltzmann rests in a Viennese cemetery. Carved on his gravestone is the equation S = k. log W.

It’s quite something to have carved over your final resting place. To most of us, it’s indecipherable. But it’s Boltzmann’s formula for entropy — a deceptively simple expression with far-reaching consequences. What the formula implies is that the predictable world we live in arises from the sheer probability of countless configurations occurring at an unseen, atomic level.

Here’s a simple way to think of it. You don’t understand a cloud of steam by plotting every water molecule’s path. You look at the cloud itself: its overall shape, its temperature, and how it moves. Boltzmann gave us a way to connect these levels: the microscopic chaos (microstates) and the patterns we observe (macrostates), like the cloud.
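To make the relationship concrete, here is a minimal Python sketch. It is an illustration, not anything taken from Boltzmann's own work: it assumes a toy system of coin flips, where a microstate is one exact sequence of heads and tails and a macrostate is simply the total number of heads. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def microstate_count(n_coins: int, n_heads: int) -> int:
    """Number of distinct head/tail sequences (microstates) that
    produce the macrostate 'n_heads heads out of n_coins'."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(w: int) -> float:
    """Boltzmann's formula S = k log W, where log is the natural log."""
    return K_B * math.log(w)


if __name__ == "__main__":
    n = 100
    for heads in (0, 10, 50):
        w = microstate_count(n, heads)
        print(f"{heads:>3} heads: W = {w}, S = {boltzmann_entropy(w):.3e} J/K")
```

In this toy model, the half-heads macrostate can be realized in vastly more ways than the all-tails one, which is why it is the pattern we would overwhelmingly expect to observe.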

This micro/macro distinction offers a useful way to think about designing experiences with generative AI. It gives us language to describe what we’re attempting. All the unseen code, the training data, the processing — that’s the teeming world of microstates. The user’s experience — whether it feels helpful or confusing — that’s the macrostate emerging from it all.

If you accept this view, then our job as designers has to shift. Our focus moves up a level. Instead of trying to pin down every possible output, every micro detail, we need to concentrate on architecting the desired macrostate. That means defining the overall patterns of behavior that help people, setting the ethical boundaries, figuring out how the system signals trustworthiness, and clarifying the goals it should pursue.

Why designers must influence AI behavior

Designers, product people, really anyone building digital tools today, are carrying an extra bit of anxiety around. It stems from the non-deterministic, rapidly evolving tech we’re working with. That may sound dramatic, but this technology is potentially explosive in the wrong hands.

There’s no rewinding. AI functionality already appears in everything from simple apps to enterprise systems. It presents challenges to our established UX thinking, which prefers to assume fairly predictable user flows and stable displays of data. If designers limit their focus to this presentation layer and user interface, they risk losing influence over how AI systems behave.

The danger is that critical decisions — defining how AI works, how it behaves — will be made without the user in mind. It’s just too easy to get carried away with the power of an AI system.

That’s why we, as designers, need to engage more with the mechanics of AI and steer this powerful technology toward more beneficial ends. Boltzmann’s idea fits surprisingly well here. UX becomes less about the surface and more about shaping the emergent behavior of complex systems.

And yes, Boltzmann faced fierce opposition in his time. Progress rarely comes without friction.

How UX can shape AI inputs, outputs, and behavior

We’ve seen that when tech is developed without a strong focus on the people who are using it, it often falls short, or worse, causes harm. AI systems, with their capacity for learning and generating complex outputs, magnify this risk. That’s why human-centered design is more vital than ever.

Designers contribute by ensuring AI systems are genuinely helpful. Through research and understanding user contexts, we can guide AI development towards solving actual problems effectively, avoiding implementations where the technology feels counterintuitive or obstructive.

Clarity is another key area. AI can be a black box. Designers can help define transparency standards: What is the AI doing? Why? How do we set the right user expectations?

What about ethics in all this? By asking questions about fairness, bias, privacy, and accountability, designers act as user advocates, helping build systems that aim for responsible operation.

We must shift attention from how information looks to how the system behaves — and aim to influence the macrostate. This means taking part in decisions about inputs, outputs, and behavior:

- Inputs: How does the system receive information and instructions? This includes designing clear user interfaces, but also understanding how different data sources affect outcomes. How is user information used for personalization, data framing, and user modeling? How much choice should we give users in customizing AI settings?

- Outputs: How does the system communicate its results? This involves the content and tone of AI responses, but also designing ways to show confidence levels, uncertainty, or sources of information.

- Behavior: How does the system learn and adapt? Designers can help shape effective feedback loops, guiding how the system learns to better achieve the intended macrostate and keeping personalization respectful and beneficial to the user.
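As one illustration of the outputs point, the following Python sketch shows how a response could carry its confidence and sources through to the interface. Everything here is an assumption made for the example: the `AIResponse` structure, the threshold values, and the label wording are hypothetical, and a real system would need a calibrated confidence signal, which this sketch simply takes as given.

```python
from dataclasses import dataclass, field


@dataclass
class AIResponse:
    """Hypothetical shape of an AI answer handed to the UI layer."""
    text: str
    confidence: float  # assumed to be a score between 0.0 and 1.0
    sources: list[str] = field(default_factory=list)


def confidence_label(confidence: float) -> str:
    """Map a numeric score to plain language. Thresholds are illustrative."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if confidence >= 0.8:
        return "High confidence"
    if confidence >= 0.5:
        return "Medium confidence"
    return "Low confidence, please verify"


def render(response: AIResponse) -> str:
    """Compose the user-facing message: answer, confidence, then sources."""
    lines = [response.text, f"[{confidence_label(response.confidence)}]"]
    if response.sources:
        lines.append("Sources: " + ", ".join(response.sources))
    else:
        lines.append("No sources available")
    return "\n".join(lines)


if __name__ == "__main__":
    answer = AIResponse("Your refund was issued.", 0.62, ["Order history"])
    print(render(answer))
```

The design choice worth debating is less the code than the thresholds and wording, which are exactly the kind of decision designers can own.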

New responsibilities and deliverables for AI UX

Moving from interface design to architecting AI behavior is a gigantic leap for us, yet possibly the most important step for the UX and design field. It requires a willingness to engage with even more complexity and improve our technical understanding:

| Current UX focus | Potential AI UX focus |
|---|---|
| User interface layout | System behavior and logic |
| Linear user flows | Dynamic scenarios and situations |
| Static content | Generative and variable outputs |
| Predictable interactions | Non-deterministic responses |

To support this expanded role, our methods of documentation and communication need to change. While traditional design artifacts remain useful, we will need to use and create new ones. As the scope of UX evolves, here is how our deliverables may reflect this shift:

| Current deliverables | Potential new deliverables |
|---|---|
| Wireframes and mockups | Prompt frameworks |
| Style guides | Transparency guidelines |
| User journey maps | Ethical review frameworks |
| Information architecture | AI behavior models |
| Usability tests | Variability tests |
| Content strategy | AI training data guidelines |
| Onboarding flows | AI expectation settings |
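To give one of these deliverables some shape, here is a rough Python sketch of what a variability test might look like: send the same prompt many times and measure how often the response meets a design requirement. This is one possible interpretation, not an established method. The `variability_test` function, the stub generator, and the example check are all hypothetical, and the stub merely stands in for a real non-deterministic model.

```python
import random
from typing import Callable


def variability_test(
    generate: Callable[[str], str],
    prompt: str,
    check: Callable[[str], bool],
    runs: int = 50,
) -> float:
    """Call the generator `runs` times with the same prompt and return
    the fraction of responses that satisfy the design requirement."""
    passed = sum(1 for _ in range(runs) if check(generate(prompt)))
    return passed / runs


def make_stub_generator(seed: int) -> Callable[[str], str]:
    """Stand-in for a non-deterministic AI system (an assumption for the
    example). It picks one of a few canned replies at random."""
    rng = random.Random(seed)
    replies = [
        "I can help with that. Here are the steps.",
        "I'm not sure, but here is my best guess.",
        "Done!",
    ]

    def generate(prompt: str) -> str:
        return rng.choice(replies)

    return generate


if __name__ == "__main__":
    generate = make_stub_generator(seed=7)
    # Example requirement: replies should not be terse one-word answers.
    rate = variability_test(generate, "Reset my password",
                            lambda reply: len(reply.split()) > 1)
    print(f"Pass rate: {rate:.0%}")
```

A team could then agree on an acceptable pass rate per requirement, which turns "non-deterministic responses" into something that can be reviewed like any other design criterion.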

What UX designers should do next

So, what should we be thinking now?

Anxiety is natural, but making AI that is genuinely useful to people depends on designers embracing this expanded role, even if the path isn’t smooth. It demands a shift in perspective. In a way, it’s like Boltzmann realizing that understanding the visible required grappling with the invisible.

He developed his theories while the Austro-Hungarian Empire was unraveling. We’re in a similar period of disruption.

For us, it means working closely with engineers, researchers, ethicists — always bringing the focus back to the human at the center. That’s the only way we’ll shape AI that helps, rather than harms.

Our task isn’t to control every output. It’s to architect the conditions — to design the probabilities — so the right macrostate emerges.

The post How UX designers can influence AI behavior (and why they must) appeared first on LogRocket Blog.
