10+ ways AI helps me validate ideas without interviews or surveys

The rise of AI has dramatically accelerated product development. Most people focus on how it speeds up design and development, but that’s only part of the picture. There are many more areas where AI can help you deliver faster. One of the most underrated is assumption validation. Testing hypotheses has never been easier.

Let’s take a look at a few ways AI can help de-risk your initiatives by validating assumptions early and often.

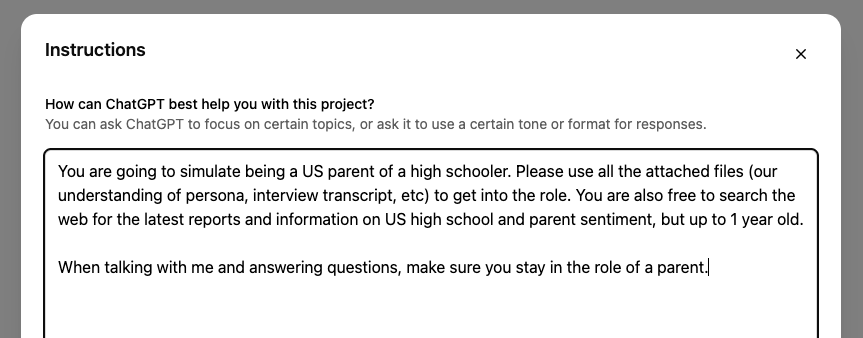

AI personas

One of my favorite ways to use AI is to build AI personas. I usually create a brand new project in ChatGPT and then:

- Provide as much context about the target group as possible

- Link to relevant research

- Upload transcripts from the last ~3 months of user interviews

As a result, I get a virtual representation of our target user — an always-on “ideal user” that I can query at any time. I ask for their opinions, identify their pain points, and determine whether my thinking aligns with their needs.

It’s an amazing tool — if you feed it quality data. The most reliable AI personas are based on loads of transcripts from actual user interviews.

A few of my favorite use cases of AI personas are:

Copy ideating and testing

If I’m not sure what the copy should say, I share my doubts with the AI persona and ask for recommendations. Out of 10 responses, one or two are usually worth refining. Look at this example:

Alternatively, I’ll give it an existing copy and ask, “What does this mean to you?” If the AI gets confused, chances are real users will too.

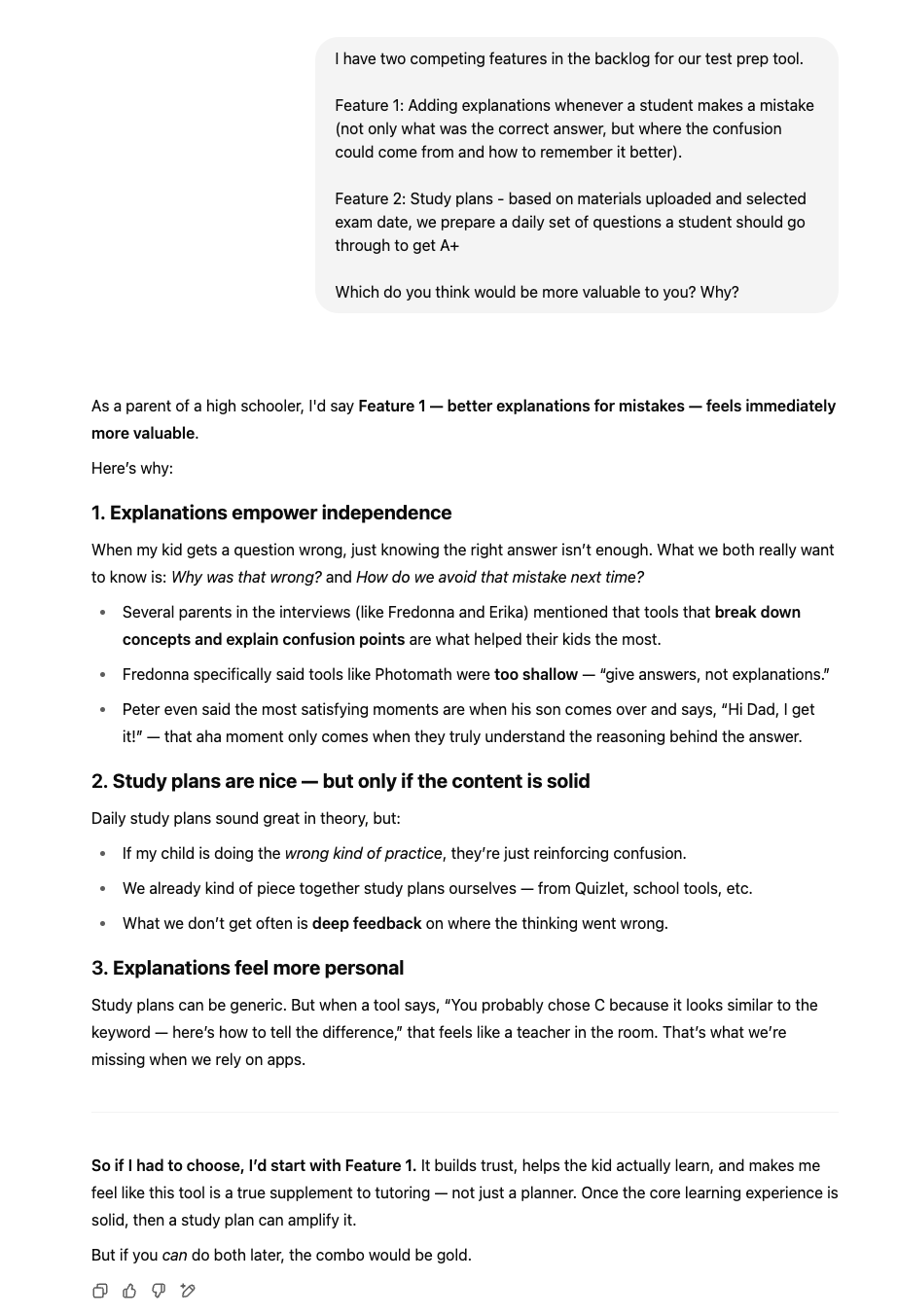

Prioritization

Sometimes I’ll show the AI persona our backlog and ask if the priorities make sense. Based on its feedback, I reconsider the priorities:

Exploration

I ask open-ended questions like:

Exploratory questions can trigger hallucinations, so I treat the answers as inspiration rather than definitive insights. Still, they often reveal fresh angles worth exploring.

Validation

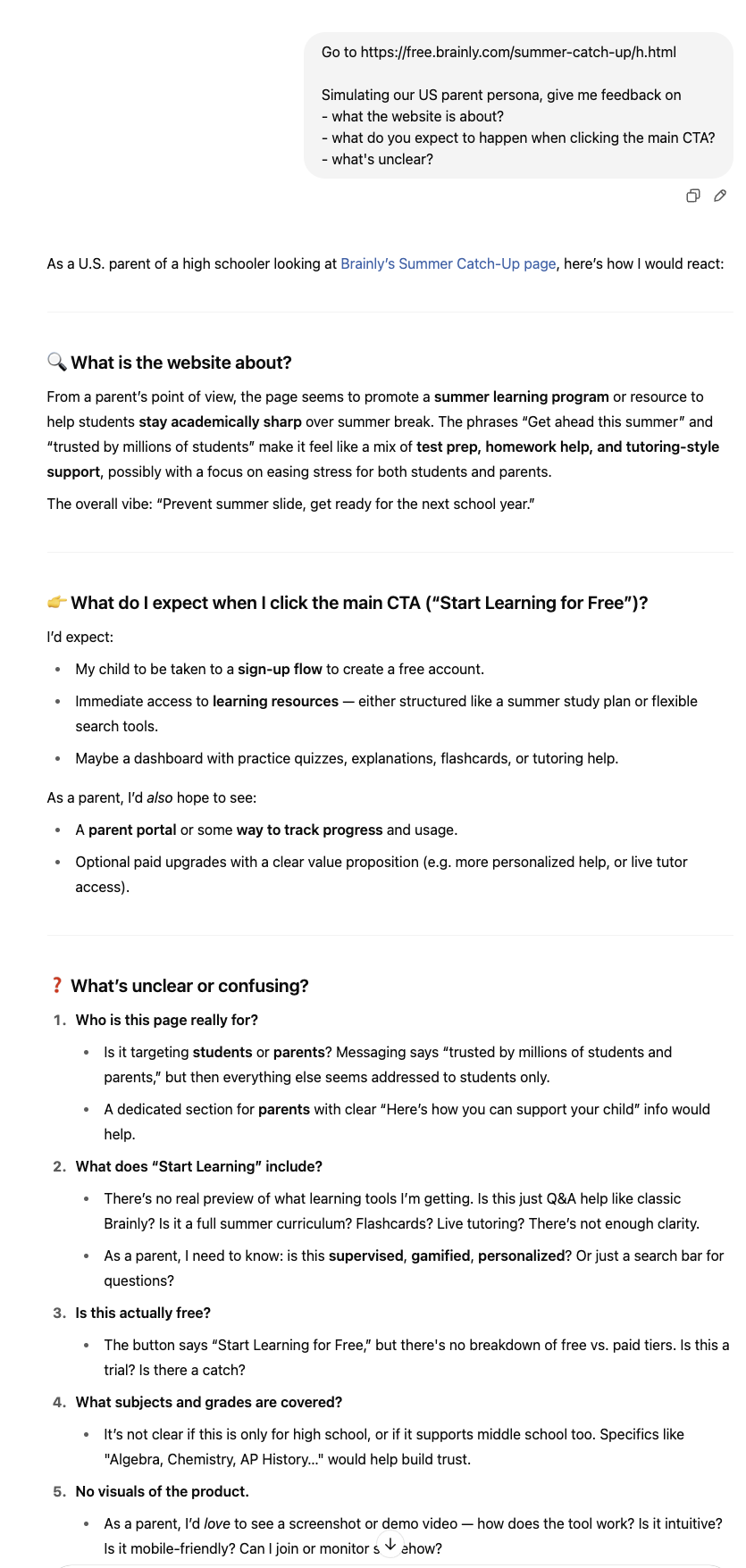

When I have an early concept or design, I always run it by the AI persona. It’s not a final decision-maker, but it’s a great way to get early feedback before involving users.

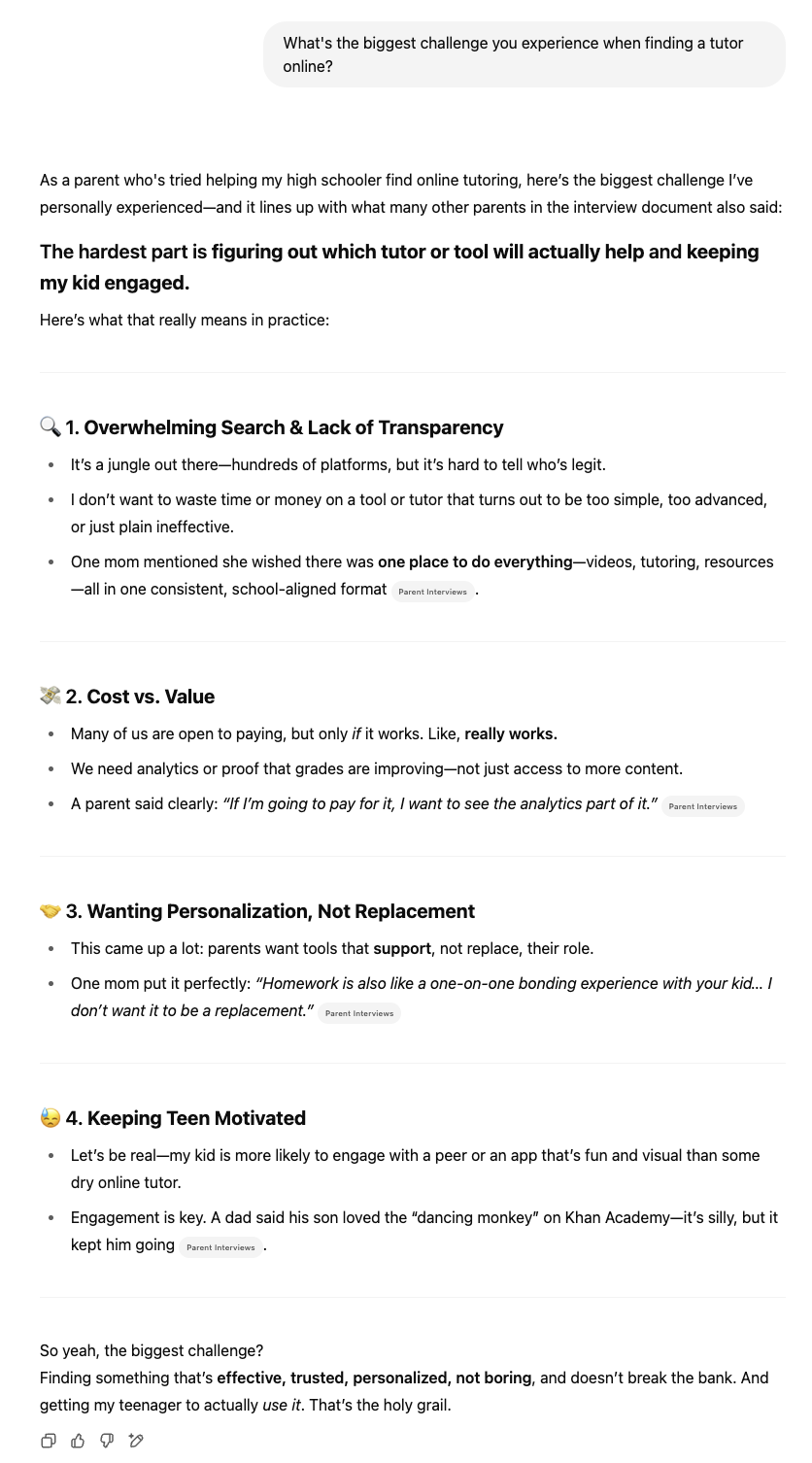

For example, when we were building a new landing page for parents, we weren’t sure how clear it was for target users. We asked our AI persona a few questions about what it thinks the website is about and what’s unclear:

It doesn’t replace actual user testing, but it provided us with numerous points of improvement and inspiration within seconds, allowing us to proceed to user testing with an already polished version.

Desk research

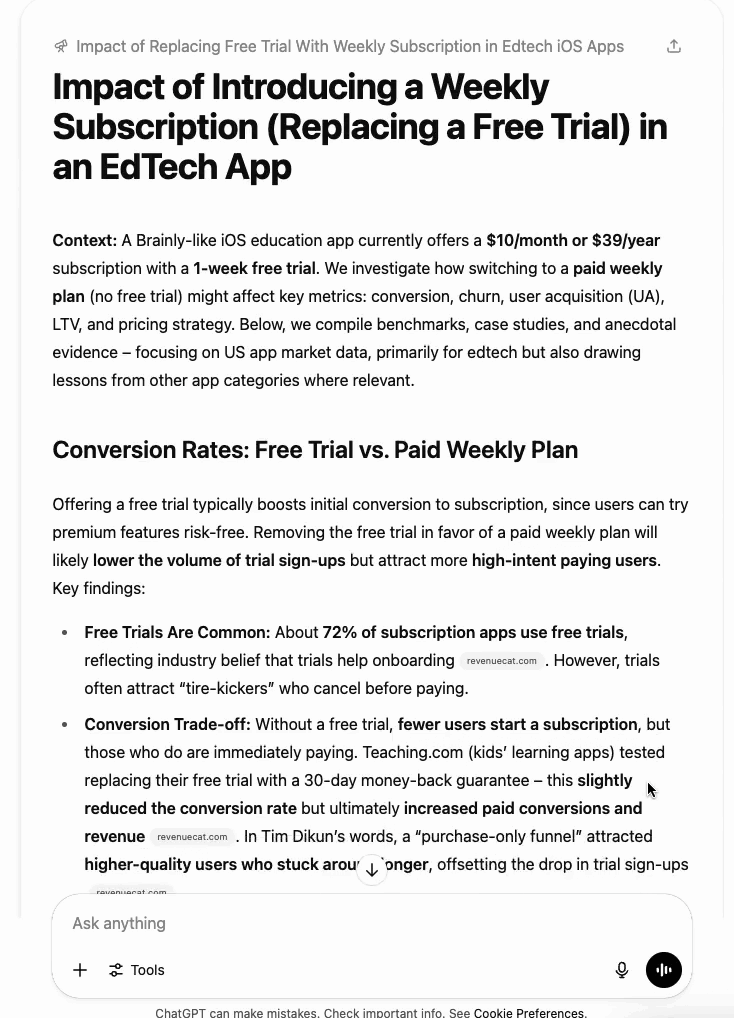

I used to hate desk research — hours spent googling reports and trends. But OpenAI’s Deep Research capability changed that.

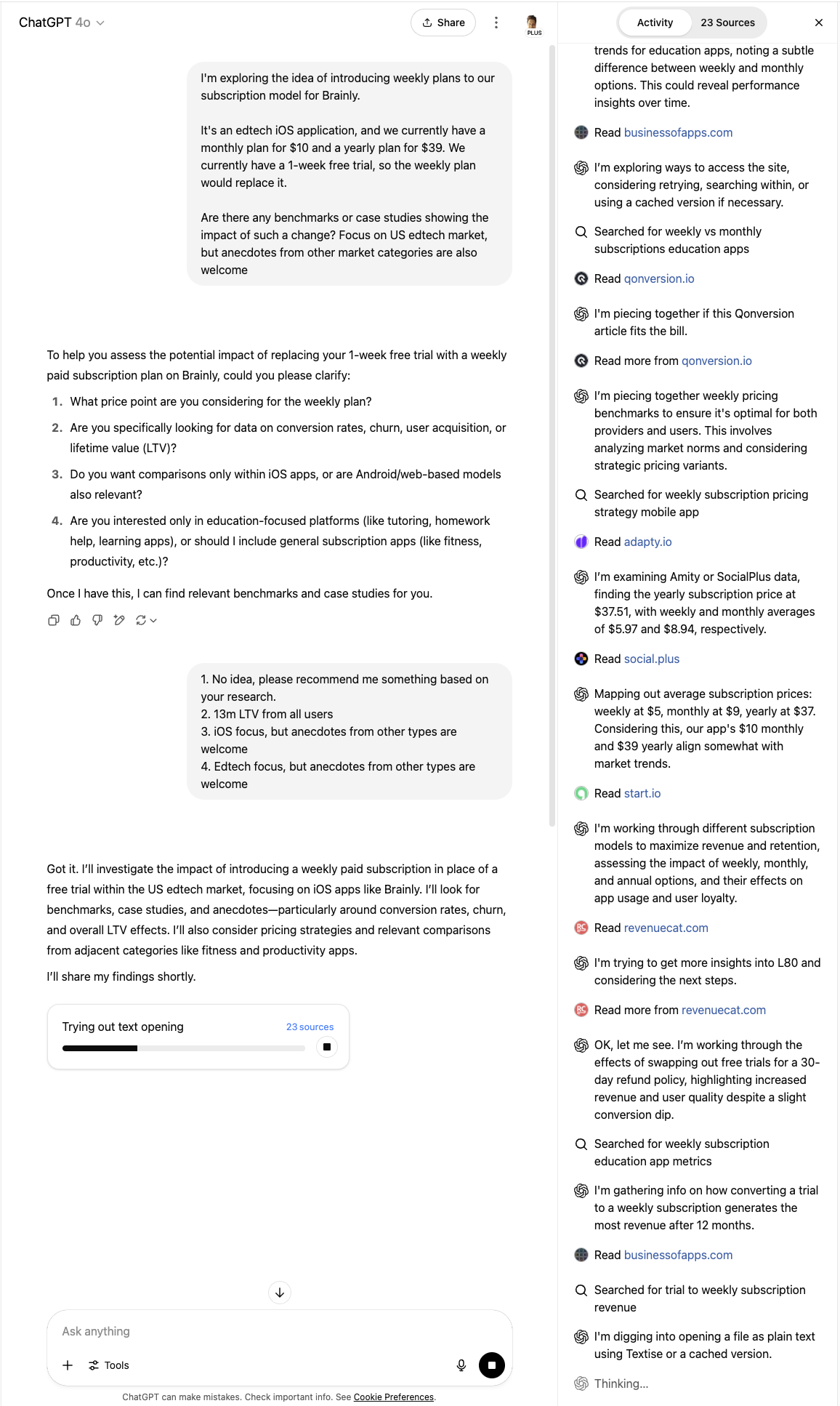

For example, recently we saw a trend where more apps have opted out of free trials in favor of weekly plans. It sounded interesting, but we didn’t have much grounding to figure out if that experiment even makes sense. So I used Deep Research mode.

All you need is a short but precise prompt. If more input is needed, GPT in research mode asks follow-up questions, so don’t worry:

Now, I use GPT as a desk research assistant. It doesn’t just spit out an answer in five seconds — it takes time to analyze multiple sources and synthesize something insightful:

Such a report would take me ~20 hours to complete. GPT did that in 7 minutes.

Survey results interpretation

Surveys are great for gathering quantitative insights — but a nightmare to analyze. That’s one of the reasons many teams underuse them.

Now I offload survey analysis to AI. Here’s how:

- Feed in the survey results (ideally as a .csv)

- Explain the context

- Ask open-ended questions like “What are the most surprising insights here?”

- Follow up with specific questions

Analyzing a 1000-response survey used to take me 2–3 days. Now, it’s a 30-minute job.
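Before handing the file over, it can help to sanity-check the export yourself so you know what the AI should be seeing. The sketch below is a minimal illustration using only Python’s standard library. The column names (`plan`, `satisfaction`) and the sample rows are hypothetical stand-ins for a real survey export.

```python
import csv
import io
from collections import Counter

# Hypothetical survey export; in practice you would read your own .csv file.
SAMPLE_CSV = """respondent_id,plan,satisfaction
1,free,4
2,paid,5
3,free,2
4,paid,4
5,free,
"""

def summarize_survey(csv_text: str, column: str) -> dict:
    """Count answers in one column, tracking blank responses separately."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    blanks = 0
    for row in reader:
        value = (row.get(column) or "").strip()
        if value:
            counts[value] += 1
        else:
            blanks += 1
    return {"column": column, "counts": dict(counts), "blank": blanks}

def to_prompt_context(summary: dict) -> str:
    """Render the counts as plain text to paste alongside the raw file."""
    lines = [f"Column '{summary['column']}' ({summary['blank']} blank):"]
    for answer, n in sorted(summary["counts"].items()):
        lines.append(f"- {answer}: {n}")
    return "\n".join(lines)

if __name__ == "__main__":
    print(to_prompt_context(summarize_survey(SAMPLE_CSV, "satisfaction")))
```

Pasting a summary like this next to the raw file gives you a baseline to compare against when the AI reports counts or percentages.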

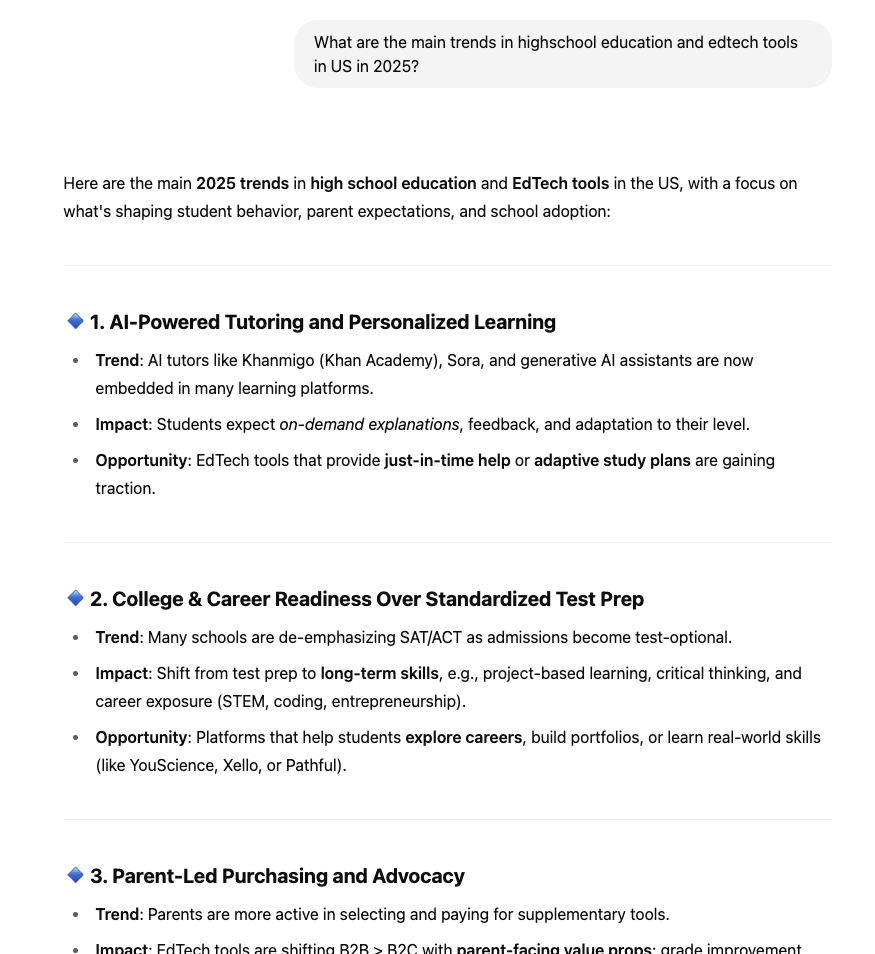

Trend analysis

AI helps me stay on top of industry trends in two ways:

Simple query

Just ask AI every two weeks or so to analyze all the latest news on a specific topic and highlight any meaningful changes and shifts:

Agentic AI

For more advanced use cases, you can build your own AI agent. I use one like that. Here’s how it works:

- I collect weekly news from Google News (for specific keywords), Visualping.io (to track competitor site changes), and industry blogs

- Zapier exports everything into a .csv

- GPT API processes it and summarizes key trends

- Zapier sends me a summary via email

This way, I basically built a curated weekly newsletter with all the info I need.
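The summarization step in the middle of that pipeline could look roughly like the sketch below. It is not my exact setup: the CSV columns (`source`, `title`), the prompt wording, and the `call_model` parameter are all assumptions made for illustration. `call_model` is a placeholder for whatever function sends a prompt to your model provider and returns text, so no specific API is implied.

```python
import csv
import io
from typing import Callable

def build_trend_prompt(csv_text: str, max_items: int = 50) -> str:
    """Turn exported news rows into one prompt asking for key trends."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))[:max_items]
    bullet_lines = [f"- [{r['source']}] {r['title']}" for r in rows]
    return (
        "Summarize the key trends and meaningful changes in these items "
        "from the past week:\n" + "\n".join(bullet_lines)
    )

def weekly_digest(csv_text: str, call_model: Callable[[str], str]) -> str:
    """Build the prompt and delegate to the injected model call."""
    return call_model(build_trend_prompt(csv_text))

if __name__ == "__main__":
    # Made-up export rows, for illustration only.
    export = (
        "source,title\n"
        "Google News,App X drops free trial\n"
        "Blog,Weekly plans on the rise\n"
    )
    # Stand-in for a real model call: it simply echoes the prompt back.
    fake_model = lambda prompt: "DIGEST:\n" + prompt
    print(weekly_digest(export, fake_model))
```

Keeping the model call injectable means you can test the prompt-building logic without spending API credits.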

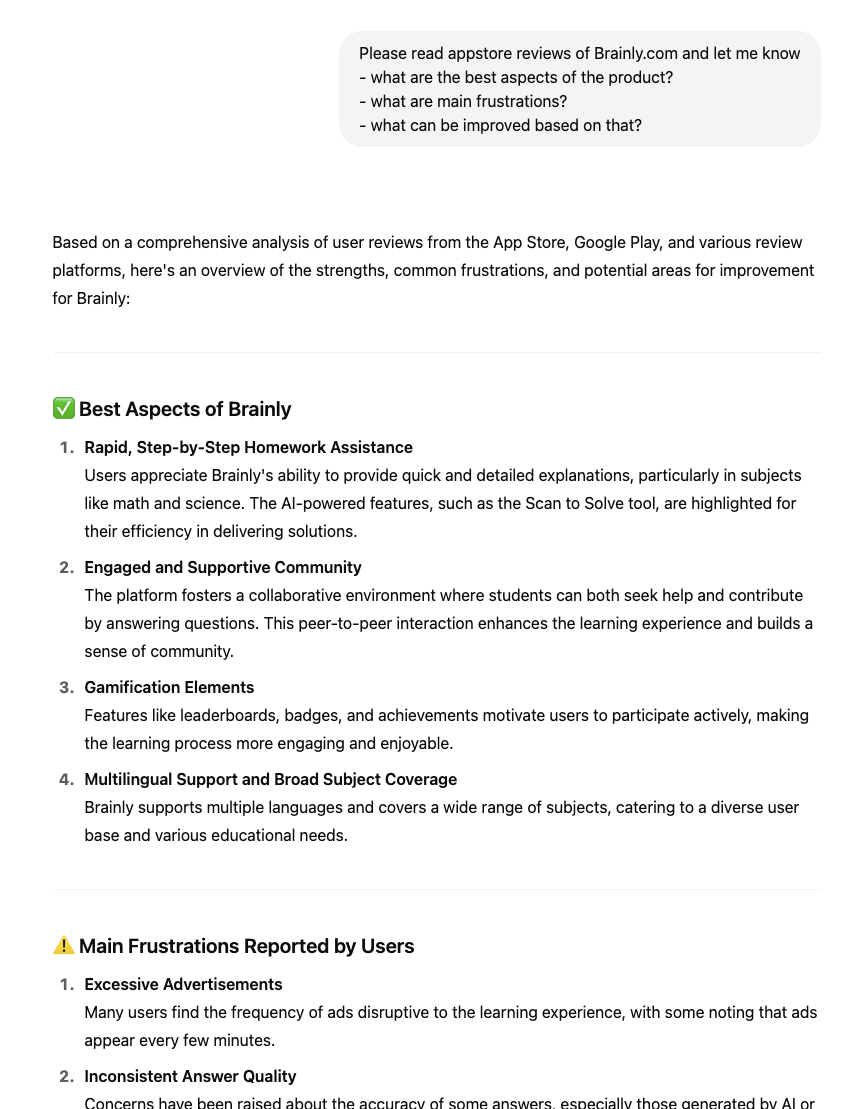

Automated sentiment analysis

You can learn a lot about users and their needs from online comments and product reviews.

Pick either your product or a competitor’s product and scrape relevant user comments, for example, using webscraper.io or a similar tool. Then:

- Feed them to an AI assistant

- Ask it to identify what’s working, what’s broken, key user needs, and perceptions

Alternatively, many premium models can do the scraping themselves.

You can also feed this data into your AI persona to make it even smarter.
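Scraped comments usually contain duplicates, and a large dump may not fit into a single prompt. As a rough sketch of the cleanup you might do before feeding them in, the helpers below remove repeats and split the rest into batches. The `max_chars` budget is an arbitrary assumption; pick a limit that suits the model you use.

```python
def dedupe_comments(comments: list[str]) -> list[str]:
    """Drop empty and repeated comments, ignoring case and extra whitespace."""
    seen = set()
    unique = []
    for text in comments:
        cleaned = " ".join(text.split())
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique

def batch_comments(comments: list[str], max_chars: int = 2000) -> list[list[str]]:
    """Group comments into batches whose combined length does not exceed max_chars.

    A single comment longer than max_chars still gets a batch of its own.
    """
    batches, current, size = [], [], 0
    for text in comments:
        if current and size + len(text) > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(text)
        size += len(text)
    if current:
        batches.append(current)
    return batches

if __name__ == "__main__":
    # Made-up comments, for illustration only.
    raw = ["Great app!", "great  app!", "", "Crashes on login", "Too expensive"]
    for batch in batch_comments(dedupe_comments(raw), max_chars=30):
        print(batch)
```

Each batch can then go to the assistant with the same questions, and the answers can be merged in a final pass.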

Rapid prototyping

Lastly, AI enables us to build prototypes and Minimum Viable Products (MVPs) more quickly, allowing us to test ideas with users faster.

Instead of building a half-baked paper prototype or investing expensive development time to build an MVP, use tools such as Figma AI and Lovable to quickly turn a sketch into a design and then turn that design into a working product.

AI-built products are still far from perfect, especially in terms of technical complexity, but it’s more effective to test 10 imperfect prototypes than one custom-made one.

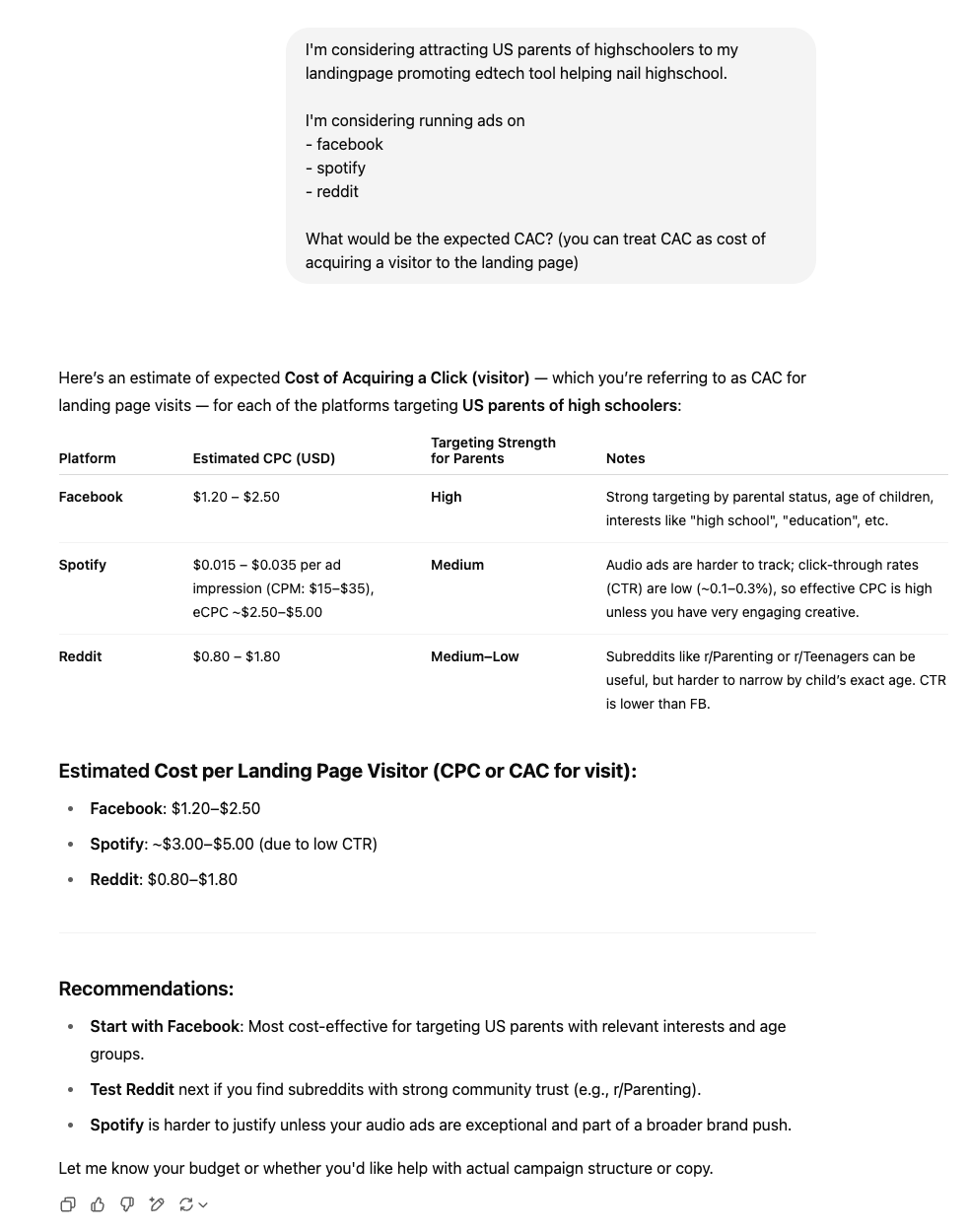

CAC and LTV estimations

Assess the economic viability of the idea by asking AI for estimates on customer acquisition cost and potential lifetime value.

Based on your description of the product and the target user segment, AI can suggest acquisition methods and keywords that may be effective, along with their current costs. It can also guesstimate how much cash you can capture from that segment for this type of product.

The more data you feed it, the better the outcome. Ideally, use your trained AI persona to come up with these estimates. Also, ensure you use the latest and most advanced models, as well as Deep Research mode (if using ChatGPT), to obtain more than a mere guess.

Although these estimates are far from perfect, they can still give you a sense of potential CAC and LTV, making it easier to compare ideas.
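Once you have rough inputs, comparing ideas is simple arithmetic. The sketch below uses a common simplification that is my assumption rather than anything the AI returns: CAC is spend divided by customers acquired, and LTV is monthly revenue per user times gross margin divided by monthly churn. The idea names and all numbers are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class IdeaEstimate:
    name: str
    acquisition_spend: float         # total marketing and sales spend
    new_customers: int               # customers acquired with that spend
    monthly_revenue_per_user: float
    gross_margin: float              # fraction between 0 and 1
    monthly_churn: float             # fraction of customers lost per month

    def cac(self) -> float:
        return self.acquisition_spend / self.new_customers

    def ltv(self) -> float:
        # Expected customer lifetime in months is roughly 1 / churn.
        return self.monthly_revenue_per_user * self.gross_margin / self.monthly_churn

    def ltv_to_cac(self) -> float:
        return self.ltv() / self.cac()

# Hypothetical estimates for two competing ideas.
ideas = [
    IdeaEstimate("Weekly plan", 10_000, 400, 12.0, 0.8, 0.20),
    IdeaEstimate("Annual plan", 10_000, 200, 8.0, 0.8, 0.04),
]

# Rank ideas by LTV-to-CAC ratio, highest first.
for idea in sorted(ideas, key=IdeaEstimate.ltv_to_cac, reverse=True):
    print(f"{idea.name}: CAC={idea.cac():.2f} LTV={idea.ltv():.2f} ratio={idea.ltv_to_cac():.2f}")
```

The absolute numbers will be off, but the ranking is often enough to decide which idea deserves a closer look.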

AI proofs of concept

Say you have a technically challenging initiative to pull off, and you are not sure if it’s even possible with your current tech stack and architecture.

Ask AI for a proof of concept, that is, a technically working piece of code that solves the issue you have.

Odds are it won’t be production-ready and will require a lot of manual input and rewriting anyway, but it can give you a sense of how solvable the challenge is and what technical approaches are possible.

Heuristic evaluation and accessibility testing

Want to have a second opinion on the usability of your designs? AI is a solid partner for that as long as you ask for very standardized feedback.

Start with heuristic evaluation. Ask AI specifically to conduct a heuristic evaluation, and you’ll get a nice summary of where your designs might lack in usability.

Same for accessibility. AI can quickly check your designs (or a working product) against WCAG standards and point out where you are lacking.

It sometimes hallucinates and gives strange opinions, but as long as you treat its recommendations as a starting point rather than implementing them blindly, you should be good.
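Some accessibility checks are deterministic, which makes them a handy cross-check on AI feedback. As one example, the sketch below computes the color contrast ratio using the relative luminance and contrast formulas from WCAG 2.x, then checks it against the 4.5:1 AA threshold for normal-size text. The function names and the colors are my own choices for illustration.

```python
def _channel(value: int) -> float:
    """Linearize one 8-bit sRGB channel per the WCAG 2.x definition."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)

def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

def passes_aa_normal_text(foreground: str, background: str) -> bool:
    """WCAG AA requires a ratio of at least 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5

if __name__ == "__main__":
    for fg in ("#000000", "#767676", "#777777"):
        ratio = contrast_ratio(fg, "#FFFFFF")
        print(fg, round(ratio, 2), passes_aa_normal_text(fg, "#FFFFFF"))
```

If the AI flags a contrast problem, or fails to flag one, a check like this settles the question in seconds.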

Closing thoughts

These are just a few techniques I use regularly. But AI can also:

- Score features based on user value

- Estimate dev effort

- Create new concepts from scratch

- Benchmark competitors

As you can see from the examples, you don’t need advanced prompts. Simple questions are often enough. Of course, the more context and data you provide, the better answers you’ll get, but sometimes done is better than perfect.

Just remember that AI is a great partner, not an oracle. It hallucinates. It gets things wrong. I trust it for small decisions, but for strategic ones, I treat its input as early feedback.

Still — after months of using AI to validate ideas — I can’t imagine going back. Especially when it comes to AI personas. The ability to “talk to users” anytime? Game-changer.

Let’s be real. Those who use AI will outperform those who don’t. Which one do you want to be?

The post 10+ ways AI helps me validate ideas without interviews or surveys appeared first on LogRocket Blog.
