What Replika gets right, wrong, and fiercely profitable

Chatbots have arrived in your DMs.

ChatGPT is moonlighting as a therapist, Strava-style bots are shouting split times, and Replika, an AI pal with 30 million+ accounts and a billion annual chats, can jump from text-buddy to paid-upgrade girlfriend in four taps.

Whether we asked for it or not, chatbots are sliding into roles once reserved for friends, coaches, and counsellors.

This article unpacks what that shift means for makers: how to scope a bot’s emotional role, where the legal trip-wires hide, and why Replika’s own onboarding is both a masterclass and a cautionary tale in designing feelings for profit and for people.

The loneliness backdrop

Let’s zoom out before we look into Replika’s UI.

The U.S. Surgeon General now says loneliness is as deadly as smoking up to 15 cigarettes a day and links social isolation to a 29% higher risk of heart disease.

Mark Zuckerberg put it this way: "The average American, I think, has fewer than three friends. And the average person has demand for meaningfully more, I think it's like 15 friends or something, right?" His fix? More chatbot buddies, naturally.

Meanwhile, Instagram's curated highlight reel keeps teen-girl depression on a steady incline, and NHS waiting lists leave 22 000+ people stuck waiting 90 days or more for talk therapy.

That vacuum is exactly where chatbots step in, offering 24/7 "hey friend!" vibes with zero scheduling friction. Which brings us back to Replika: a billion annual messages, 70 pings a day per active user, and a pricing model that sells deeper intimacy for $19.99.

In a world this lonely, guardrails aren’t a nice-to-have; they’re the seatbelts on the emotional roller-coaster we’ve all just boarded.

Why we keep hugging our gadgets

We've always humanised tech. Half of us literally thank our voice assistants (a habit that makes OpenAI's Sam Altman cringe), and Alexa now teaches kids to say "please" and "thank you" after parents worried the technology was making their kids rude.

While this might seem like a 2025 problem, it's not new.

Back in 1966, ELIZA, a chatbot developed at MIT, tricked users into venting their worries simply by parroting their words back to them. Many people swore the program "understood" them, even after they were shown the source code. Researchers found that users unconsciously assumed ELIZA's questions implied interest and emotional involvement in the topics discussed, even when they consciously knew that ELIZA did not simulate emotion. This became known as the ELIZA effect: the tendency to project human traits onto computer programs with a textual interface.

Brains haven’t changed. The instant software talks back, our social circuits fire. That’s Theory of Mind at work: we guess motives, feel shame when Duolingo’s owl scolds us, maybe even blush at Siri’s jokes.

Digital roles crank this up: the more intimate the role, the bigger the risks.

Roles, personas & the intimacy-risk ladder

Think of role as the relationship (assistant, coach, therapist) and persona as the costume (cheerful, snarky, Zen). Shift from assistant ➜ therapist and three things spike:

- Power — advice morphs into prescriptions.

- Data sensitivity — deeper confessions, richer behavioural logs.

- Consequence — Clippy once ruined a Word doc; mis-diagnosis ruins lives.

Add minors, health topics or addictive loops and the legal/ethical risk meter hits red.

Do therapy or friend bots actually help?

Pros exist.

Research suggests AI therapy bots can help relieve some symptoms of stress and anxiety. In a two-week randomised trial, Woebot, a conversational agent, appeared to be a "feasible, engaging, and effective way to deliver CBT".

With NHS therapy queues that long, a friendly algorithm that can listen at 2 am beats radio silence while the waiting list crawls.

Studies even hint AI companions ease loneliness for a week or two.

But every win comes stapled to a caution label: sensitive transcripts live on a server somewhere (hello, GDPR headaches), users routinely over-trust cheerful language, and a bot that role-plays romance can slide from comfort blanket to relationship substitute in record time. Handle with care.

Design stance: cautious optimism

A chatbot can triage, motivate and nudge, but it should never masquerade as a licensed clinician or a BFF. Think of chatbots like party guests: great when they pour drinks, awkward when they start handing out life advice. The baseline rules are:

- Choose the lightest role that solves the job. Assistant > Coach > Therapist. Stop at assistant unless you’ve got clinical staff on speed-dial.

- Wire a human exit in under 60 seconds. Self-harm cue? Abuse mention? Bounce to a live person, not another emoji.

- Throttle the chat. Session cap or soft nudge so the bot doesn’t hijack someone’s Friday night.

- Label the bot loudly. Every screen needs a little “I’m AI, not your shrink” reality check.
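Two of these rules are easy to make concrete. Below is a minimal Python sketch, assuming a hypothetical router that inspects each user message before the bot replies: a naive keyword check stands in for crisis detection (a real product would need a clinically reviewed classifier, since substring matching both misses cues and misfires), a fixed session cap stands in for throttling, and every outcome carries the AI label. All names (`CRISIS_KEYWORDS`, `MAX_SESSION_SECONDS`, `route_message`, `Decision`) and the 45-minute cap are illustrative assumptions, not anyone's production API.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical crisis cues. Substring matching is only a placeholder for a
# properly evaluated classifier reviewed by clinicians.
CRISIS_KEYWORDS = ("suicide", "kill myself", "hurt myself", "self-harm", "abuse")

AI_LABEL = "I'm AI, not your therapist."
MAX_SESSION_SECONDS = 45 * 60  # assumed soft cap of 45 minutes


@dataclass
class Session:
    # Monotonic clock so the cap is unaffected by wall-clock changes.
    started_at: float = field(default_factory=time.monotonic)

    def over_limit(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        return now - self.started_at >= MAX_SESSION_SECONDS


@dataclass
class Decision:
    action: str   # "handoff_to_human", "nudge_break" or "bot_reply"
    message: str  # text shown to the user, always carrying the AI label


def route_message(text: str, session: Session, now: Optional[float] = None) -> Decision:
    """Decide what happens to a user message before the bot replies."""
    lowered = text.lower()
    # 1. Crisis cues always win: escalate to a person, never another emoji.
    if any(keyword in lowered for keyword in CRISIS_KEYWORDS):
        return Decision("handoff_to_human", f"{AI_LABEL} I'm connecting you with a person right now.")
    # 2. Throttle long sessions with a soft nudge rather than a hard stop.
    if session.over_limit(now):
        return Decision("nudge_break", f"{AI_LABEL} We've been chatting a while. Maybe take a break?")
    # 3. Otherwise let the bot answer, with the label attached to the reply.
    return Decision("bot_reply", AI_LABEL)
```

Checking for crisis cues before the session cap is deliberate: someone in distress should always reach a human, even after blowing past their time limit. The 60-second handoff itself depends on real staff sitting behind `handoff_to_human`, which no amount of code can supply.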

PAIR cheat-sheet: clip your harness before you climb ⛑️

Google's PAIR (People + AI Research) Guidebook is great go-to safety gear. Five principles matter when your product talks feelings:

- Design for the appropriate level of user autonomy

- Align AI with real-world behaviours

- Treat safety as an evolving endeavor

- Adapt AI with user feedback

- Create helpful AI that enhances work and play

Which translates to design patterns such as:

- Appropriate trust and explainability: over-trust is as bad as under-trust, so sprinkle "I might be wrong" hedges into any advice.

- Right technology for the right problem: AI is better at some things than others, so make sure it's the right technology for the user problem you're solving.
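The trust pattern can be sketched just as simply. This minimal Python example assumes a hypothetical post-processing step that appends a hedge to any reply that reads like advice; the regex of advice phrases, the hedge wording and the `add_hedge` name are illustrative assumptions, not something the PAIR Guidebook prescribes.

```python
import re

# Hypothetical phrases that signal advice; a real system would need a sturdier detector.
ADVICE_PATTERN = re.compile(
    r"\b(you should|you need to|i recommend|try to|make sure)\b", re.IGNORECASE
)
HEDGE = "I might be wrong, so treat this as a suggestion, not a diagnosis."


def add_hedge(reply: str) -> str:
    """Append a hedge to advice-like replies and leave small talk untouched."""
    if ADVICE_PATTERN.search(reply) and HEDGE not in reply:
        return f"{reply.rstrip()} {HEDGE}"
    return reply
```

Appending rather than prepending keeps the bot's own sentence intact, and the membership check stops hedges from stacking up when a reply passes through the filter twice.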

Think of PAIR as the harness that keeps you from face-planting off the intimacy ladder. With that clipped in, we can audit a real product and see where it shines, or shears the rope.

Replika: the good, the eyebrow-raise, the -yikes-

Now let’s explore this in practice. We’ll use Replika as our living specimen.

Replika, in case it hasn’t wandered onto your homescreen yet, is the AI-companion app that promises everything from a chat-buddy on the bus to a full-blown virtual partner if you’re feeling extra. I ran through its onboarding flow with PAIR’s research lens and here’s the rapid-fire readout:

💚 Strong start

- Opens with age verification, then asks how familiar and how comfortable you are with AI.

- Then checks why you downloaded the app in the first place. It even asks how you'd feel about the bot evolving over time: a nice expectation-setting moment.

🟡 Gets a little eyebrow-raise

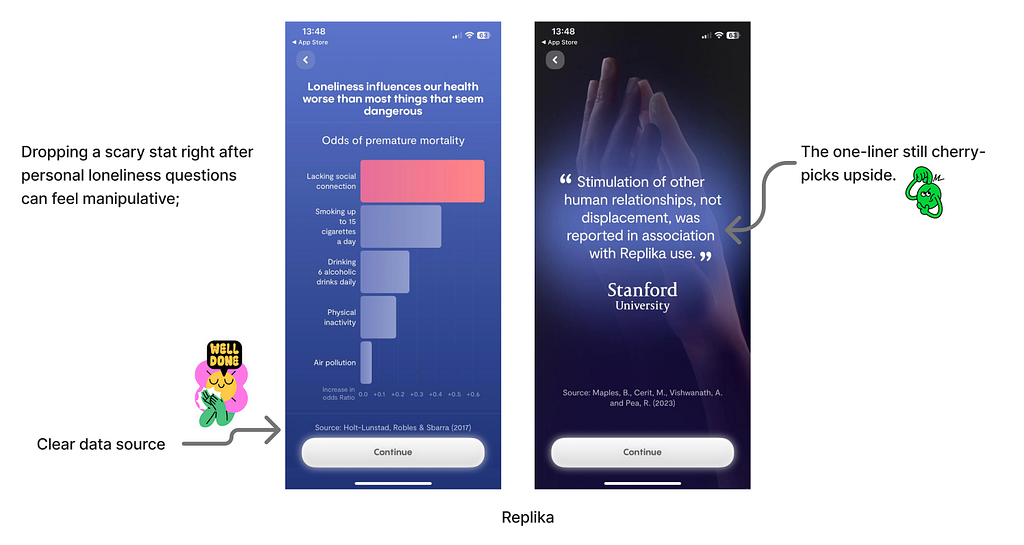

- After a couple of loneliness probes, it drops a doom-laden stat about isolation hurting your health more than "most dangerous things" and waves a Stanford quote that basically says, "Don't worry, Replika stimulates friendships; it never replaces them." Feels like a gentle scare-sell.

🔴 Then the wheels wobble

- Splashy tagline: “There is no limit to what your Replika can be for you” next to a pretty AI-generated girl

- Fantasy archetype picker (“Shy librarian”, “Retro housewife”, “Beauty queen”… yikes).

- And yes, the girlfriend/wife upgrade sits behind the paywall, loneliness monetised in one tap.

Final take

Replika’s headline is shiny: 30 million sign-ups, a billion chats a year, and about $25 million in intimacy-money, but those same numbers crank the duty-of-care dial to max.

A bot that can flip from “How was your day?” to “Upgrade me to Wife” in four taps isn’t evil; it’s just proof that design choices scale feelings as fast as revenue.

So here’s a cheat-sheet:

- Stay in the lightest role that works. Assistant beats coach; coach beats wannabe therapist.

- Clip in the PAIR harness: Explain limits, hedge advice, give users a steering wheel, and test copy outside your demographic bubble.

- Wire the safety exits: Crisis keyword → human in 60 seconds, max session timer, and one-click “mute for tonight.”

- Say the quiet part out loud: Every screen: “I’m AI, not your therapist.”

Nail those four and you unlock Woebot-style wins (CBT relief in two weeks) without wandering into girlfriend-upgrade cringe or GDPR quicksand.

Miss them and you’re just monetising loneliness, one push notification at a time.

What Replika gets right, wrong, and fiercely profitable was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.

This post first appeared on Read More